Next.js on Vercel

Next.js is a fullstack React framework for the web, maintained by Vercel.

While Next.js works when self-hosting, deploying to Vercel is zero-configuration and provides additional enhancements for scalability, availability, and performance globally.

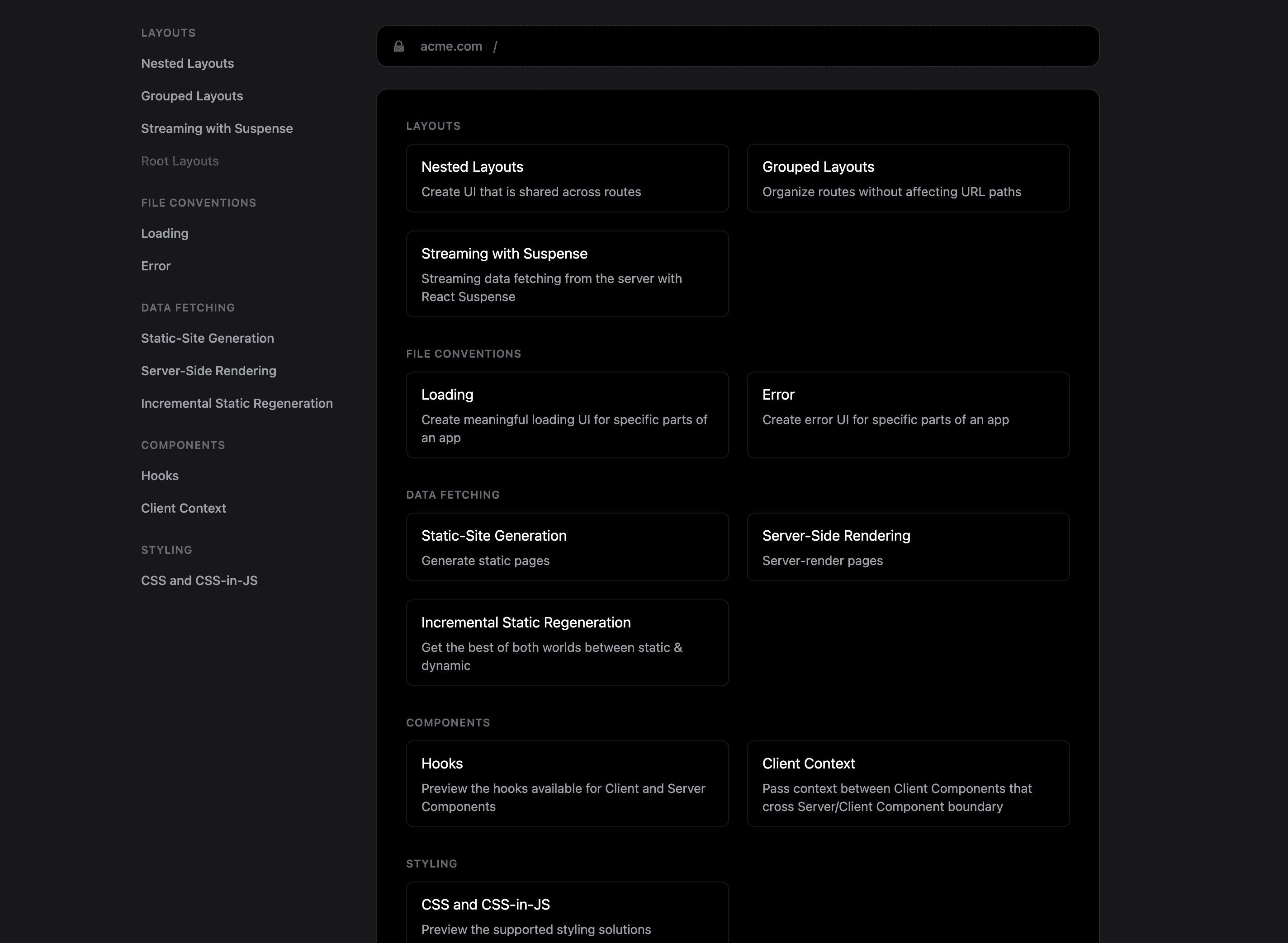

To get started with Next.js on Vercel:

- If you already have a project with Next.js, install Vercel CLI and run the vercel command from your project's root directory

- Clone one of our Next.js example repos to your favorite git provider and deploy it on Vercel

- Or, choose a template from Vercel's marketplace

Vercel deployments can integrate with your git provider to generate preview URLs for each pull request you make to your Next.js project.

Incremental Static Regeneration (ISR) allows you to create or update content without redeploying your site. ISR has three main benefits for developers: better performance, improved security, and faster build times.

When self-hosting, ISR is limited to a single-region workload. Statically generated pages are not distributed closer to visitors by default without additional configuration or vendoring of a CDN. By default, self-hosted ISR does not persist generated pages to durable storage. Instead, these files are located in the Next.js cache (which expires).

To enable ISR with Next.js in the app router, add an options object with a revalidate property to your fetch requests:

    export default async function Page() {
      const res = await fetch('https://api.vercel.app/blog', {
        next: { revalidate: 10 }, // Seconds
      });
      const data = await res.json();

      return (
        <main>
          <pre>{JSON.stringify(data, null, 2)}</pre>
        </main>
      );
    }

With the Pages Router, return a revalidate property from getStaticProps instead:

    export async function getStaticProps() {
      /* Fetch data here */

      return {
        props: {
          /* Add something to your props */
        },
        revalidate: 10, // Seconds
      };
    }

To summarize, using ISR with Next.js on Vercel:

- Better performance with our global CDN

- Zero-downtime rollouts to previously statically generated pages

- Framework-aware infrastructure enables global content updates in 300ms

- Generated pages are both cached and persisted to durable storage
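The time-based behavior behind revalidate: 10 can be illustrated with a small in-memory model. This is a sketch only, not how Next.js or Vercel implement ISR: createRevalidatingCache, its parameters, and the injected clock are hypothetical names chosen for illustration. It assumes that a request arriving after the revalidation window still receives the previously generated page while a fresh one is produced for later requests.

```typescript
// Simplified model of time-based revalidation. All names here are hypothetical.
interface CachedPage {
  html: string;
  generatedAt: number; // milliseconds
}

function createRevalidatingCache(
  revalidateSeconds: number,
  render: (path: string) => string,
  now: () => number = () => Date.now(),
) {
  const pages = new Map<string, CachedPage>();

  // Renders the page and stores it with the current timestamp.
  function regenerate(path: string): CachedPage {
    const page: CachedPage = { html: render(path), generatedAt: now() };
    pages.set(path, page);
    return page;
  }

  return {
    get(path: string): string {
      const cached = pages.get(path);
      // First request for this path: generate the page and cache it.
      if (cached === undefined) {
        return regenerate(path).html;
      }
      const ageSeconds = (now() - cached.generatedAt) / 1000;
      if (ageSeconds > revalidateSeconds) {
        // Stale: store a fresh page for later requests, but answer this
        // request with the previously generated one. A real system would
        // regenerate in the background rather than inline.
        regenerate(path);
      }
      return cached.html;
    },
  };
}
```

With a 10-second window, a request arriving at 11 seconds would still receive the first version in this model, and the request after it would receive the regenerated one.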

Learn more about Incremental Static Regeneration (ISR)

Server-Side Rendering (SSR) allows you to render pages dynamically on the server. This is useful for pages where the rendered data needs to be unique on every request, for example when checking authentication or looking at the location of an incoming request.

On Vercel, you can server-render Next.js applications through Vercel Functions.

To summarize, SSR with Next.js on Vercel:

- Scales to zero when not in use

- Scales automatically with traffic increases

- Has zero-configuration support for Cache-Control headers, including stale-while-revalidate

- Framework-aware infrastructure enables automatic creation of Functions for SSR
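As a rough illustration of the Cache-Control support mentioned above, the sketch below builds a header value that combines s-maxage with stale-while-revalidate. The buildCacheControl helper and the CachePolicy type are hypothetical names, and the durations are arbitrary examples rather than recommended values.

```typescript
// Hypothetical helper that builds a Cache-Control value for a server-rendered response.
interface CachePolicy {
  sMaxAge: number; // seconds a shared cache (CDN) may treat the response as fresh
  staleWhileRevalidate?: number; // seconds a stale response may be served while revalidating
}

function buildCacheControl(policy: CachePolicy): string {
  const directives = [`s-maxage=${policy.sMaxAge}`];
  if (policy.staleWhileRevalidate !== undefined) {
    directives.push(`stale-while-revalidate=${policy.staleWhileRevalidate}`);
  }
  return directives.join(', ');
}

// In a Pages Router page, the value could be set inside getServerSideProps:
//   res.setHeader(
//     'Cache-Control',
//     buildCacheControl({ sMaxAge: 10, staleWhileRevalidate: 59 }),
//   );
```

With these example values, the CDN would treat the response as fresh for 10 seconds and could keep serving it for up to 59 more seconds while a new response is rendered.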

Vercel supports streaming in Next.js projects with any of the following:

- Route Handlers

- Vercel Functions

- React Server Components

Streaming data allows you to fetch information in chunks rather than all at once, speeding up Function responses. You can use streams to improve your app's user experience and prevent your functions from failing when fetching large files.
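Chunked delivery can be illustrated without any framework code. The sketch below uses a hypothetical toChunks generator to split a payload into pieces that a consumer can process one at a time. In a streaming Function, each piece would typically be written to the response as soon as it is available instead of waiting for the whole payload.

```typescript
// Hypothetical helper: yields a payload in fixed-size chunks, one at a time.
function* toChunks(payload: string, chunkSize: number): Generator<string> {
  if (chunkSize <= 0) {
    throw new RangeError('chunkSize must be positive');
  }
  for (let start = 0; start < payload.length; start += chunkSize) {
    // The consumer receives this chunk before the next one is produced.
    yield payload.slice(start, start + chunkSize);
  }
}
```

A consumer can iterate with for...of and handle each chunk as it arrives, which keeps memory use proportional to the chunk size rather than the full payload.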

In the Next.js App Router, you can use the loading file convention or a Suspense component to show an instant loading state from the server while the content of a route segment loads.

The loading file provides a way to show a loading state for a whole route or route-segment, instead of just particular sections of a page. This file affects all its child elements, including layouts and pages. It continues to display its contents until the data fetching process in the route segment completes.

The following example demonstrates a basic loading file:

    export default function Loading() {
      return <p>Loading...</p>;
    }

Learn more about loading in the Next.js docs.

The Suspense component, which gained streaming server rendering support in React 18, enables you to display a fallback until components nested within it have finished loading. Using Suspense is more granular than showing a loading state for an entire route, and is useful when only sections of your UI need a loading state.

You can specify a component to show during the loading state with the fallback prop on the Suspense component as shown below:

    import { Suspense } from 'react';
    import { PostFeed, Weather } from './components';

    export default function Posts() {
      return (
        <section>
          <Suspense fallback={<p>Loading feed...</p>}>
            <PostFeed />
          </Suspense>
          <Suspense fallback={<p>Loading weather...</p>}>
            <Weather />
          </Suspense>
        </section>
      );
    }

To summarize, using Streaming with Next.js on Vercel:

- Speeds up Function response times, improving your app's user experience

- Displays initial loading UI with incremental updates from the server as new data becomes available

Learn more about Streaming with Vercel Functions.

Partial Prerendering is an experimental feature. It is currently not suitable for production environments.

Partial Prerendering (PPR) is an experimental feature in Next.js that allows the static portions of a page to be pre-generated and served from the cache, while the dynamic portions are streamed in a single HTTP request.

When a user visits a route:

- A static route shell is served immediately, which makes the initial load fast.

- The shell leaves holes where dynamic content will be streamed in to minimize the perceived overall page load time.

- The async holes are loaded in parallel, reducing the overall load time of the page.

This approach is useful for pages like dashboards, where unique, per-request data coexists with static elements such as sidebars or layouts. This is different from how your application behaves today, where entire routes are either fully static or dynamic.

See the Partial Prerendering docs to learn more.

Image Optimization helps you achieve faster page loads by reducing the size of images and using modern image formats.

When deploying to Vercel, images are automatically optimized on demand, keeping your build times fast while improving your page load performance and Core Web Vitals.

When self-hosting, Image Optimization uses the default Next.js server for optimization. This server manages the rendering of pages and serving of static files.
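When self-hosting, one option for moving optimization work off the Next.js server is a custom loader, passed to next/image through its loader prop. The sketch below is a minimal illustration, assuming a hypothetical image service at images.example.com that accepts w and q query parameters. The host, the parameter names, and the fallback quality of 75 are all assumptions made for this example.

```typescript
// Shape of the argument that next/image passes to a loader function.
interface LoaderArgs {
  src: string;
  width: number;
  quality?: number;
}

// Hypothetical image service; replace with the optimizer you actually run.
const IMAGE_HOST = 'https://images.example.com';

// Returns the URL the browser should request for a given source and width.
function exampleLoader({ src, width, quality }: LoaderArgs): string {
  const path = src.startsWith('/') ? src : `/${src}`;
  return `${IMAGE_HOST}${path}?w=${width}&q=${quality ?? 75}`;
}

// Usage inside a component:
//   <Image loader={exampleLoader} src="/example.png" alt="Example picture" width={500} height={500} />
```

Because the loader is a plain function from source, width, and quality to a URL, it can be unit-tested without rendering any component.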

To use Image Optimization with Next.js on Vercel, import the next/image component into the component you'd like to add an image to, as shown in the following example:

    import Image from 'next/image';

    const ExampleComponent = (props) => {
      return (
        <>
          <Image src="/example.png" alt="Example picture" width={500} height={500} />
          <span>{props.name}</span>
        </>
      );
    };

    export default ExampleComponent;

The same component in TypeScript:

    import Image from 'next/image';

    interface ExampleProps {
      name: string;
    }

    const ExampleComponent = ({ name }: ExampleProps) => {
      return (
        <>
          <Image
            src="/example.png"
            alt="Example picture"
            width={500}
            height={500}
          />
          <span>{name}</span>
        </>
      );
    };

    export default ExampleComponent;

To summarize, using Image Optimization with Next.js on Vercel:

- Zero-configuration Image Optimization when using next/image

- Helps your team ensure great performance by default

- Keeps your builds fast by optimizing images on-demand

- Requires no additional services to procure or set up

Learn more about Image Optimization

next/font enables built-in automatic self-hosting for any font file. This means you can optimally load web fonts with zero layout shift, thanks to the underlying CSS size-adjust property.

This also allows you to use all Google Fonts with performance and privacy in mind. CSS and font files are downloaded at build time and self-hosted with the rest of your static files. No requests are sent to Google by the browser.

With the Pages Router, apply the font in your custom App component:

    import { Inter } from 'next/font/google';

    // If loading a variable font, you don't need to specify the font weight
    const inter = Inter({ subsets: ['latin'] });

    export default function MyApp({ Component, pageProps }) {
      return (
        <main className={inter.className}>
          <Component {...pageProps} />
        </main>
      );
    }

The same component in TypeScript:

    import { Inter } from 'next/font/google';
    import type { AppProps } from 'next/app';

    // If loading a variable font, you don't need to specify the font weight
    const inter = Inter({ subsets: ['latin'] });

    export default function MyApp({ Component, pageProps }: AppProps) {
      return (
        <main className={inter.className}>
          <Component {...pageProps} />
        </main>
      );
    }

With the App Router, apply the font in the root layout:

    import { Inter } from 'next/font/google';

    // If loading a variable font, you don't need to specify the font weight
    const inter = Inter({
      subsets: ['latin'],
      display: 'swap',
    });

    export default function RootLayout({ children }) {
      return (
        <html lang="en" className={inter.className}>
          <body>{children}</body>
        </html>
      );
    }

The same layout in TypeScript:

    import { Inter } from 'next/font/google';

    // If loading a variable font, you don't need to specify the font weight
    const inter = Inter({
      subsets: ['latin'],
      display: 'swap',
    });

    export default function RootLayout({
      children,
    }: {
      children: React.ReactNode;
    }) {
      return (
        <html lang="en" className={inter.className}>
          <body>{children}</body>
        </html>
      );
    }

To summarize, using Font Optimization with Next.js on Vercel:

- Enables built-in, automatic self-hosting for font files

- Loads web fonts with zero layout shift

- Allows for CSS and font files to be downloaded at build time and self-hosted with the rest of your static files

- Ensures that no requests are sent to Google by the browser

Learn more about Font Optimization

Dynamic social card images (using the Open Graph protocol) allow you to create a unique image for every page of your site. This is useful when sharing links on the web through social platforms or through text message.

The Vercel OG image generation library allows you to generate fast, dynamic social card images using Next.js API Routes.

The following example demonstrates using OG image generation in both the Next.js Pages and App Router:

Pages Router:

    import { ImageResponse } from '@vercel/og';

    export default function () {
      return new ImageResponse(
        (
          <div
            style={{
              fontSize: 128,
              background: 'white',
              width: '100%',
              height: '100%',
              display: 'flex',
              textAlign: 'center',
              alignItems: 'center',
              justifyContent: 'center',
            }}
          >
            Hello world!
          </div>
        ),
        {
          width: 1200,
          height: 600,
        },
      );
    }

App Router (TypeScript):

    import { ImageResponse } from 'next/og';

    // App router includes @vercel/og.
    // No need to install it.
    export async function GET(request: Request) {
      return new ImageResponse(
        (
          <div
            style={{
              fontSize: 128,
              background: 'white',
              width: '100%',
              height: '100%',
              display: 'flex',
              textAlign: 'center',
              alignItems: 'center',
              justifyContent: 'center',
            }}
          >
            Hello world!
          </div>
        ),
        {
          width: 1200,
          height: 600,
        },
      );
    }

App Router (JavaScript):

    import { ImageResponse } from 'next/og';

    // App router includes @vercel/og.
    // No need to install it.
    export async function GET(request) {
      return new ImageResponse(
        (
          <div
            style={{
              fontSize: 128,
              background: 'white',
              width: '100%',
              height: '100%',
              display: 'flex',
              textAlign: 'center',
              alignItems: 'center',
              justifyContent: 'center',
            }}
          >
            Hello world!
          </div>
        ),
        {
          width: 1200,
          height: 600,
        },
      );
    }

To see your generated image, run npm run dev in your terminal and visit the /api/og route in your browser (most likely http://localhost:3000/api/og).
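To make the card differ per page, the text can be derived from the request URL instead of being hard-coded. The sketch below is one possible approach, assuming a hypothetical title query parameter. The getCardTitle helper, the fallback text, and the 100-character limit are illustrative choices, not part of the library.

```typescript
// Reads an optional ?title= query parameter from the request URL.
// The parameter name, fallback, and length limit are assumptions for this sketch.
function getCardTitle(
  requestUrl: string,
  fallback: string = 'Hello world!',
  maxLength: number = 100,
): string {
  const title = new URL(requestUrl).searchParams.get('title');
  if (title === null || title.trim() === '') {
    return fallback;
  }
  // Trim whitespace and cap the length so long titles do not overflow the card.
  return title.trim().slice(0, maxLength);
}

// Inside the App Router handler, the result could replace the static text:
//   const title = getCardTitle(request.url);
```

Visiting /api/og?title=My%20Post would then render "My Post" in place of the default text.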

To summarize, the benefits of using Vercel OG with Next.js include:

- Instant, dynamic social card images without needing headless browsers

- Generated images are automatically cached on the Vercel CDN

- Image generation is co-located with the rest of your frontend codebase

Learn more about OG Image Generation

Middleware is code that executes before a request is processed. Because Middleware runs before the cache, it's an effective way of providing personalization to statically generated content.

When deploying middleware with Next.js on Vercel, you get access to built-in helpers that expose each request's geolocation information. You also get access to the NextRequest and NextResponse objects, which enable rewrites, continuing the middleware chain, and more.
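As a sketch of geolocation-based personalization, the routing decision can be kept in a small pure function that Middleware calls. On Vercel, the visitor's country is available through the x-vercel-ip-country request header. The resolveHomePath helper, the country list, and the localized paths below are hypothetical.

```typescript
// Maps a two-letter country code to a localized path.
// The supported countries and paths are hypothetical.
const LOCALIZED_HOME: Record<string, string> = {
  DE: '/de',
  FR: '/fr',
  JP: '/ja',
};

// Returns the path to serve for a visitor from the given country.
function resolveHomePath(country: string | null): string {
  if (country === null) {
    return '/';
  }
  return LOCALIZED_HOME[country.toUpperCase()] ?? '/';
}

// Inside middleware.ts, the result could drive a rewrite:
//   const country = request.headers.get('x-vercel-ip-country');
//   return NextResponse.rewrite(new URL(resolveHomePath(country), request.url));
```

Keeping the decision separate from the NextRequest and NextResponse plumbing makes the routing rules easy to test in isolation.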

See the Middleware API docs for more information.

To summarize, Middleware with Next.js on Vercel:

- Runs in Middleware that is deployed globally

- Replaces needing additional services for customizable routing rules

- Helps you achieve the best performance for serving content globally

Draft Mode enables you to view draft content from your Headless CMS immediately, while still statically generating pages in production.

See our Draft Mode docs to learn how to use it with Next.js.

When self-hosting, every request using Draft Mode hits the Next.js server, potentially incurring extra load or cost. Further, by spoofing the cookie, malicious users could attempt to gain access to your underlying Next.js server.

Deployments on Vercel automatically secure Draft Mode behind the same authentication used for Preview Comments. In order to enable or disable Draft Mode, the viewer must be logged in as a member of the Team. Once enabled, Vercel's CDN will bypass the ISR cache automatically and invoke the underlying Vercel Function.

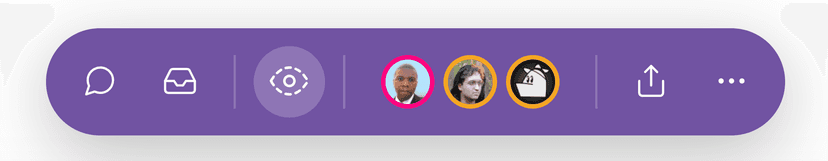

You and your team members can toggle Draft Mode in the Vercel Toolbar in production, localhost, and Preview Deployments. When you do so, the toolbar will become purple to indicate Draft Mode is active.

Users outside your Vercel team cannot toggle Draft Mode.

To summarize, the benefits of using Draft Mode with Next.js on Vercel include:

- Easily server-render previews of static pages

- Adds additional security measures to prevent malicious usage

- Integrates with any headless provider of your choice

- You can enable and disable Draft Mode in the comments toolbar on Preview Deployments

Vercel's Web Analytics features enable you to visualize and monitor your application's performance over time. The Analytics section in your project's dashboard offers detailed insights into your website's visitors, with metrics like top pages, top referrers, and user demographics.

To use Web Analytics, navigate to the Analytics section in your project dashboard sidebar on Vercel and select Enable in the modal that appears.

To track visitors and page views, we recommend first installing our @vercel/analytics package by running the terminal command below in the root directory of your Next.js project:

    pnpm i @vercel/analytics

Then, follow the instructions below to add the Analytics component to your app, using either the pages directory or the app directory.

With the pages directory, add the component to your custom App (TypeScript):

    import type { AppProps } from 'next/app';
    import { Analytics } from '@vercel/analytics/next';

    function MyApp({ Component, pageProps }: AppProps) {
      return (
        <>
          <Component {...pageProps} />
          <Analytics />
        </>
      );
    }

    export default MyApp;

The same component in JavaScript:

    import { Analytics } from '@vercel/analytics/next';

    function MyApp({ Component, pageProps }) {
      return (
        <>
          <Component {...pageProps} />
          <Analytics />
        </>
      );
    }

    export default MyApp;

The Analytics component is a wrapper around the tracking script, offering more seamless integration with Next.js, including route support.

Add the following code to the root layout:

    import { Analytics } from '@vercel/analytics/next';

    export default function RootLayout({
      children,
    }: {
      children: React.ReactNode;
    }) {
      return (
        <html lang="en">
          <head>
            <title>Next.js</title>
          </head>
          <body>
            {children}
            <Analytics />
          </body>
        </html>
      );
    }

The same layout in JavaScript:

    import { Analytics } from '@vercel/analytics/next';

    export default function RootLayout({ children }) {
      return (
        <html lang="en">
          <head>
            <title>Next.js</title>
          </head>
          <body>
            {children}
            <Analytics />
          </body>
        </html>
      );
    }

To summarize, Web Analytics with Next.js on Vercel:

- Enables you to track traffic and see your top-performing pages

- Offers you detailed breakdowns of visitor demographics, including their OS, browser, geolocation, and more

Learn more about Web Analytics

You can see data about your project's Core Web Vitals performance in your dashboard on Vercel. Doing so will allow you to track your web application's loading speed, responsiveness, and visual stability so you can improve the overall user experience.

On Vercel, you can track your Next.js app's Core Web Vitals in your project's dashboard.

If you're self-hosting your app, you can use the useReportWebVitals hook to send metrics to any analytics provider. The following example demonstrates a custom WebVitals component that you can use in your app's root layout file:

    'use client';

    import { useReportWebVitals } from 'next/web-vitals';

    export function WebVitals() {
      useReportWebVitals((metric) => {
        console.log(metric);
      });

      // This component only reports metrics and renders nothing.
      return null;
    }

You could then reference your custom WebVitals component like this:

    import { WebVitals } from './_components/web-vitals';

    export default function Layout({ children }) {
      return (
        <html>
          <body>
            <WebVitals />
            {children}
          </body>
        </html>
      );
    }

Next.js uses Google's web-vitals library to measure the Web Vitals metrics available in useReportWebVitals.
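When self-hosting, the callback usually forwards each metric to an analytics endpoint instead of logging it. The sketch below shows one way to serialize a metric before sending it. The toVitalsPayload helper, the payload shape, the rounding rules, and the /api/vitals endpoint in the comment are assumptions, and only a subset of the metric's fields is modeled.

```typescript
// Minimal subset of the metric object passed to the useReportWebVitals callback.
interface WebVitalMetric {
  id: string;
  name: string;
  value: number;
}

// Serializes a metric for an analytics endpoint.
// The payload shape and rounding are assumptions for this sketch.
function toVitalsPayload(metric: WebVitalMetric, path: string): string {
  return JSON.stringify({
    id: metric.id,
    name: metric.name,
    // CLS is a small unitless score, so keep decimals; round other values.
    value:
      metric.name === 'CLS'
        ? Number(metric.value.toFixed(4))
        : Math.round(metric.value),
    path,
  });
}

// In the browser, the callback could then send the payload, for example:
//   navigator.sendBeacon('/api/vitals', toVitalsPayload(metric, window.location.pathname));
```

Including the path in the payload allows the receiving service to group metrics by page.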

To summarize, tracking Web Vitals with Next.js on Vercel:

- Enables you to track traffic performance metrics, such as First Contentful Paint or First Input Delay

- Enables you to view performance analytics by page name and URL for more granular analysis

- Shows you a score for your app's performance on each recorded metric, which you can use to track improvements or regressions

Learn more about Speed Insights

Vercel has partnered with popular service providers, such as MongoDB and Sanity, to create integrations that make using those services with Next.js easier. There are many integrations across multiple categories, such as Commerce, Databases, and Logging.

To summarize, Integrations on Vercel:

- Simplify the process of connecting your preferred services to a Vercel project

- Help you achieve the optimal setup for a Vercel project using your preferred service

- Configure your environment variables for you
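Once an integration has configured environment variables, application code still needs to read them safely. The sketch below is a minimal helper that fails fast when an expected variable is missing. The requireEnv name and the MONGODB_URI variable in the comment are assumptions, so check the variable names your integration actually sets.

```typescript
// Reads a required variable from an environment-like object and
// throws a descriptive error when it is missing or empty.
function requireEnv(
  env: Record<string, string | undefined>,
  name: string,
): string {
  const value = env[name];
  if (value === undefined || value === '') {
    throw new Error(`Missing environment variable: ${name}`);
  }
  return value;
}

// Typical call site in a Next.js server module (variable name is an assumption):
//   const mongoUri = requireEnv(process.env, 'MONGODB_URI');
```

Passing the environment object as a parameter keeps the helper testable with a plain object instead of the real process environment.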

See our Frameworks documentation page to learn about the benefits available to all frameworks when you deploy on Vercel.

Learn more about deploying Next.js projects on Vercel with the following resources:

- Build a fullstack Next.js app

- Build a multi-tenant app

- Next.js with Contentful

- Next.js with Stripe Checkout and TypeScript

- Next.js with Magic.link

- Generate a sitemap with Next.js

- Next.js ecommerce with Shopify

- Deploy a locally built Next.js app

- Deploying Next.js to Vercel

- Learn about combining static and dynamic rendering on the same page in Next.js 14

- Learn about suspense boundaries and streaming when loading your UI
