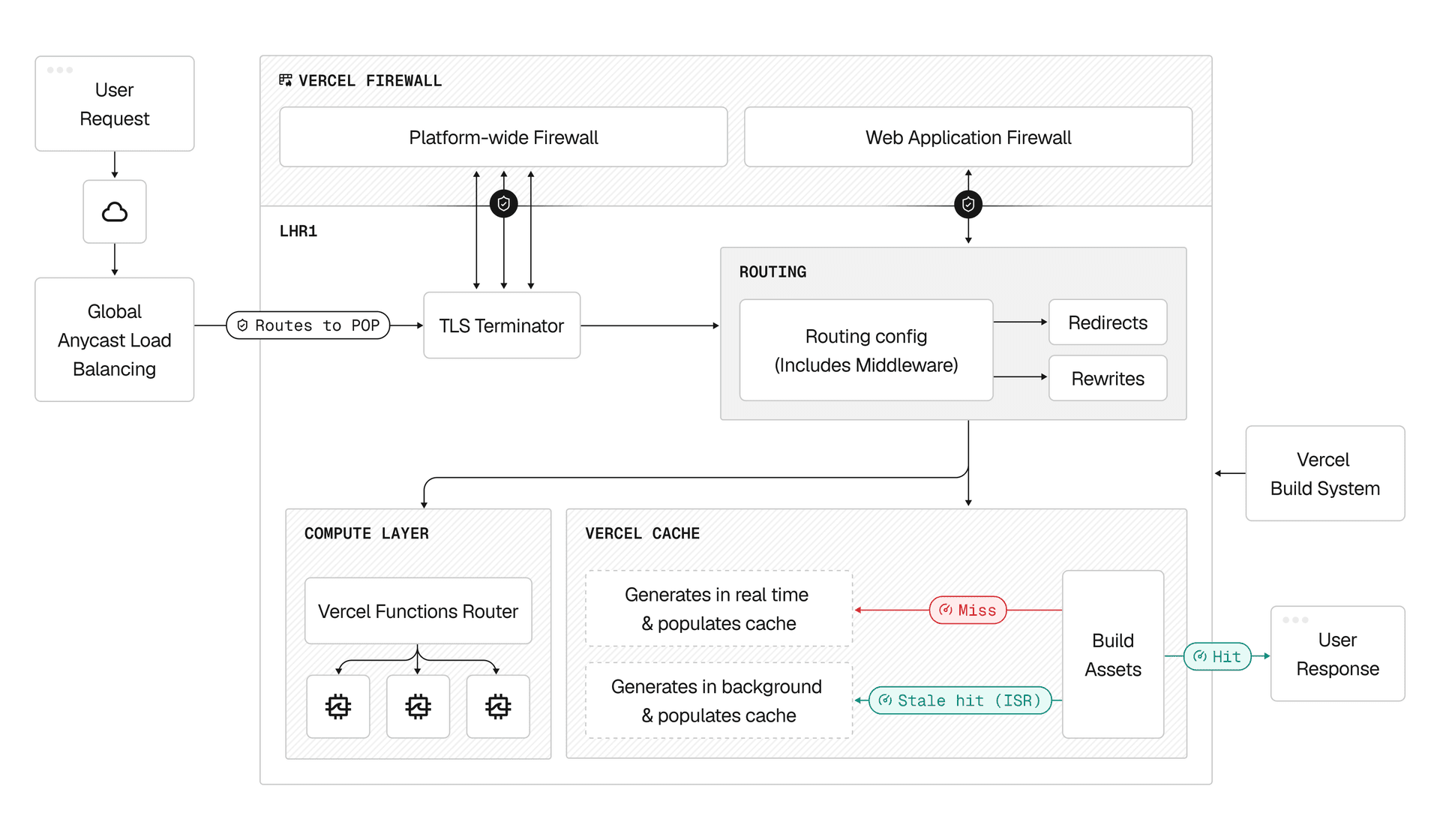

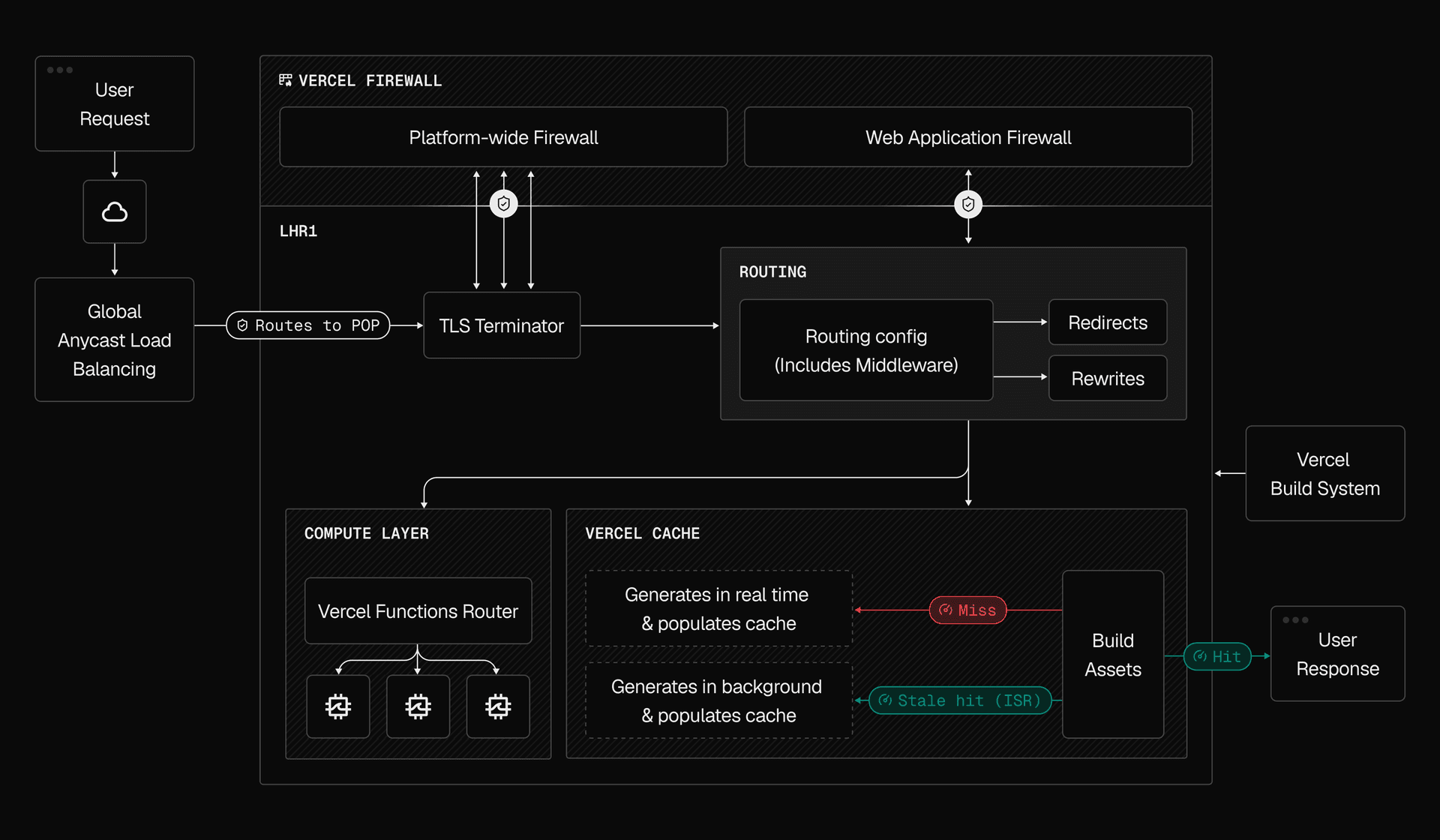

How requests flow through Vercel

When you deploy to Vercel, your code runs on a global network of servers. This network puts your application close to your users, reduces latency, and handles scaling automatically. This is part of Vercel's self-driving infrastructure: a system where you express intent, and the platform handles operations.

This guide explains what happens from the moment a user presses enter on their keyboard to when your application appears on their screen. For a deeper technical dive, see Life of a Vercel Request: What Happens When a User Presses Enter.

When a user requests your site, their browser performs a DNS lookup. For sites hosted on Vercel, this resolves to an anycast IP address owned by Vercel.

Vercel uses a global load balancer with anycast routing to direct the request to the optimal Point of Presence (PoP) across 100+ global locations. The routing decision considers:

- Number of network hops

- Round-trip time

- Available bandwidth
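One way to picture this decision is to score each candidate PoP on these three signals and pick the lowest score. The sketch below is hypothetical: the `PopMetrics` type, the weights, and the scoring formula are illustrative assumptions, not Vercel's load-balancing algorithm.

```typescript
// Hypothetical metrics for one candidate Point of Presence (PoP).
interface PopMetrics {
  id: string;
  hops: number;          // network hops from the client
  rttMs: number;         // round-trip time in milliseconds
  bandwidthMbps: number; // available bandwidth
}

// Illustrative weights (assumptions, not Vercel's values).
const WEIGHTS = { hops: 5, rttMs: 1, bandwidth: 100 };

// Lower score is better: penalize hops and latency, reward spare bandwidth.
function score(p: PopMetrics): number {
  return (
    WEIGHTS.hops * p.hops +
    WEIGHTS.rttMs * p.rttMs +
    WEIGHTS.bandwidth / Math.max(p.bandwidthMbps, 1)
  );
}

// Pick the candidate PoP with the lowest score.
function choosePop(candidates: PopMetrics[]): PopMetrics {
  if (candidates.length === 0) throw new Error("no candidate PoPs");
  return candidates.reduce((best, p) => (score(p) < score(best) ? p : best));
}

const pops: PopMetrics[] = [
  { id: "pop-a", hops: 6, rttMs: 18, bandwidthMbps: 800 },
  { id: "pop-b", hops: 4, rttMs: 12, bandwidthMbps: 400 },
];
console.log(choosePop(pops).id); // pop-b
```

Here `pop-b` wins because its shorter path and lower latency outweigh `pop-a`'s extra bandwidth under these made-up weights.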

Once the request reaches a PoP, it leaves the public internet and travels over a private fiber-optic backbone. Think of this as an "express lane" that reduces latency, jitter, and packet loss compared to the unpredictable public internet.

For more on how Vercel's network operates, see Life of a Vercel Request: Navigating the Network.

Before your application logic sees any request, the request passes through Vercel's integrated security layer. It encounters multiple stages of defense covering the network (layer 3), transport (layer 4), and application (layer 7) layers.

The global load balancer hands off raw TCP connections to the TLS terminator. This service performs the TLS handshake with the browser and decrypts HTTPS traffic into plain HTTP that Vercel's systems can process.

At any moment, the TLS terminator holds millions of concurrent connections to the internet. It:

- Decrypts HTTPS requests, offloading CPU-intensive cryptographic work from your application

- Manages connection pooling to handle slow clients without blocking resources

- Acts as an enforcer: if a request is flagged as malicious, this is where it gets blocked

Working in tandem with the TLS terminator is Vercel's always-on system DDoS mitigation. Unlike traditional firewalls that rely on static rules, this system analyzes the entire data stream in real time:

- Continuously maps relationships between traffic attributes (TLS fingerprints, User-Agent strings, IP reputation)

- Detects attack patterns, botnets, and DDoS attempts

- Pushes defensive signatures to the TLS terminator within seconds

- Blocks L3, L4, and L7 threats close to the source, before they reach your application
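The enforcement half of this loop can be sketched as a table of signatures that the TLS terminator checks on every request. This is a hypothetical model: the `Signature` and `RequestAttributes` shapes and the rule that every field set in a signature must match are assumptions for illustration, and the real-time analysis that produces signatures is not shown.

```typescript
// Hypothetical attributes the terminator can observe on each request.
interface RequestAttributes {
  tlsFingerprint: string;
  userAgent: string;
  ip: string;
}

// Hypothetical signature: every field that is set must match to block a request.
type Signature = Partial<RequestAttributes> & { id: string };

const SIGNATURE_FIELDS = ["tlsFingerprint", "userAgent", "ip"] as const;

class SignatureTable {
  private signatures: Signature[] = [];

  // Called when the mitigation system pushes a new signature.
  push(sig: Signature): void {
    this.signatures.push(sig);
  }

  // Returns the id of the first matching signature, or null if the request may pass.
  match(req: RequestAttributes): string | null {
    for (const sig of this.signatures) {
      const fields = SIGNATURE_FIELDS.filter((k) => sig[k] !== undefined);
      // A signature with no fields would match everything; skip it defensively.
      if (fields.length === 0) continue;
      if (fields.every((k) => sig[k] === req[k])) return sig.id;
    }
    return null;
  }
}

const table = new SignatureTable();
table.push({ id: "botnet-42", tlsFingerprint: "fp-abc", userAgent: "curl/8.0" });

const verdict = table.match({ tlsFingerprint: "fp-abc", userAgent: "curl/8.0", ip: "203.0.113.7" });
console.log(verdict ?? "allow"); // botnet-42
```

Matching on a combination of attributes, rather than a single IP, reflects the idea of mapping relationships between traffic attributes instead of relying on one static rule.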

This system runs across all deployments by default, delivering a P99 time-to-mitigation of 3.5 seconds for novel attacks.

For additional protection, you can configure the Web Application Firewall (WAF) with custom rules. The WAF lets you create granular rules for your specific application needs, while Vercel's system DDoS mitigation handles platform-wide threat detection automatically.

Vercel Firewall

Configure firewall rules to protect your applications.

DDoS Mitigation

Learn how Vercel protects against distributed denial-of-service attacks.

After passing security checks, the request enters the proxy. This is the decision engine of the Vercel network.

The proxy is application-aware. It consults a globally replicated metadata service that contains the configuration for every deployment. This metadata comes from your vercel.json or framework configuration file (like next.config.js).

Using this information, the proxy determines:

- Route type: Does this URL point to a static file or a dynamic function?

- Rewrites and redirects: Does the URL need modification before processing?

- Middleware: Does Routing Middleware need to run first for tasks like authentication or A/B testing?
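The three questions above can be pictured as one function over a deployment's metadata. This is a simplified, hypothetical sketch: the `DeploymentMetadata` shape, exact-path matching, and the order of checks (redirects, then middleware, then rewrites, then static vs. function) are assumptions, and real routing also supports patterns, headers, and conditions. The 308/307 status codes follow the permanent/temporary redirect convention.

```typescript
// Hypothetical, simplified view of the per-deployment metadata the proxy consults.
interface DeploymentMetadata {
  redirects: Record<string, { destination: string; permanent: boolean }>;
  rewrites: Record<string, string>;
  middlewarePrefixes: string[]; // paths where Routing Middleware should run first
  staticFiles: Set<string>;     // paths served from pre-built static assets
}

type RouteDecision =
  | { kind: "redirect"; location: string; status: 308 | 307 }
  | { kind: "static"; path: string; runMiddleware: boolean }
  | { kind: "function"; path: string; runMiddleware: boolean };

function route(pathname: string, meta: DeploymentMetadata): RouteDecision {
  // 1. Redirects short-circuit: the client is sent to another URL.
  const redirect = meta.redirects[pathname];
  if (redirect) {
    return { kind: "redirect", location: redirect.destination, status: redirect.permanent ? 308 : 307 };
  }
  // 2. Decide whether middleware runs for the requested path.
  const runMiddleware = meta.middlewarePrefixes.some((p) => pathname.startsWith(p));
  // 3. Rewrites change the destination without changing the URL the user sees.
  const target = meta.rewrites[pathname] ?? pathname;
  // 4. Static file or dynamic function?
  return meta.staticFiles.has(target)
    ? { kind: "static", path: target, runMiddleware }
    : { kind: "function", path: target, runMiddleware };
}

const meta: DeploymentMetadata = {
  redirects: { "/old-blog": { destination: "/blog", permanent: true } },
  rewrites: { "/docs": "/docs/index.html" },
  middlewarePrefixes: ["/dashboard"],
  staticFiles: new Set(["/docs/index.html"]),
};

console.log(route("/old-blog", meta));           // redirect to /blog with status 308
console.log(route("/docs", meta));               // static asset /docs/index.html
console.log(route("/dashboard/settings", meta)); // function, with middleware first
```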

For a detailed look at how routing decisions work, see Life of a Request: Application-Aware Routing.

Routing Middleware

Run code before a request is completed for authentication, A/B testing, and more.

Redirects

Redirect incoming requests to different URLs.

Rewrites

Map incoming requests to different destinations without changing the URL.

Most applications serve a mix of static and dynamic content. For static assets, pre-rendered pages, and cacheable responses, the proxy checks the Vercel Cache.

| Cache Status | What Happens |
|---|---|
| Hit | Content returns immediately to the user from the PoP closest to them |
| Miss | Content generates in real time and populates the cache for future requests |
| Stale hit (ISR) | Stale content serves instantly while a background process regenerates fresh content |

With Incremental Static Regeneration (ISR), you can serve cached content instantly while keeping it fresh. The cache serves the existing version to your user and triggers regeneration in the background for the next visitor.
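The three cache states can be modeled with a small in-memory cache that follows the stale-while-revalidate pattern. This is a sketch under assumptions: the `IsrCache` class, the revalidation window, the injected clock, and the `regenerate` callback are hypothetical, and a real edge cache is distributed across PoPs rather than held in a single `Map`.

```typescript
type CacheStatus = "HIT" | "MISS" | "STALE";

interface Entry {
  body: string;
  generatedAt: number; // ms timestamp when the content was generated
}

// Hypothetical single-process model of ISR-style caching.
class IsrCache {
  private entries = new Map<string, Entry>();
  private inFlight = new Set<string>(); // keys currently regenerating
  private readonly revalidateMs: number;
  private readonly regenerate: (key: string) => Promise<string>;
  private readonly now: () => number;

  constructor(
    revalidateSeconds: number,
    regenerate: (key: string) => Promise<string>,
    now: () => number = Date.now,
  ) {
    this.revalidateMs = revalidateSeconds * 1000;
    this.regenerate = regenerate;
    this.now = now;
  }

  async get(key: string): Promise<{ status: CacheStatus; body: string }> {
    const entry = this.entries.get(key);

    // Miss: generate in real time and populate the cache.
    if (!entry) {
      const body = await this.regenerate(key);
      this.entries.set(key, { body, generatedAt: this.now() });
      return { status: "MISS", body };
    }

    // Hit: the cached content is still inside its revalidation window.
    if (this.now() - entry.generatedAt < this.revalidateMs) {
      return { status: "HIT", body: entry.body };
    }

    // Stale hit: serve the old content now, regenerate in the background.
    if (!this.inFlight.has(key)) {
      this.inFlight.add(key);
      this.regenerate(key)
        .then((body) => {
          this.entries.set(key, { body, generatedAt: this.now() });
        })
        .catch(() => {
          // Keep serving the stale content if regeneration fails.
        })
        .finally(() => this.inFlight.delete(key));
    }
    return { status: "STALE", body: entry.body };
  }
}
```

With a 60-second window, a request 30 seconds after generation is a hit, a request at 61 seconds is served stale while `regenerate` runs, and the next request after regeneration finishes sees the new content as a hit. The `inFlight` set keeps concurrent stale hits from triggering duplicate regenerations.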

Caching

Understand how Vercel's edge cache accelerates your applications.

Incremental Static Regeneration

Update static content without rebuilding your entire site.

Cache Headers

Control caching behavior with HTTP headers.
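Whether a response can be stored at the edge depends on its HTTP cache headers. The function below is a deliberately simplified sketch: it assumes a response is cacheable only when `Cache-Control` has a positive `s-maxage` and neither `private` nor `no-store`. Vercel's actual rules recognize more directives and headers, so treat `edgeCacheTtl` (a hypothetical helper) as an illustration rather than the exact behavior.

```typescript
// Hypothetical helper: returns the shared-cache TTL in seconds, or 0 if the
// response should not be cached. Simplified assumption: only s-maxage,
// private, and no-store are considered.
function edgeCacheTtl(cacheControl: string | null): number {
  if (!cacheControl) return 0;
  const directives = cacheControl
    .split(",")
    .map((d) => d.trim().toLowerCase())
    .filter((d) => d.length > 0);

  // Responses marked private or no-store must not go into a shared cache.
  if (directives.includes("private") || directives.includes("no-store")) return 0;

  for (const d of directives) {
    const [name, value] = d.split("=");
    if (name.trim() === "s-maxage" && value !== undefined) {
      const seconds = Number.parseInt(value.trim(), 10);
      return Number.isFinite(seconds) && seconds > 0 ? seconds : 0;
    }
  }
  return 0;
}

console.log(edgeCacheTtl("public, s-maxage=60, stale-while-revalidate=300")); // 60
console.log(edgeCacheTtl("private, s-maxage=60"));                           // 0
console.log(edgeCacheTtl("max-age=0, must-revalidate"));                     // 0
```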

When a request requires dynamic data, personalization, or server-side logic, the proxy forwards it to the Compute Layer.

The request flow works like this:

- Functions router: The Vercel Functions router receives the request and manages concurrency. Even during massive traffic spikes, the router queues and shapes traffic to prevent failures.

- Compute instance: A compute instance executes your code. With Fluid compute, a single instance can handle multiple concurrent requests efficiently.

- Response loop: The compute instance generates HTML or JSON and sends it back through the proxy. If your response headers allow caching, the proxy stores the response for future requests.
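Queueing at the router and per-instance concurrency can both be pictured as a semaphore: up to N requests run at once, and the rest wait in a FIFO queue instead of failing. This is a hypothetical sketch; the `ConcurrencyLimiter` class and the limit value are illustrative and say nothing about how Vercel's router or Fluid compute is actually implemented.

```typescript
// Hypothetical FIFO semaphore: at most `limit` tasks run at once; the rest queue.
class ConcurrencyLimiter {
  private active = 0;
  private waiters: Array<() => void> = [];
  private readonly limit: number;

  constructor(limit: number) {
    if (limit < 1) throw new Error("limit must be at least 1");
    this.limit = limit;
  }

  get running(): number {
    return this.active;
  }

  get queued(): number {
    return this.waiters.length;
  }

  // Resolves once a slot is available.
  private acquire(): Promise<void> {
    if (this.active < this.limit) {
      this.active++;
      return Promise.resolve();
    }
    return new Promise<void>((resolve) => {
      this.waiters.push(() => resolve());
    });
  }

  // Hands the slot directly to the next waiter, or frees it if nobody waits.
  private release(): void {
    const next = this.waiters.shift();
    if (next) next(); // slot passes to the waiter; `active` stays the same
    else this.active--;
  }

  // Runs `task` once a slot is free and always releases the slot afterwards.
  async run<T>(task: () => Promise<T>): Promise<T> {
    await this.acquire();
    try {
      return await task();
    } finally {
      this.release();
    }
  }
}
```

Because a request beyond the limit is parked rather than rejected, a burst larger than the limit slows down instead of failing. A larger `limit` models an instance that accepts more concurrent requests.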

Vercel Functions

Run server-side code without managing infrastructure.

Fluid Compute

Scale compute resources automatically based on demand.

What is Compute?

Understand the fundamentals of compute on Vercel.

Everything described above depends on artifacts created during deployment.

When you push code, Vercel's build infrastructure:

- Detects your framework

- Runs your build command

- Separates output into static assets (sent to the cache) and compute artifacts (sent to the function store)

- Compiles your configuration into the metadata that powers the proxy
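The split between the cache and the function store can be sketched as a pass over the build output. The directory layout here is loosely modeled on Vercel's Build Output API (`static/` for assets, `functions/<path>.func` for functions), but `partitionOutput`, `compileMetadata`, and the flat path-to-kind map are hypothetical simplifications; the real metadata also carries routing configuration such as rewrites and redirects.

```typescript
// Hypothetical result of splitting build output into its two destinations.
interface Artifacts {
  staticAssets: string[]; // destined for the cache
  functions: string[];    // destined for the function store
}

// Paths are relative to the output directory, e.g. "static/logo.png"
// or "functions/api/hello.func".
function partitionOutput(paths: string[]): Artifacts {
  const result: Artifacts = { staticAssets: [], functions: [] };
  for (const p of paths) {
    if (p.startsWith("static/")) {
      result.staticAssets.push("/" + p.slice("static/".length));
    } else if (p.startsWith("functions/") && p.endsWith(".func")) {
      // The route path is the directory name without the ".func" suffix.
      result.functions.push("/" + p.slice("functions/".length, -".func".length));
    }
    // Anything else (for example, config files) is handled separately.
  }
  return result;
}

// Hypothetical stand-in for the proxy metadata: which paths are static,
// and which map to a function.
function compileMetadata(a: Artifacts): Record<string, "static" | "function"> {
  const routes: Record<string, "static" | "function"> = {};
  for (const s of a.staticAssets) routes[s] = "static";
  for (const f of a.functions) routes[f] = "function";
  return routes;
}

const artifacts = partitionOutput([
  "static/index.html",
  "static/logo.png",
  "functions/api/hello.func",
  "config.json",
]);
// Maps /index.html and /logo.png to "static" and /api/hello to "function".
console.log(compileMetadata(artifacts));
```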

For more on what happens during deployment, see Behind the Scenes of Vercel's Infrastructure.
