5 min read

Unpacking the core usage metrics of Edge Requests and Fast Data Transfer

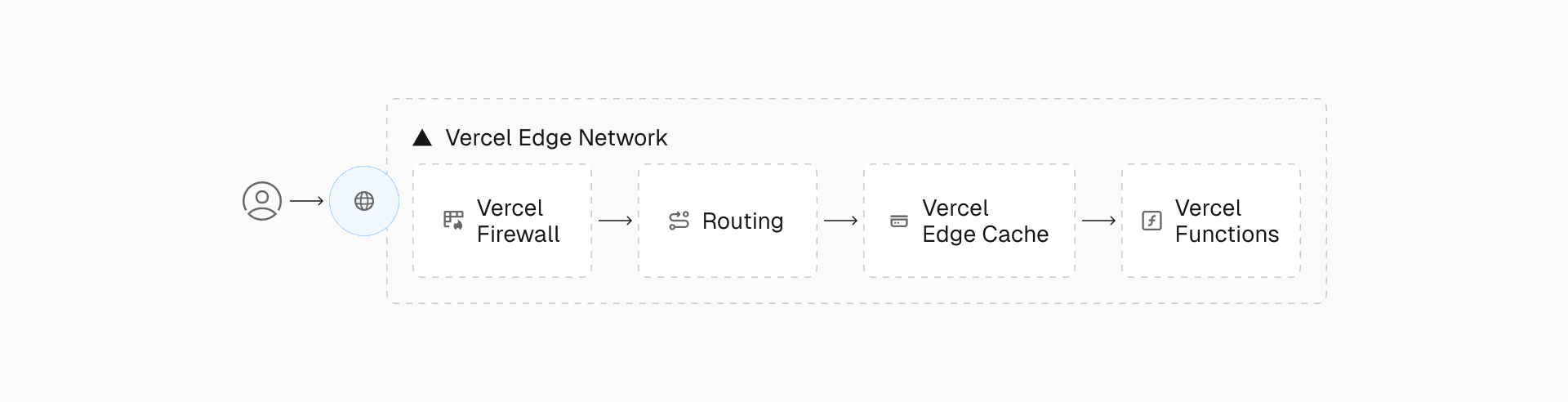

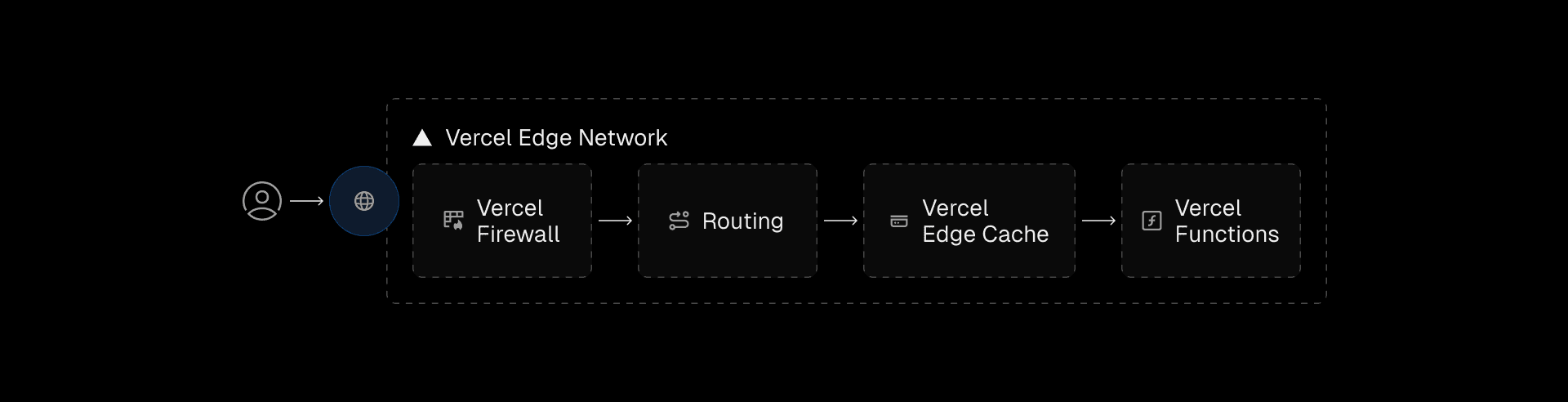

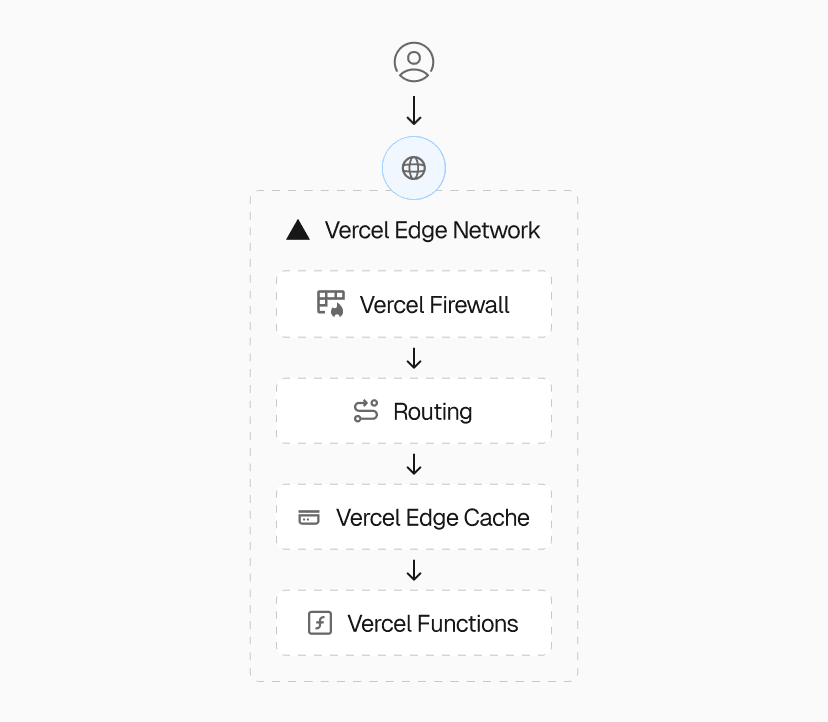

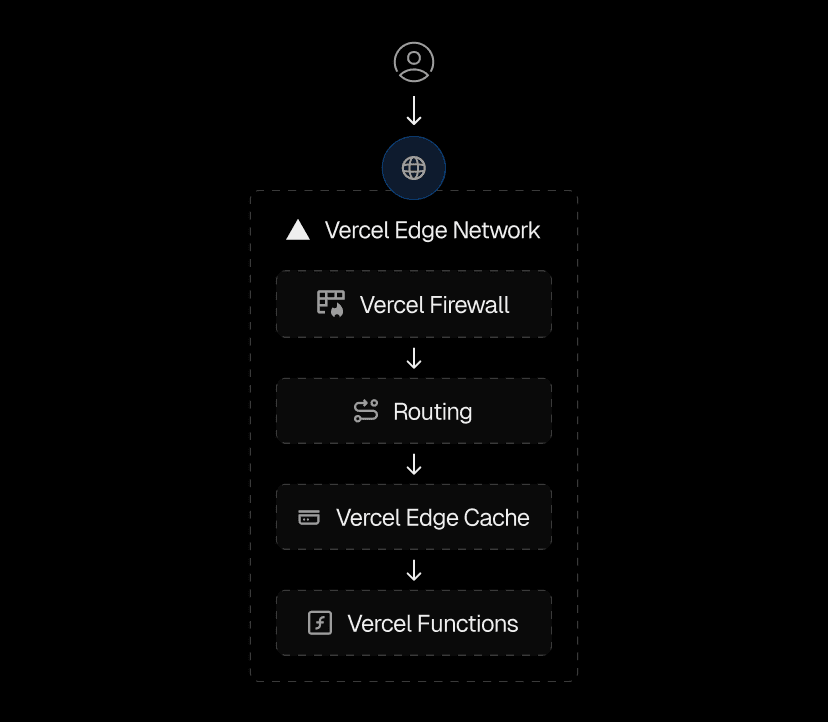

Vercel’s framework-defined infrastructure provisions cloud resources while providing full transparency, from the initial build to every incoming request. Developers can track how static assets are distributed globally, functions handle ISR revalidation, and resources manage routing, server-side rendering, and more.

As users visit your app, granular metrics reveal which resources were leveraged to serve their request. This series unpacks the Vercel Edge Network and associated resource allocation, exploring each stage of a request, and how Vercel streamlines the process.

With a clear understanding of these metrics and optimization strategies, you can deliver better user experiences while improving resource consumption and reducing costs.

Catch up: Read Part I

Life of a Vercel request: What happens when a user presses enter

Read now

Link to headingPoints of Presence (PoPs) and a local first-contact with Vercel's network

In our first post, we showed how each of Vercel's Edge Network components are pieced together to power the full request lifecycle. Now, let’s look closer at the start of that journey.

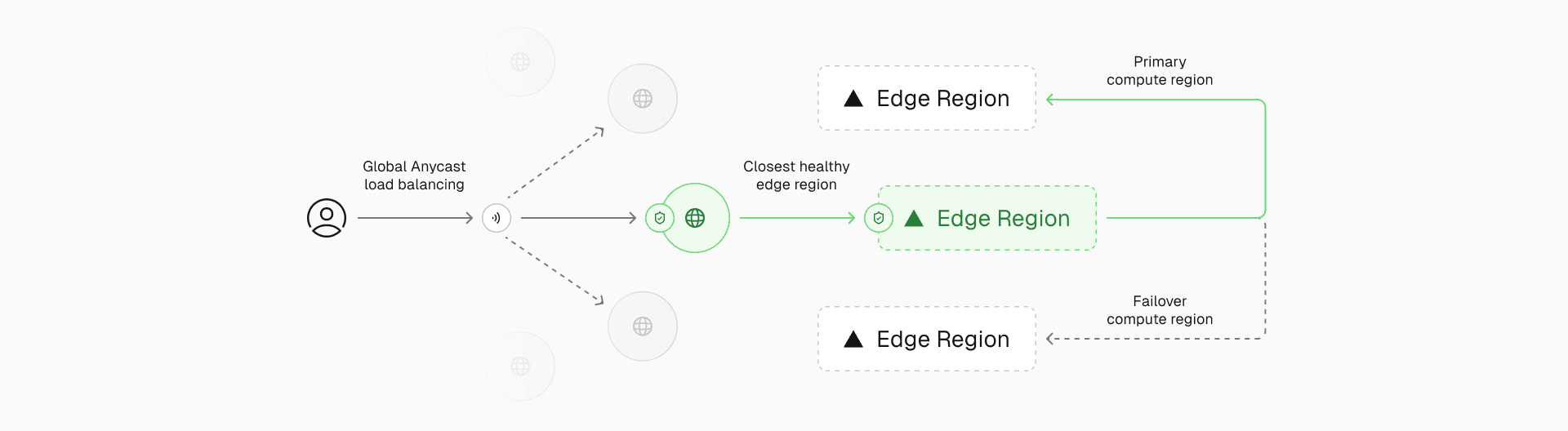

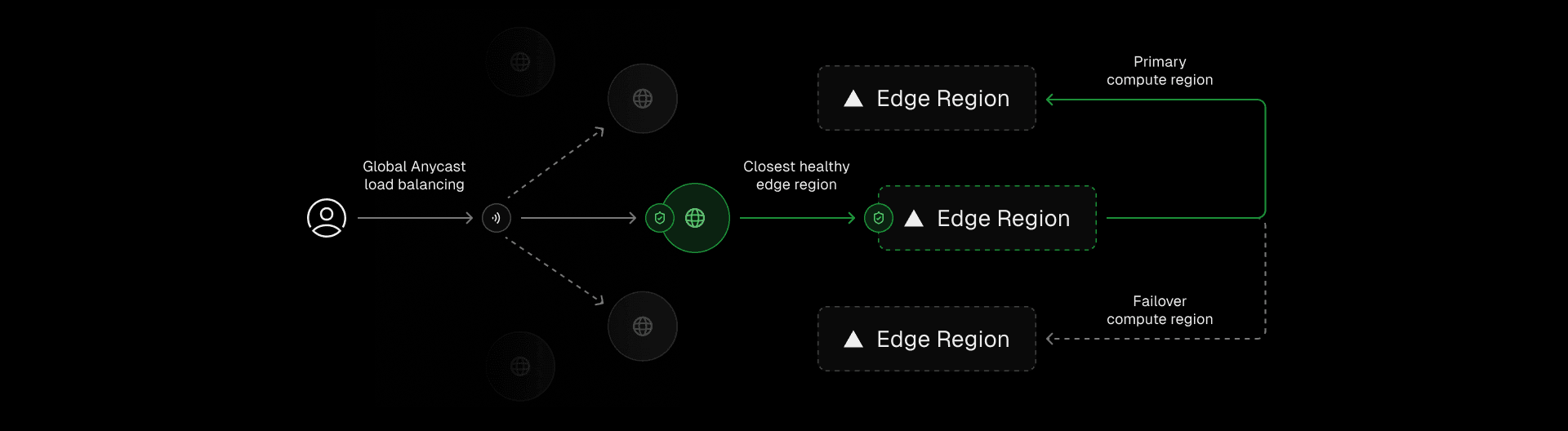

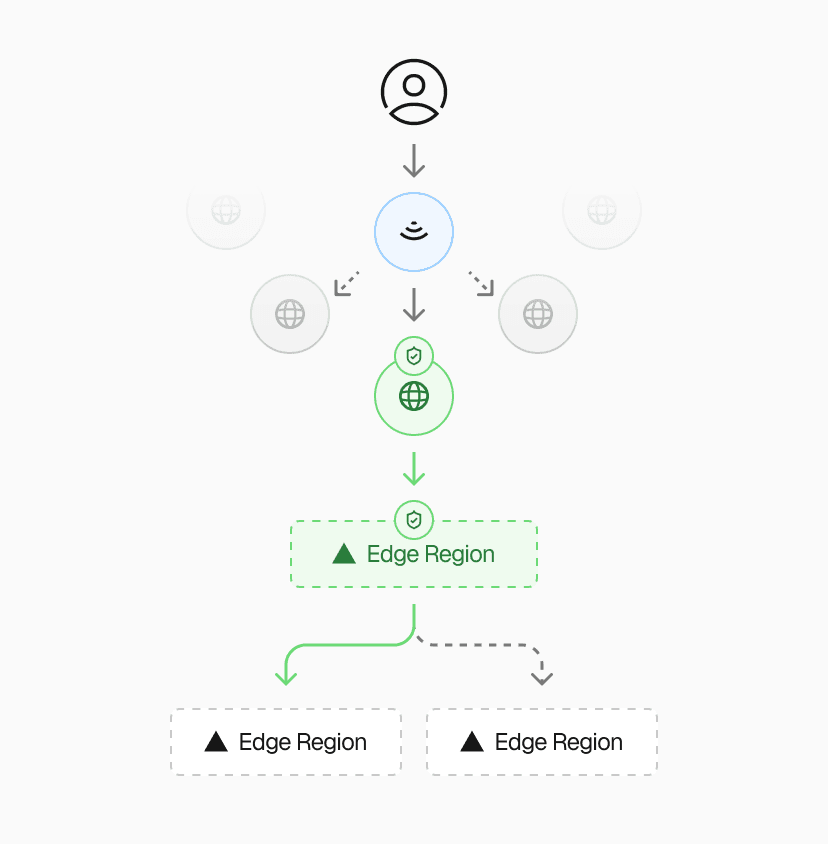

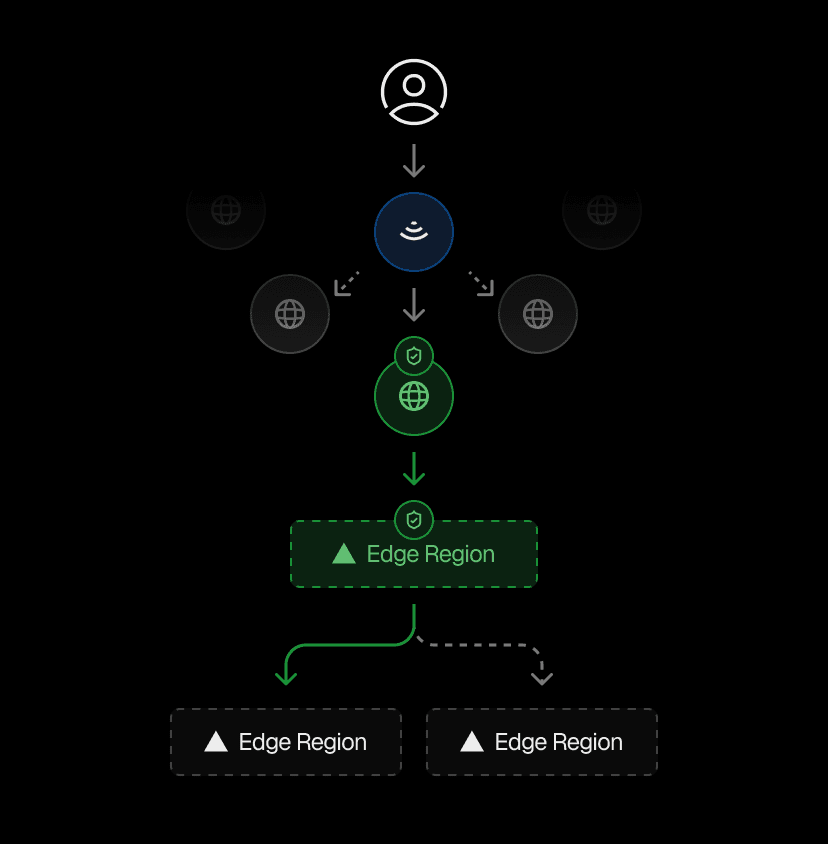

The Vercel Edge Network continuously monitors the health of all networking endpoints to route connections to the most efficient destinations. Leveraging Anycast routing—and real-time awareness of health, speed, and user proximity—it selects the best Point of Presence (PoP) for each request.

After passing initial evaluation and security checks by the Vercel Firewall, the PoP links the request to one of 18 globally distributed Edge Regions. Throughout the request lifecycle, Vercel’s network dynamically adapts, rerouting traffic to redundant or failover systems when necessary to maintain uninterrupted service.

Let's keep zooming in, starting with the foundations of how Vercel transforms every request into Edge Requests, optimized for rapid delivery via Fast Data Transfer.

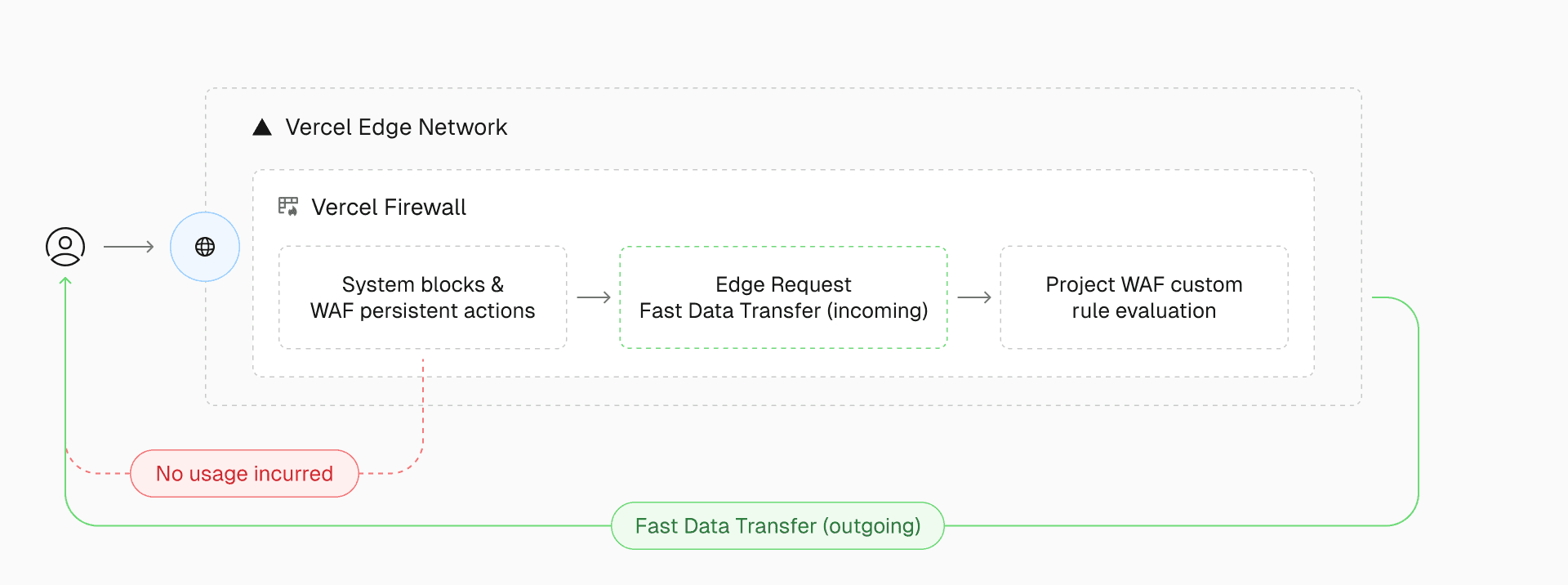

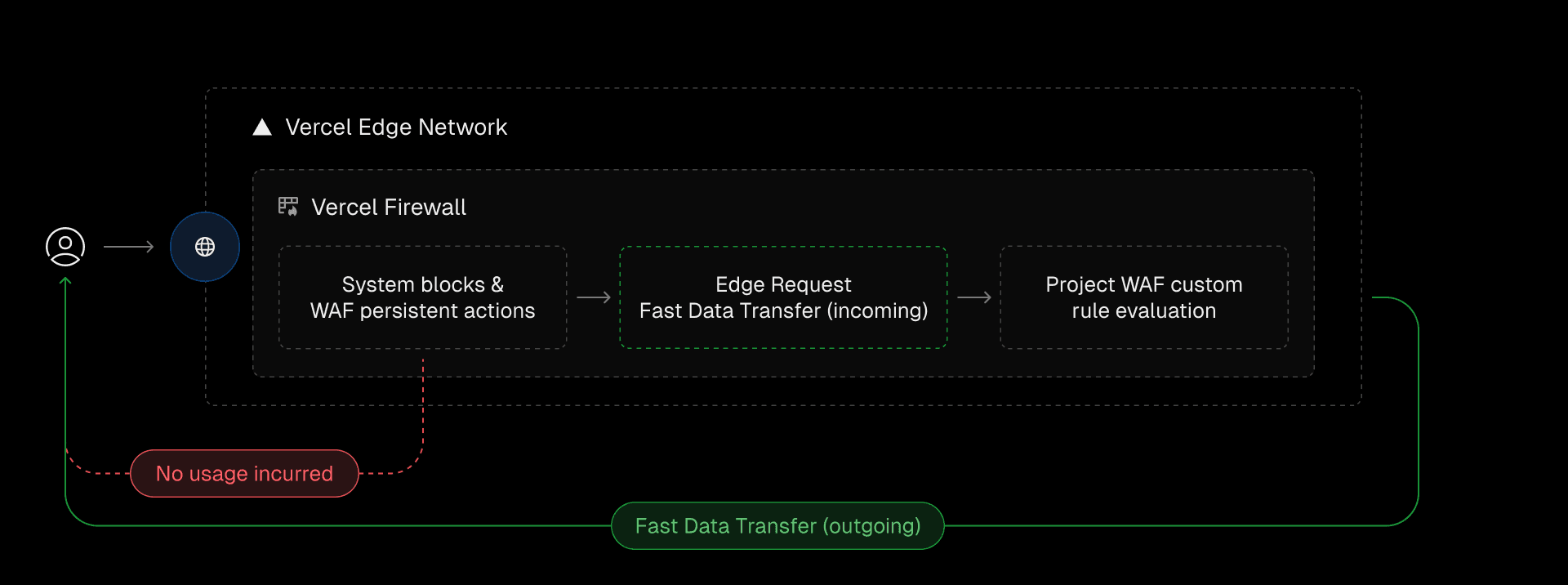

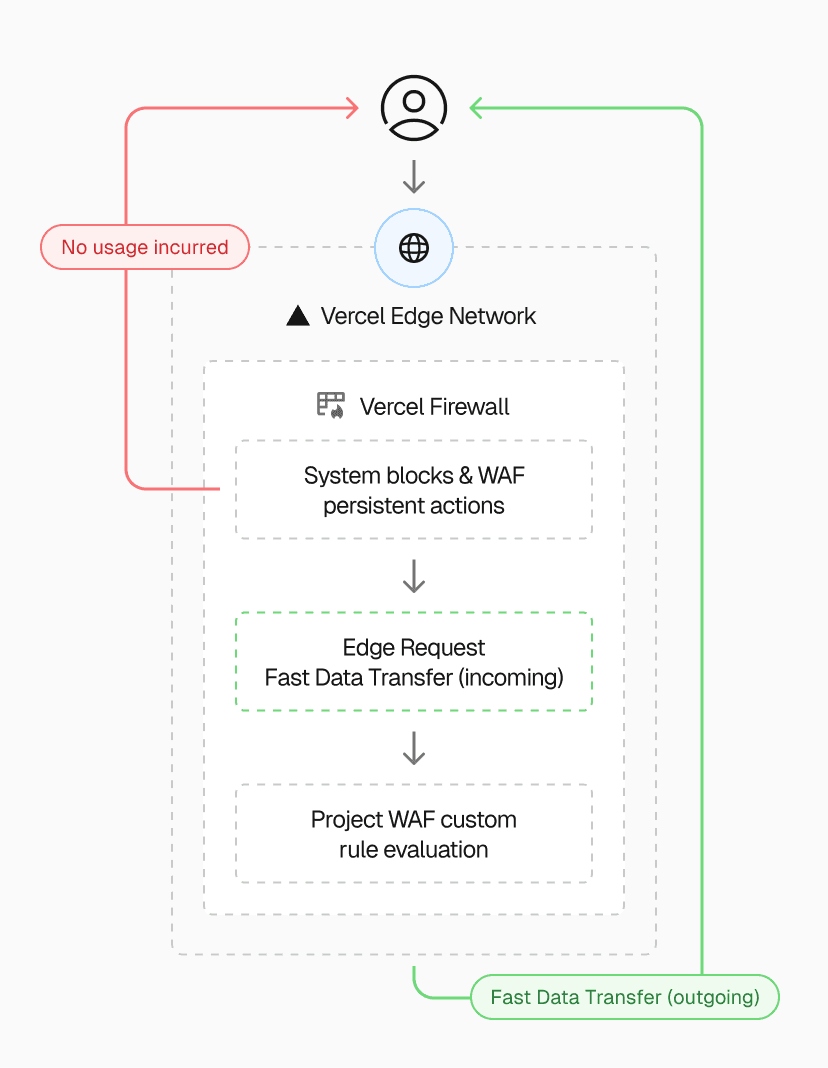

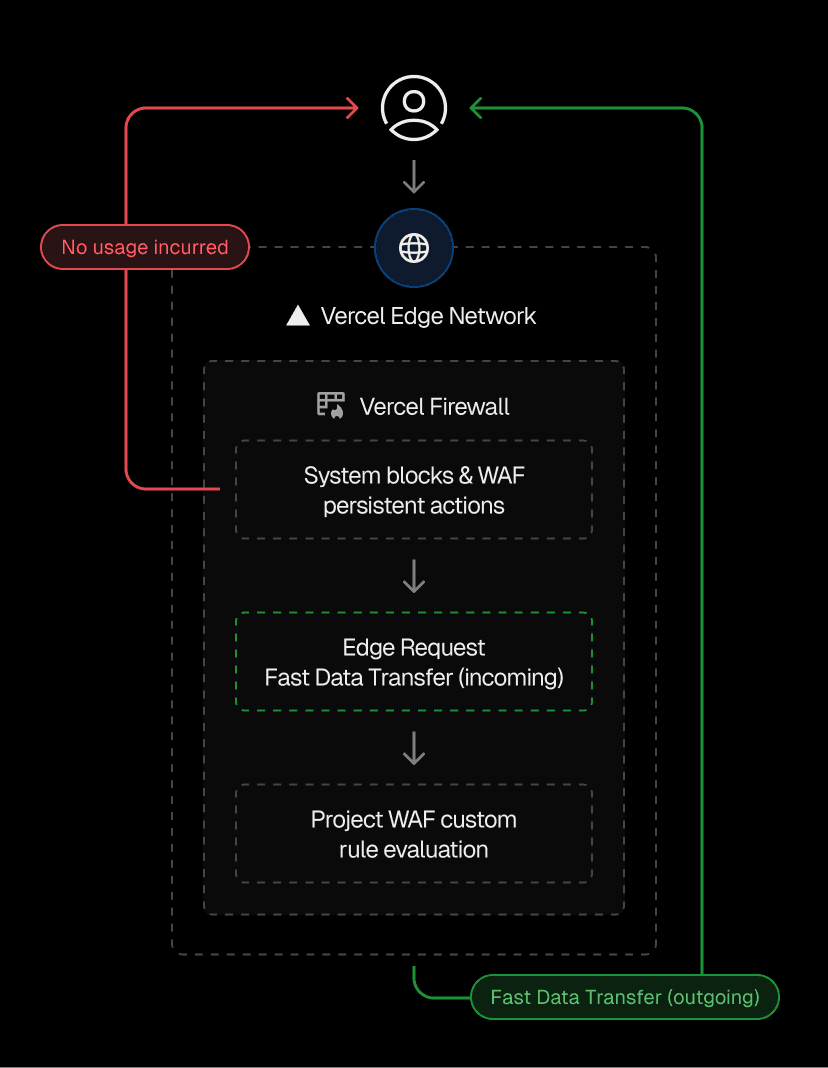

Link to headingWhen does a request become an Edge Request?

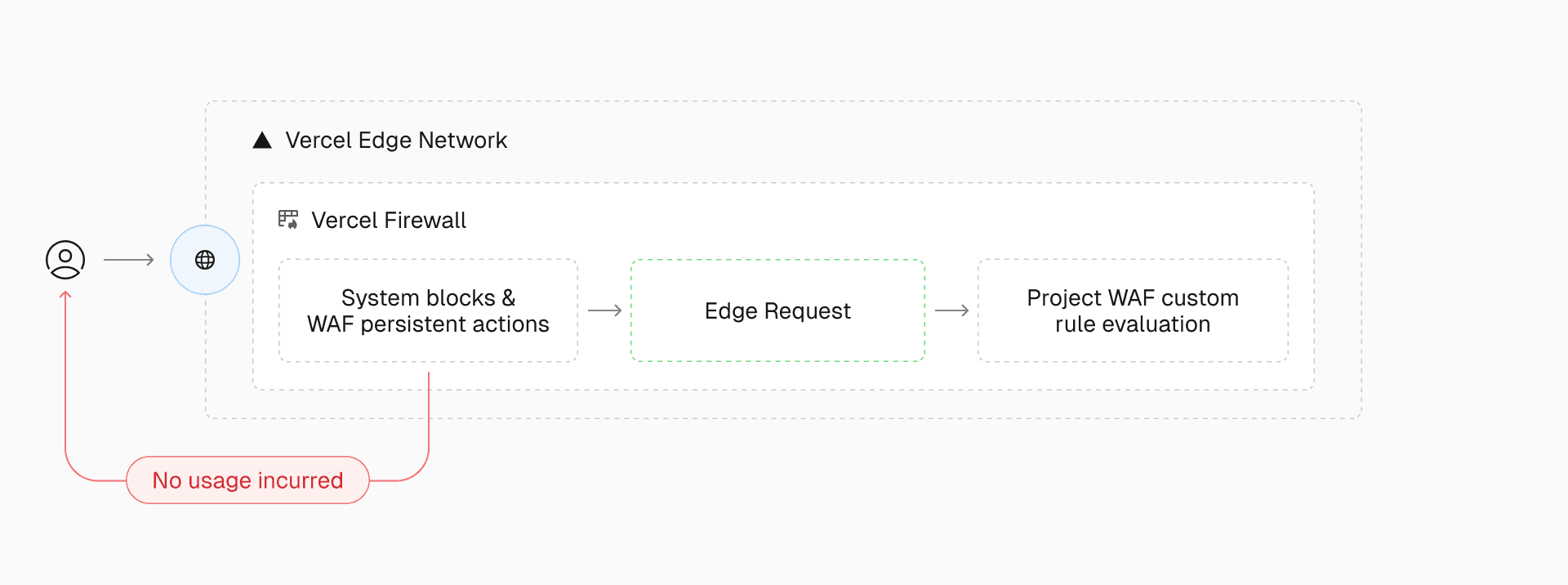

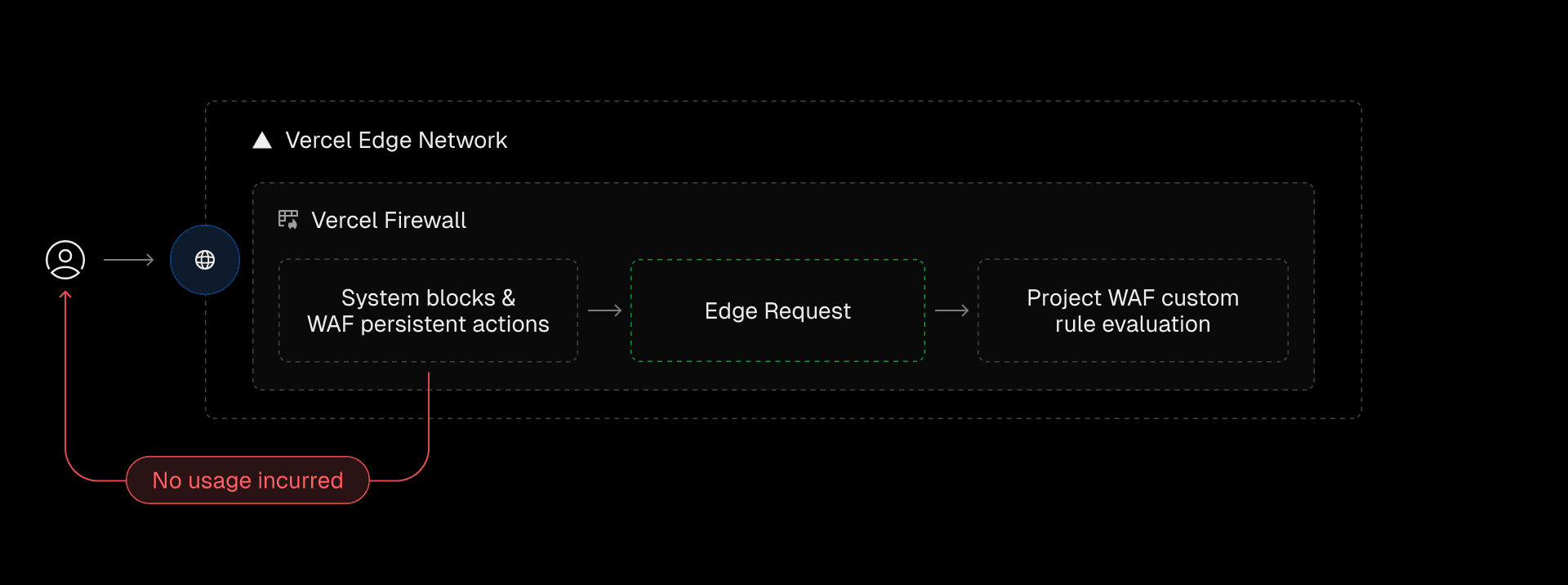

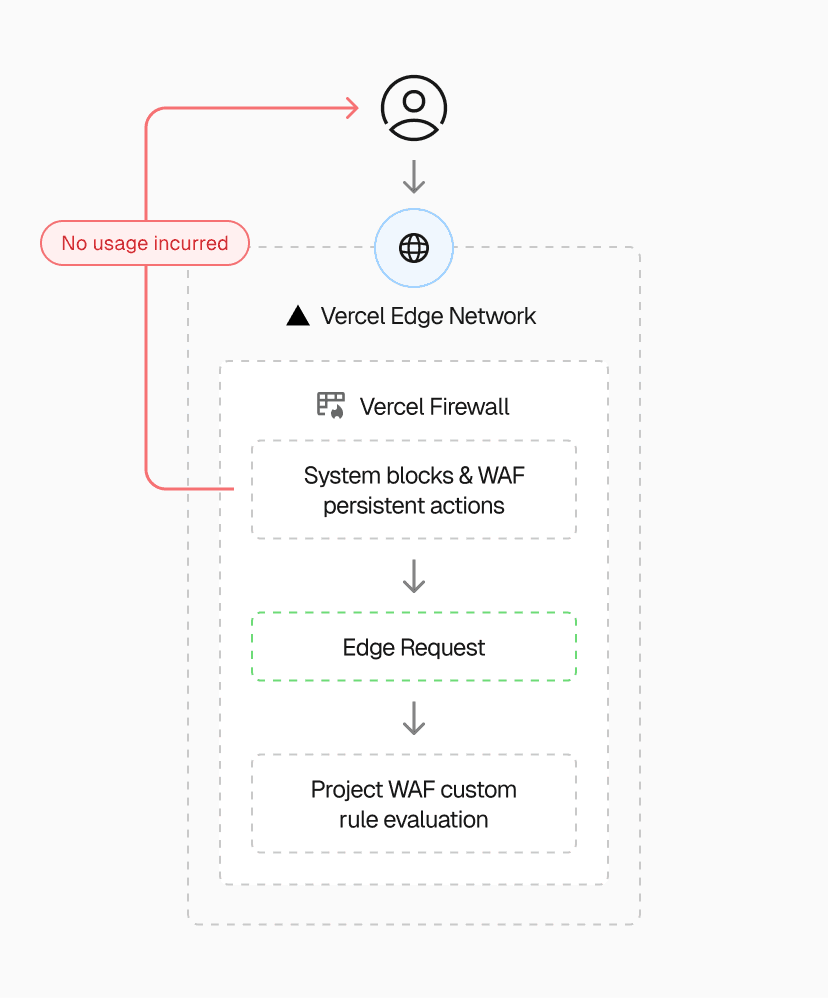

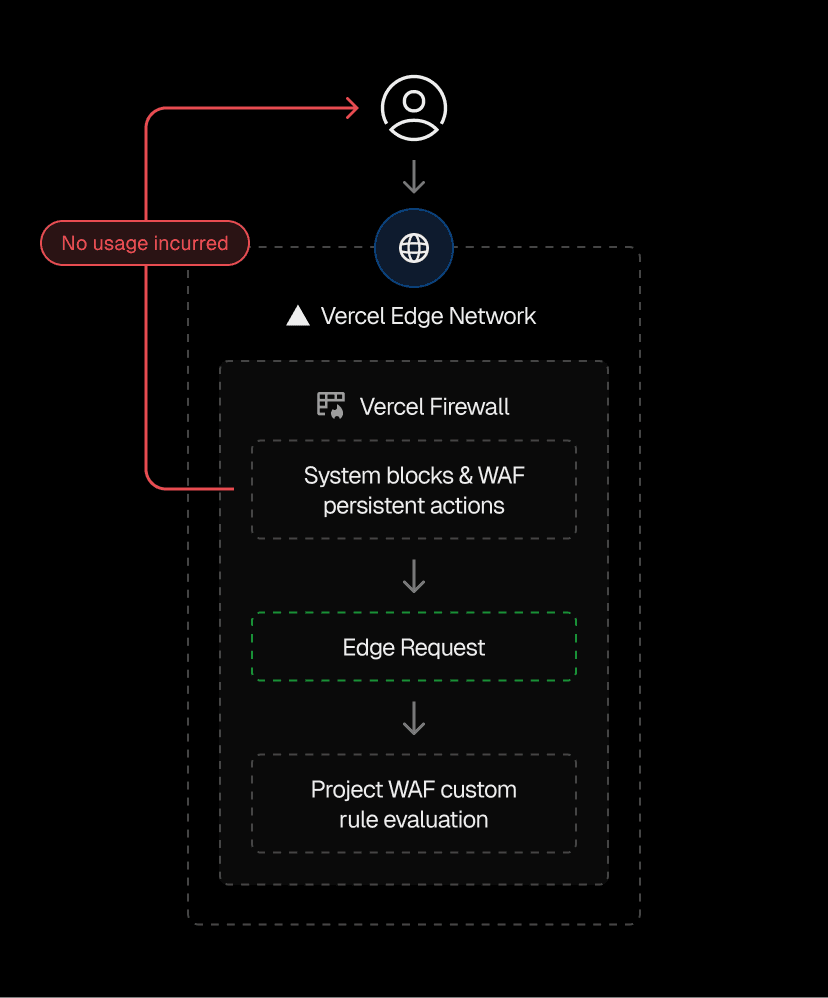

Requests to the Vercel Edge Network are filtered by platform defenses and already-active Web Application Firewall (WAF) actions before becoming "Edge Requests," optimized for ultra-low latency and high-speed delivery.

Every Edge Request has a clear purpose—fetching data or triggering an event. Its specifics depend on the payload, route, application, deployment, and client details like cookies, user agents, or TLS fingerprints. These attributes shape how the request is processed, its bandwidth usage, and the client’s next steps after a response is delivered.

Vercel enriches requests with contextual data—like geolocation, secure environment variables, and deployment or branch details—without adding latency. By leveraging request and application awareness, this data integrates into Edge Middleware and Vercel Functions, allowing you to make even more informed decisions in real-time.

Usage:

Every Edge Request processes in real-time—from static assets, to dynamic API routes, and server-side functions

Requests blocked by Vercel Firewall or WAF persistent actions are excluded from this count

Why it matters:

Tracking Edge Requests provides insight into application demand and behavior

Every request uses network resources, so optimizing request frequency and type can boost performance and cut expenses

Seeing Edge Requests in action:

In your project’s usage tab, you’ll find one-to-one tracking of each Edge Request

Use “Inspect Element” in your browser to observe Edge Requests

Optimization:

When a page loads, the initial request retrieves the HTML, with additional requests fetching assets and styles

While frameworks optimize requests, some features can increase their frequency

Edge Requests can be optimized by reducing unnecessary calls through lazy loading, adjusting polling frequency, and batching related API calls to reduce overhead

Vercel’s analytics and developer tools can be used to monitor patterns and identify optimization opportunities

Edge Requests offer flexibility, adapting to your framework and architecture. By optimizing re-renders, data-fetching, real-time features, and client-side routing, you can streamline request volume and enhance application efficiency.

Link to headingFast Data Transfer (FDT): Powering rapid data movement to and from Vercel

A key element of the Vercel Edge Network, Fast Data Transfer (FDT) ensures rapid data movement between the visitor’s browser and edge regions. Supported by a vast network of PoPs and private fiber connections, Vercel bypasses typical public internet bottlenecks to deliver secure, high-speed data.

FDT refers to the low-latency movement of data between users and the Vercel Edge Network. By reducing Time to First Byte (TTFB) and Largest Contentful Paint (LCP), FDT minimizes latency and enhances the user experience.

Usage:

FDT is measured by the volume of data transferred during a request, covering both the inbound data (user to Vercel) and outbound data (Vercel to user)

Higher data volumes impact both performance and usage costs

Why it matters:

Fast, efficient data transfer is vital for core web vitals, improving load times and user experience

By sending only essential data, developers can manage both performance and costs, ensuring that users encounter minimal wait times and smoother interactions

Seeing Fast Data Transfer in action:

Request and response headers in your browser’s devtools can show FDT in action, detailing the data transferred

Optimization:

FDT can be optimized by how you handle requests and the data volume sent with each call, like API requests

Many frameworks support optimizations such as code-splitting, lazy loading, and tree-shaking to help reduce payload size

For images, Vercel's Image Optimization can significantly reduce the sizes of images served with Vercel

Vercel will automatically compress assets such as images, JavaScript, and CSS, optimizing them for faster delivery. This reduces the overall data size, minimizing Fast Data Transfer, which in turn improves page load times and performance.

Link to headingInspecting requests: A behind the scenes look

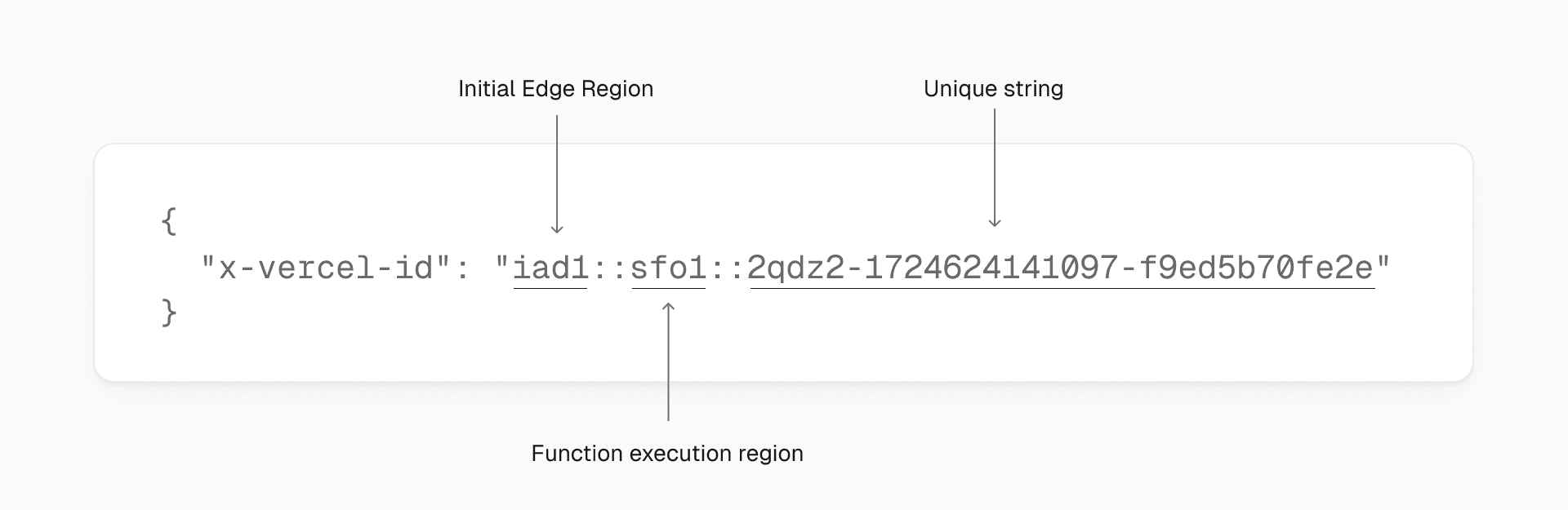

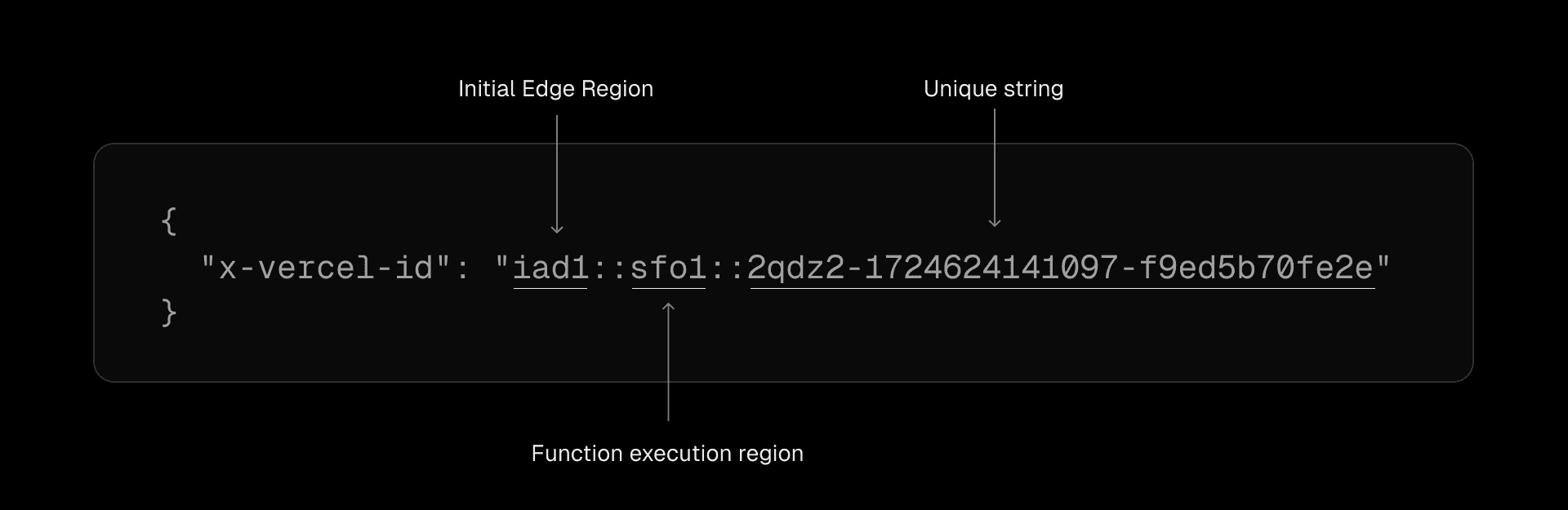

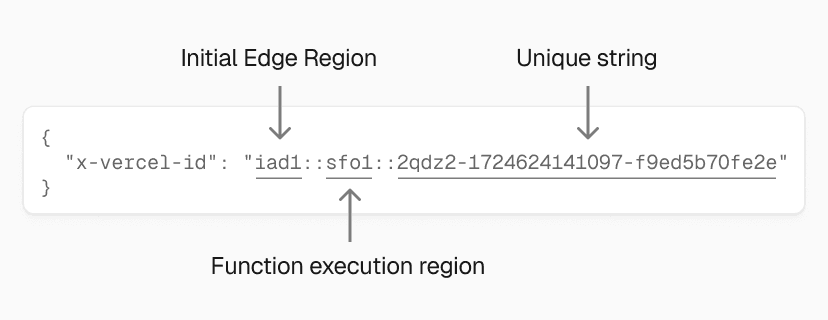

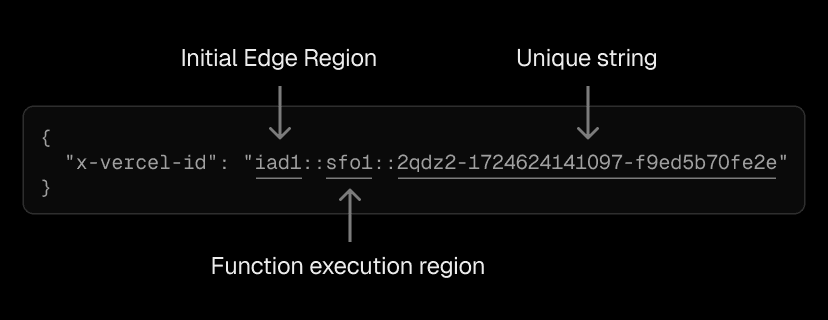

To see how your requests flow through the Vercel Edge Network, you can check the x-vercel-id header in your HTTP responses. This header reveals the edge region that handled the request and the region where any functions (Edge or serverless) were executed.

The structure of the Vercel ID acts as a globally unique identifier, useful for debugging and understanding server-side routing details, starting from the client initiating the request.

Link to headingPractical Example

Suppose you’re in New York City. Your request might route to the nearest edge region, iad1 (Washington, DC), while the function executes in sfo1 (San Francisco, CA) due to its proximity to your backend origin. The x-vercel-id might look like this:

Points of Presence (PoPs) are not included in the x-vercel-id header.

Link to headingOptimizing Vercel Edge Network usage

Every request to a Vercel app—from the moment a user presses enter to the final response—adapts to the user’s intent and your framework’s code. Whether it’s routing, rendering, or caching, every step reflects decisions shaped by user parameters and your application logic. As you push code, Vercel builds the application and allocates cloud resources, activating components exactly when needed for optimal delivery.

Maximize your app’s performance by tracking Edge Requests and Fast Data Transfer. With resources allocated based on your framework, you have full control to ensure your application is efficient and cost-effective.