Observability

The AI Gateway records spend, model usage, and other observability metrics for your requests. You can use this data to monitor usage and debug issues.

You can view these details in the AI Gateway Overview section in your Vercel dashboard sidebar:

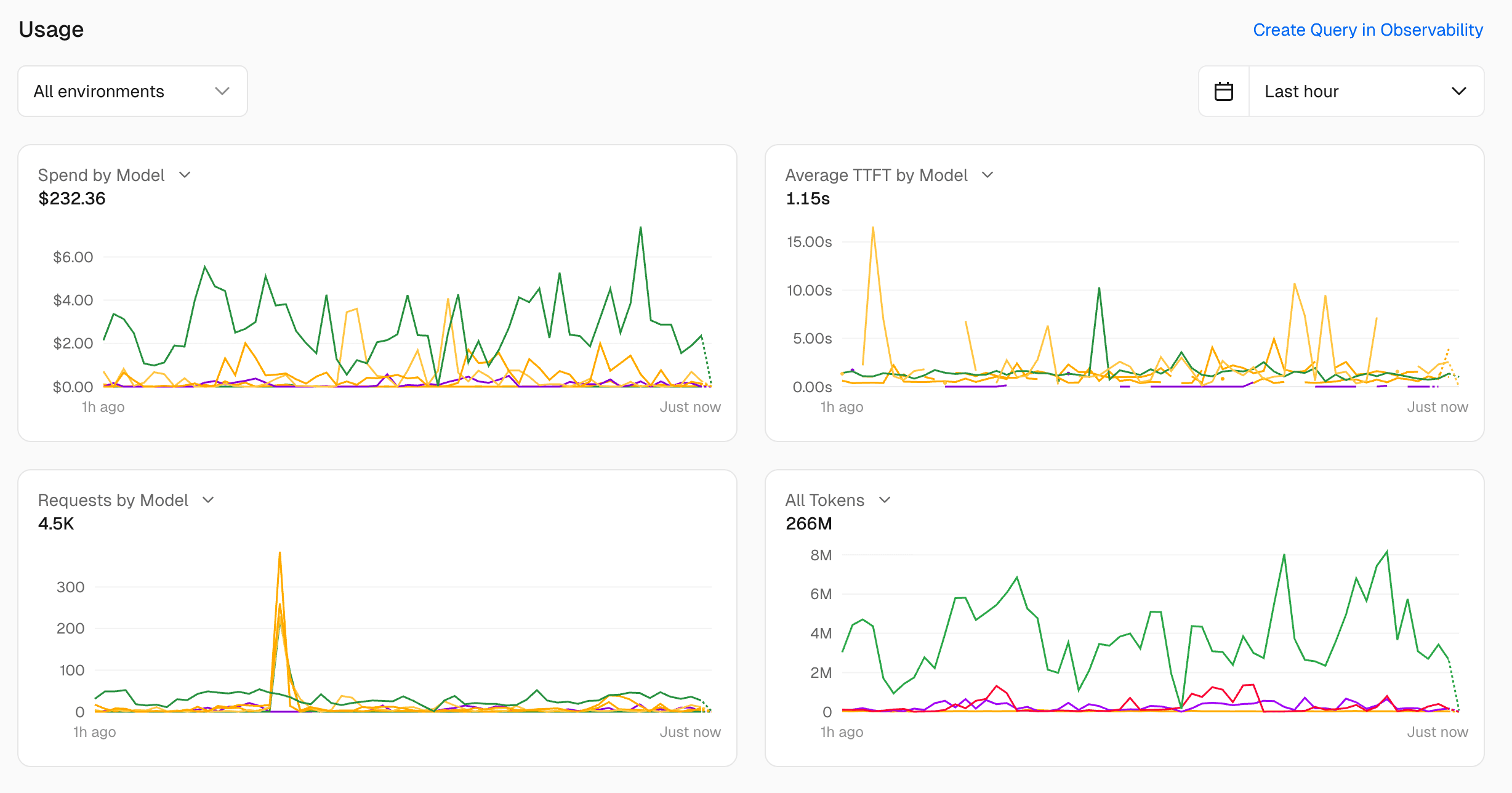

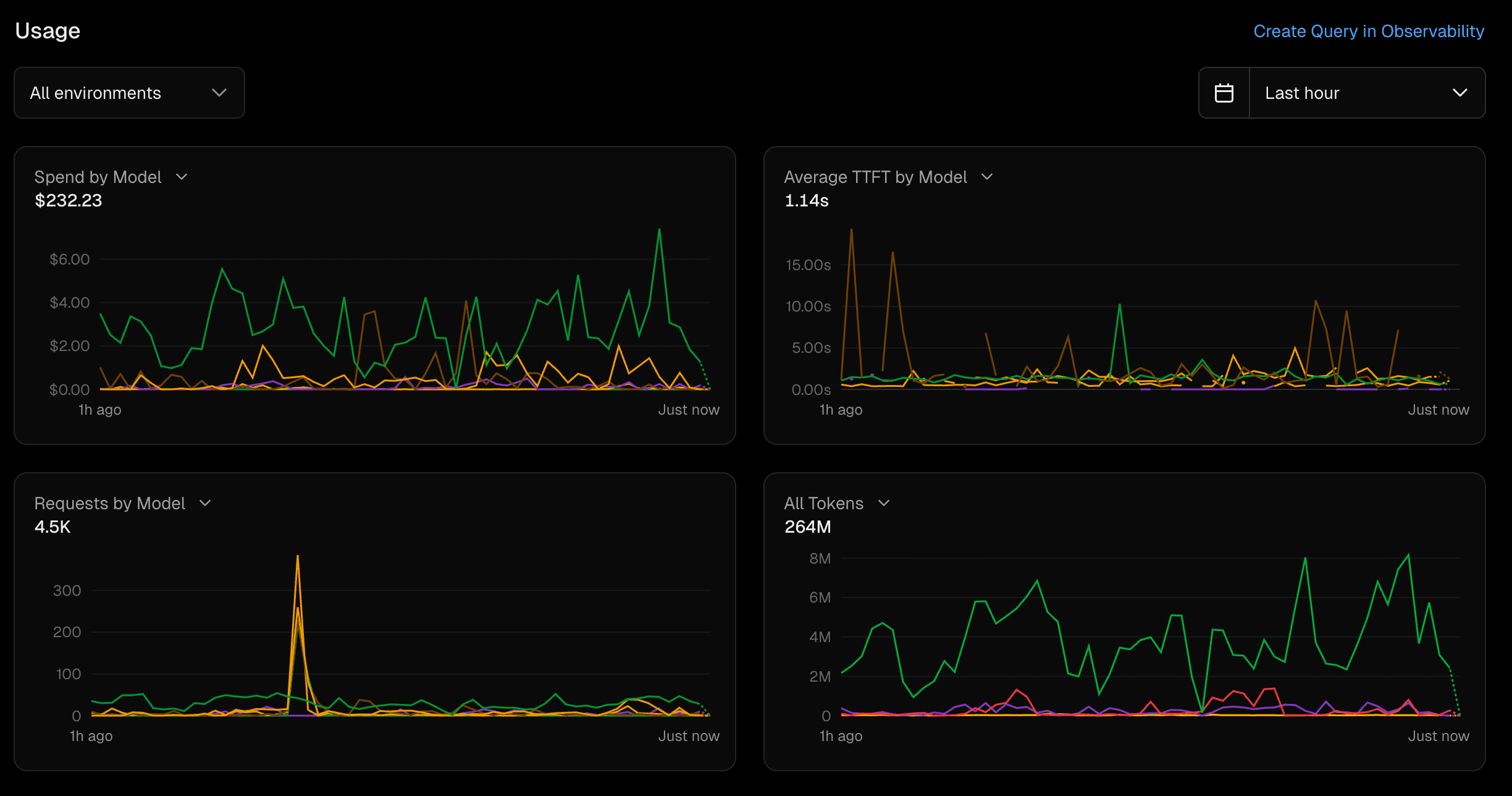

- Usage: Graphs and metrics to track your AI Gateway usage and cost

- Requests: Summaries by project, API key, and a detailed log of all requests

You can view these metrics in two ways:

- Team level: Stay in your team scope to see aggregated metrics across all projects

- Project level: Use the new dashboard view and select a specific project from the top project dropdown to see project-specific metrics

The Usage section displays four metrics to help you monitor your AI Gateway activity. Longer time ranges and extended data retention require Observability Plus.

The Requests by Model chart shows the number of requests made to each model over time. This can help you identify which models are being used most frequently and whether there are any spikes in usage.
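To make the chart's aggregation concrete, here is a minimal sketch of counting requests per model in hourly buckets. It assumes you already hold request records as dictionaries; the field names `timestamp` and `model` and the function `requests_by_model` are hypothetical and are not part of the AI Gateway API.

```python
from collections import Counter
from datetime import datetime


def requests_by_model(records, bucket_format="%Y-%m-%d %H:00"):
    """Count requests per (time bucket, model) pair.

    `records` is a list of dicts with hypothetical keys:
    "timestamp" (ISO 8601 string) and "model" (string).
    """
    counts = Counter()
    for record in records:
        # Truncate each timestamp to its hour to form the bucket label.
        bucket = datetime.fromisoformat(record["timestamp"]).strftime(bucket_format)
        counts[(bucket, record["model"])] += 1
    return counts


# Illustrative data only; the model names are placeholders.
records = [
    {"timestamp": "2025-01-01T10:05:00", "model": "model-a"},
    {"timestamp": "2025-01-01T10:40:00", "model": "model-a"},
    {"timestamp": "2025-01-01T10:45:00", "model": "model-b"},
    {"timestamp": "2025-01-01T11:10:00", "model": "model-a"},
]
counts = requests_by_model(records)
```

A bucket whose count is far above its neighbors for the same model is the kind of spike the chart makes visible.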

The Time to First Token chart shows the average time it takes for the AI Gateway to return the first token of a response. This can help you understand the latency of your requests and identify any performance issues.
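If you want to compare the chart against what a client observes, time to first token can be approximated by timing a streamed response until its first chunk arrives. The sketch below works on any Python iterable of chunks; `measure_ttft` and `fake_stream` are hypothetical names, and a client-side measurement includes network time, so it will not necessarily match the value the dashboard reports.

```python
import time


def measure_ttft(stream, clock=time.perf_counter):
    """Return (seconds until the first chunk, list of all chunks).

    `stream` is any iterable that yields response chunks.
    Returns None for the timing if the stream yields nothing.
    """
    start = clock()
    first_chunk_at = None
    chunks = []
    for chunk in stream:
        if first_chunk_at is None:
            # Record the moment the first chunk becomes available.
            first_chunk_at = clock()
        chunks.append(chunk)
    ttft = None if first_chunk_at is None else first_chunk_at - start
    return ttft, chunks


def fake_stream():
    """Stand-in for a streaming response; waits before the first chunk."""
    time.sleep(0.05)
    yield "Hello"
    yield ", world"


ttft, chunks = measure_ttft(fake_stream())
print(f"time to first token: {ttft:.3f}s, chunks received: {len(chunks)}")
```

The `clock` parameter exists only so the timing can be replaced with a deterministic clock in tests.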

The Input/Output Token Counts chart shows the number of input and output tokens for each request. This can help you understand the size of the requests being made and the responses being returned.

The Spend chart shows the total amount spent on AI Gateway requests over time. This can help you monitor your spending and identify any unexpected costs.

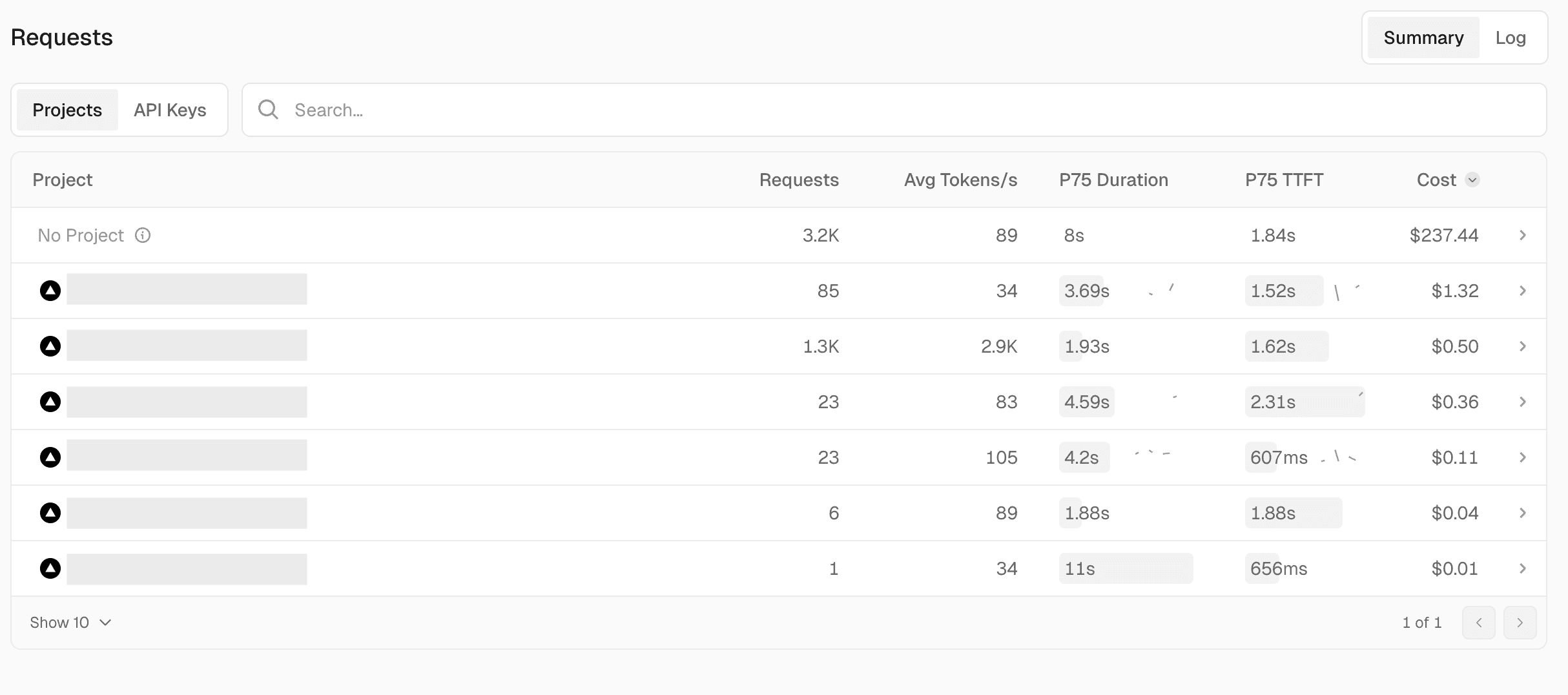

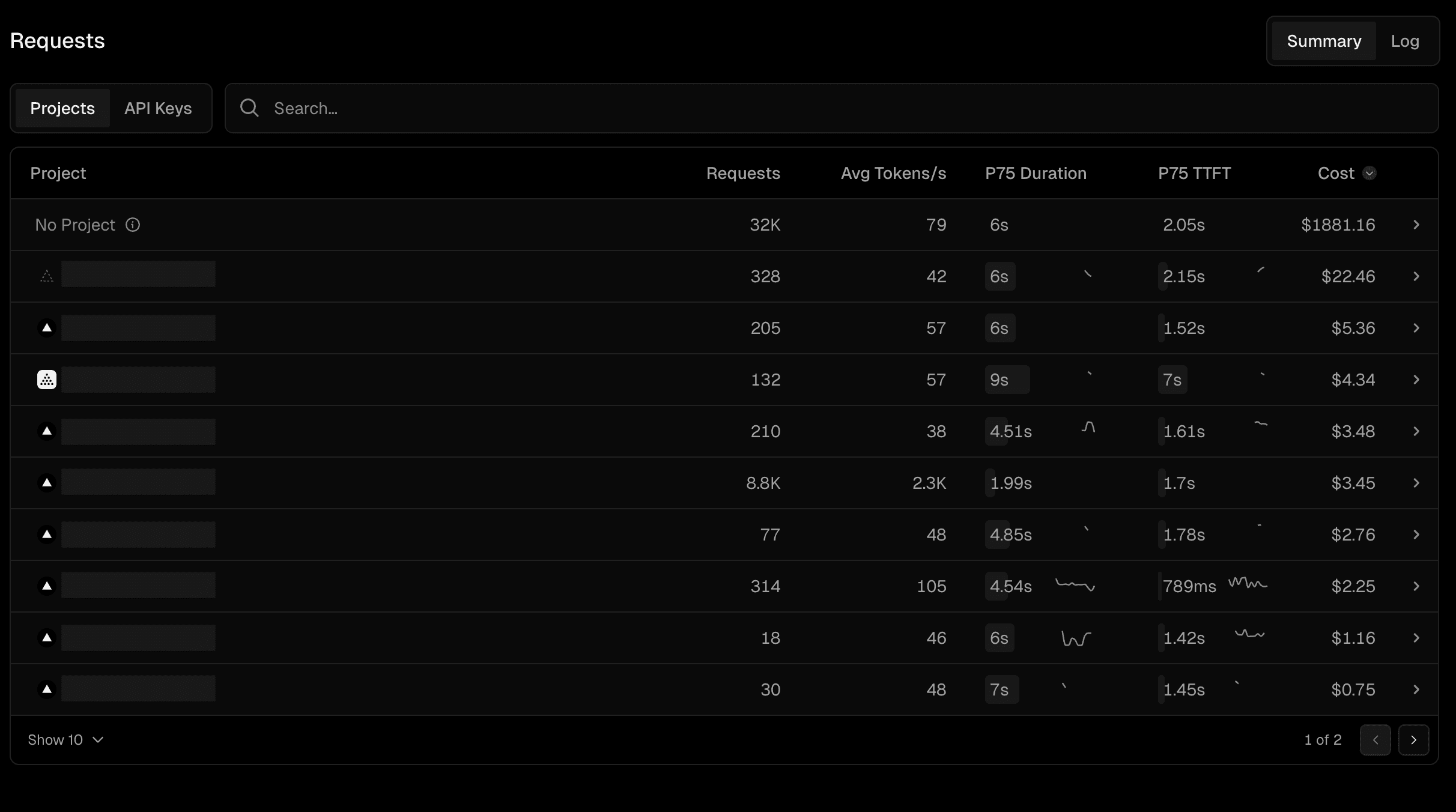

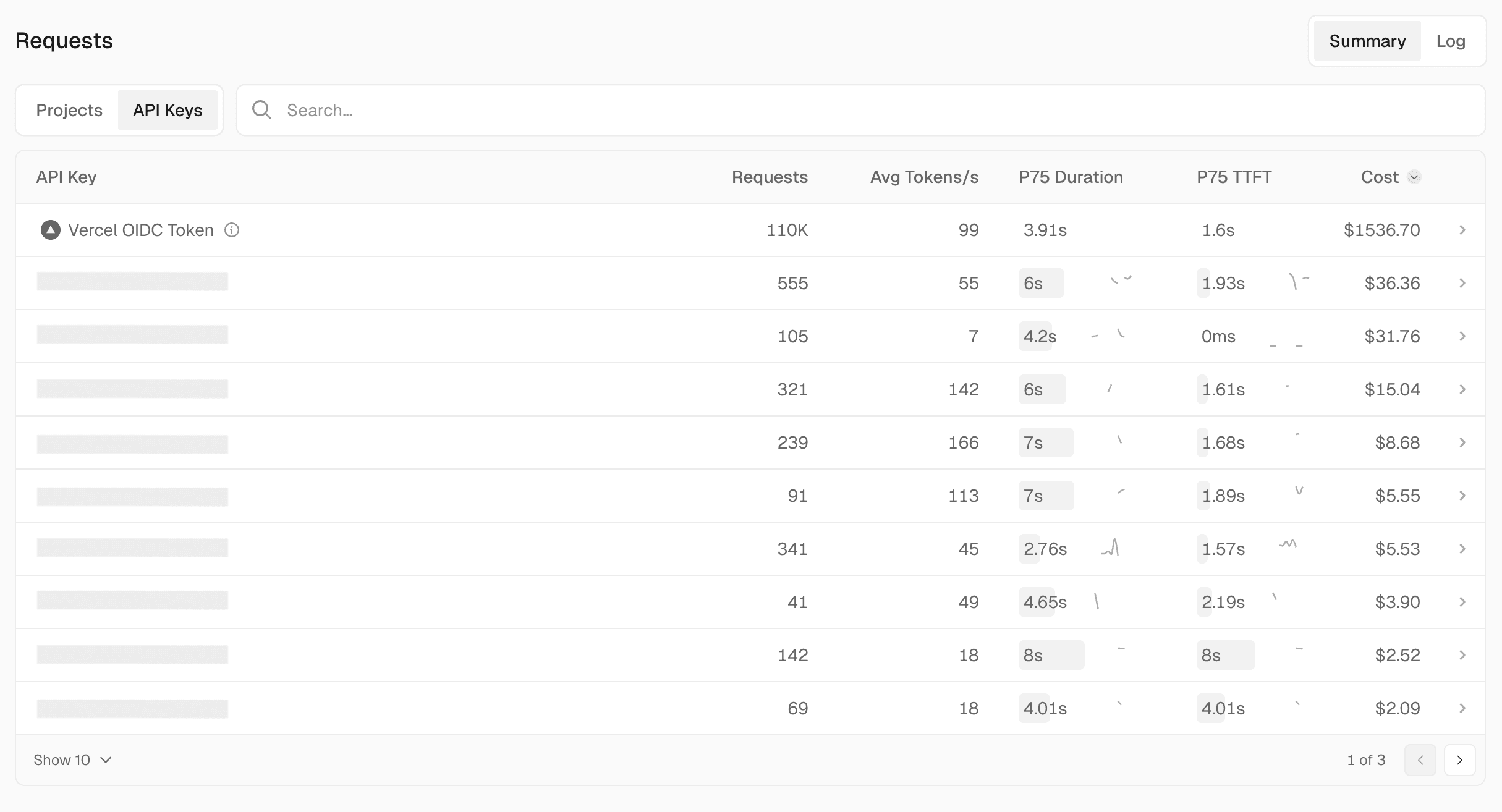

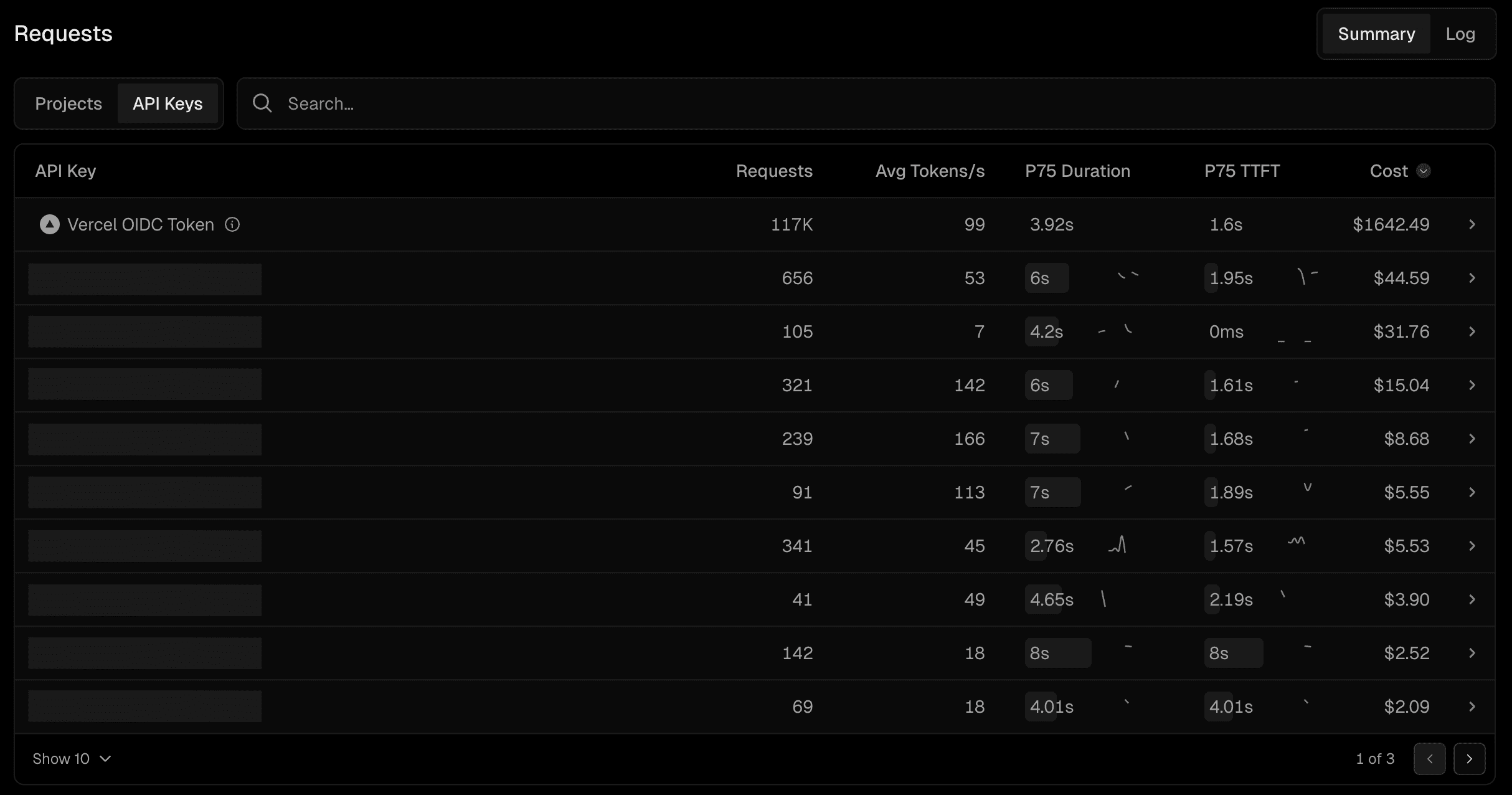

The Requests section displays summaries by project and by API key, along with a detailed log of all requests. Each summary includes the request count, average tokens, P75 duration, P75 TTFT (the 75th percentile of request duration and of time to first token), and cost for the selected time frame.
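The summary fields can be reproduced from raw request records, which may help when you read the P75 columns. The following is a sketch under stated assumptions: the record keys (`project`, `input_tokens`, `output_tokens`, `duration_ms`, `ttft_ms`, `cost_usd`) are hypothetical, "average tokens" is assumed to mean input plus output tokens per request, and the nearest-rank percentile used here may differ from the method the dashboard applies.

```python
import math
from collections import defaultdict


def p75(values):
    """Nearest-rank 75th percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def summarize(records, group_key):
    """Group records by `group_key` and compute per-group summary fields."""
    groups = defaultdict(list)
    for record in records:
        groups[record[group_key]].append(record)

    summary = {}
    for key, rows in groups.items():
        total_tokens = sum(r["input_tokens"] + r["output_tokens"] for r in rows)
        summary[key] = {
            "requests": len(rows),
            "avg_tokens": total_tokens / len(rows),
            "p75_duration_ms": p75([r["duration_ms"] for r in rows]),
            "p75_ttft_ms": p75([r["ttft_ms"] for r in rows]),
            "cost_usd": sum(r["cost_usd"] for r in rows),
        }
    return summary


# Illustrative records only; all values are made up.
records = [
    {"project": "web", "input_tokens": 100, "output_tokens": 50, "duration_ms": 100, "ttft_ms": 10, "cost_usd": 0.01},
    {"project": "web", "input_tokens": 200, "output_tokens": 50, "duration_ms": 200, "ttft_ms": 20, "cost_usd": 0.01},
    {"project": "web", "input_tokens": 300, "output_tokens": 50, "duration_ms": 300, "ttft_ms": 30, "cost_usd": 0.01},
    {"project": "web", "input_tokens": 400, "output_tokens": 50, "duration_ms": 400, "ttft_ms": 40, "cost_usd": 0.01},
    {"project": "api", "input_tokens": 80, "output_tokens": 20, "duration_ms": 150, "ttft_ms": 15, "cost_usd": 0.02},
]
by_project = summarize(records, "project")
```

Passing a different `group_key`, such as a hypothetical `api_key` field, would produce the per-key grouping instead.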

View usage grouped by project. Use this view to associate usage and spend with specific projects. Click into a project for more detailed information.

View usage grouped by API key. Use this view to track usage by a specific person or part of your organization. Click into an API key for more detailed information.

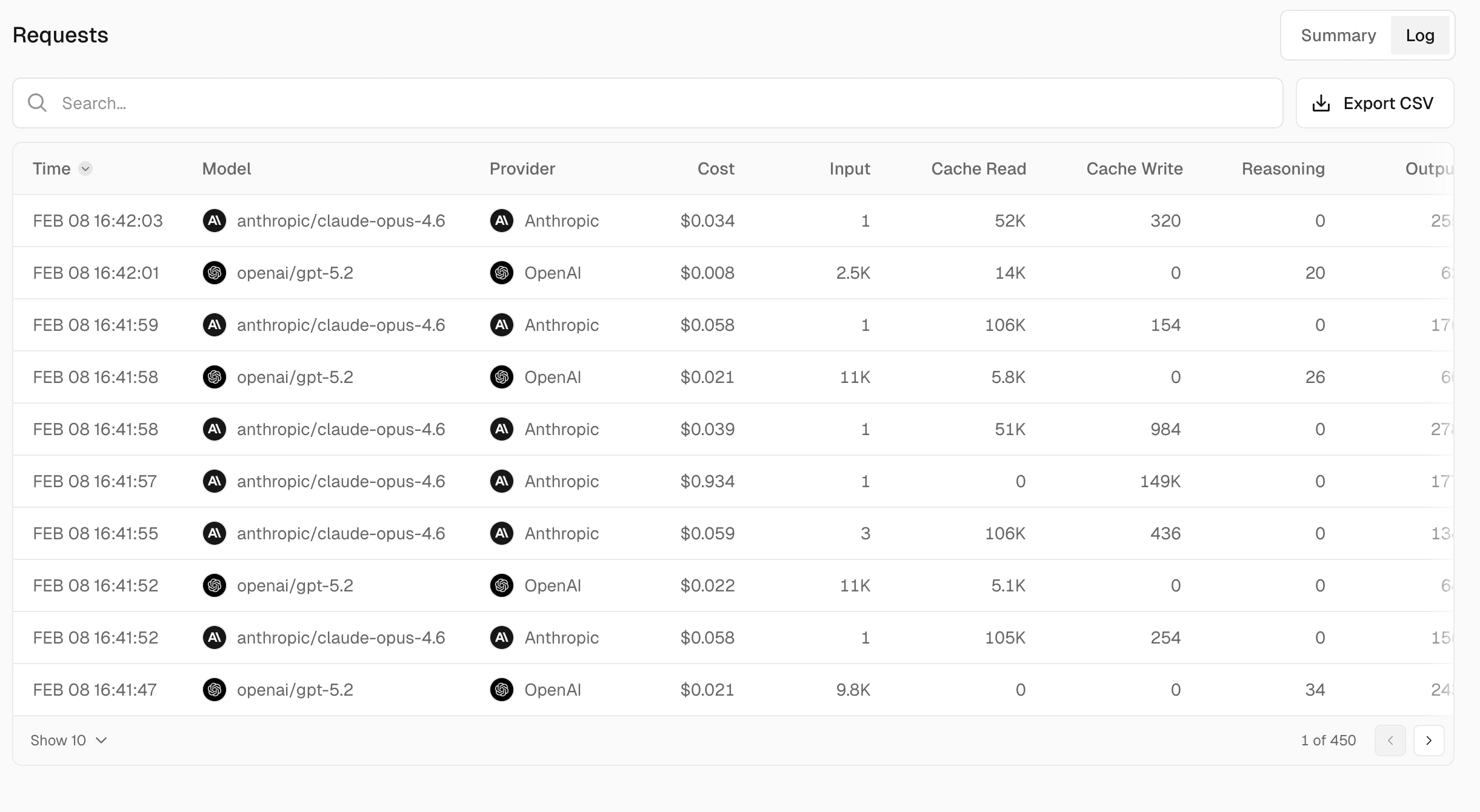

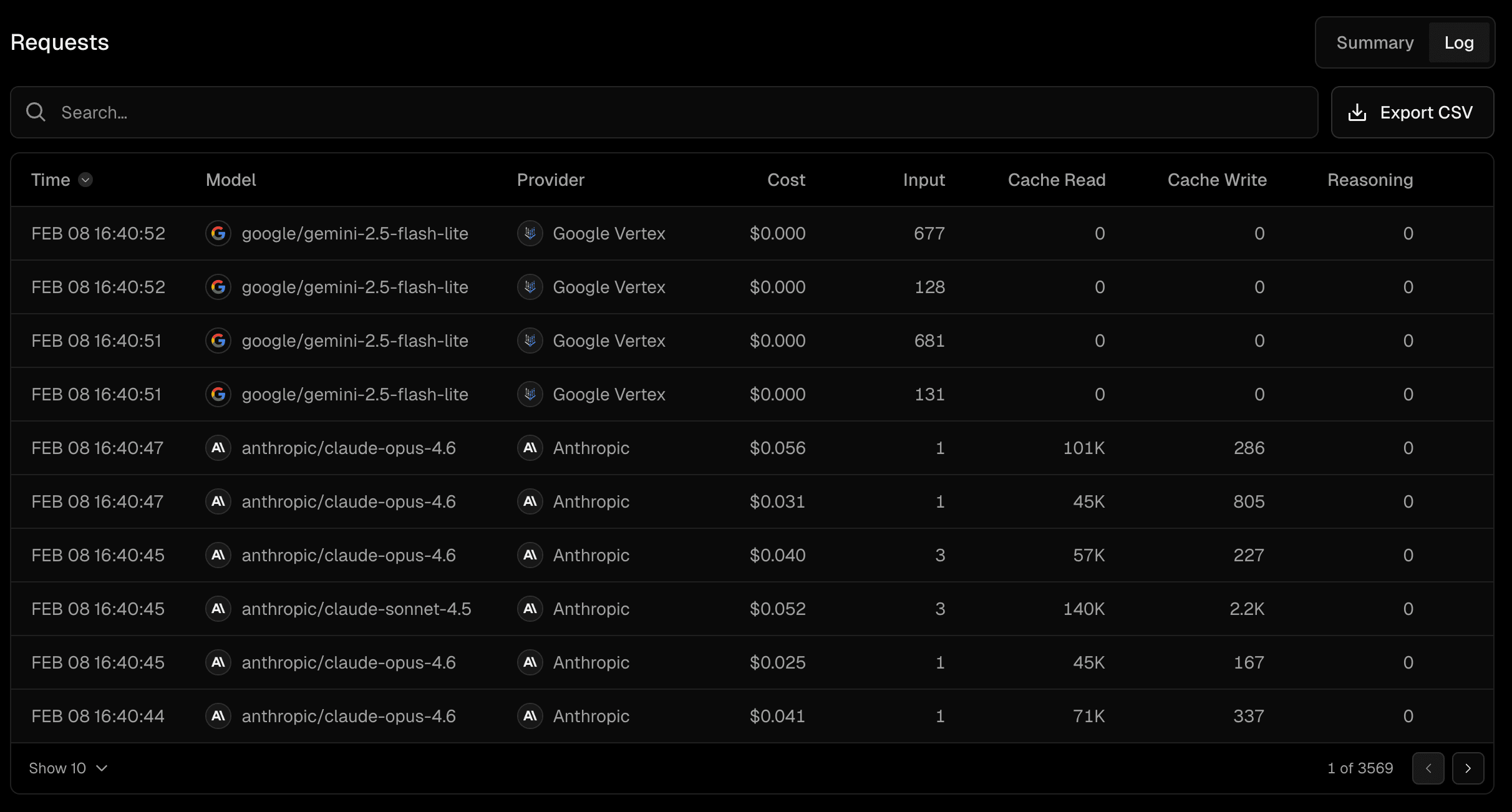

View a detailed log of all requests made to the AI Gateway, including all token types and the cost for each request. You can sort or export the logs for the selected time frame.
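Once you have exported the logs, you can sort and filter them offline. The sketch below assumes the export is a CSV file with a `cost_usd` column; the actual export format and column names are not documented here, so treat every column name and the function `most_expensive` as hypothetical and adjust them to match your file.

```python
import csv
import io


def most_expensive(csv_text, limit=3):
    """Return the `limit` rows with the highest cost, most expensive first.

    Assumes a hypothetical "cost_usd" column holding a decimal number.
    """
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    rows.sort(key=lambda row: float(row["cost_usd"]), reverse=True)
    return rows[:limit]


# Illustrative export contents only; the columns are assumptions.
EXPORT = """request_id,model,input_tokens,output_tokens,cost_usd
r1,model-a,120,80,0.0021
r2,model-b,900,400,0.0310
r3,model-a,60,20,0.0008
"""

top = most_expensive(EXPORT, limit=2)
for row in top:
    print(row["request_id"], row["model"], row["cost_usd"])
```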

By default, the AI Gateway Overview section shows metrics for all requests made across all projects in your team. This is useful for monitoring the overall usage and performance of the AI Gateway.

To view metrics for a specific project, you can access the project scope in two ways:

- Select the project from the top project dropdown in the dashboard

- Click into the project from the Projects view in the Requests section

Once you are in project scope, you see the same metrics, filtered to show only that project's activity.
