Fluid compute

Fluid compute offers a blend of serverless flexibility and server-like capabilities. Unlike traditional serverless architectures, which can suffer from cold starts and limited functionality, fluid compute provides a hybrid solution. It overcomes the limitations of both serverless and server-based approaches and delivers the advantages of both, including:

- Zero configuration out of the box: Fluid compute comes with preset defaults that automatically optimize your functions for both performance and cost efficiency.

- Optimized concurrency: Optimize resource usage by handling multiple invocations within a single function instance. Available with the Node.js and Python runtimes.

- Dynamic scaling: Fluid compute automatically optimizes existing resources before scaling up to meet traffic demands. This ensures low latency during high-traffic events and cost efficiency during quieter periods.

- Background processing: After fulfilling user requests, you can continue executing background tasks using waitUntil. This allows for a responsive user experience while performing time-consuming operations like logging and analytics in the background.

- Automatic cold start optimizations: Reduces the effects of cold starts through automatic bytecode optimization and function pre-warming on production deployments.

- Cross-region and availability zone failover: Ensure high availability by first failing over to another availability zone (AZ) within the same region if one goes down. If all zones in that region are unavailable, Vercel automatically redirects traffic to the next closest region. Zone-level failover also applies to non-fluid deployments.

- Error isolation: Unhandled errors won't crash other concurrent requests running on the same instance, maintaining reliability without sacrificing performance.
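As a rough illustration of the background processing item above, the sketch below responds first and lets a slow task finish afterwards. It uses a local stand-in for waitUntil so that it runs anywhere; in a deployed function you would import waitUntil from the @vercel/functions package instead. The handler, the recordAnalytics helper, and the stand-in itself are hypothetical.

```typescript
// Local stand-in for waitUntil (assumption: on Vercel you would instead use
// `import { waitUntil } from "@vercel/functions"`). It only records the
// promise so the caller can observe when the background work settles.
const background: Promise<unknown>[] = [];
function waitUntil(task: Promise<unknown>): void {
  background.push(task);
}

const events: string[] = [];

// Hypothetical slow task, for example sending analytics to another service.
async function recordAnalytics(path: string): Promise<void> {
  await new Promise<void>((resolve) => setTimeout(resolve, 20));
  events.push(`analytics:${path}`);
}

// Hypothetical handler: responds right away and defers the slow work.
function handler(path: string): string {
  waitUntil(recordAnalytics(path));
  events.push(`response:${path}`);
  return `ok ${path}`;
}

const body = handler("/home");
```

The response is produced before the analytics entry is recorded, which is the ordering that keeps the user-facing path fast.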

See What is compute? to learn more about fluid compute and how it compares to traditional serverless models.

As of April 23, 2025, fluid compute is enabled by default for new projects.

You can enable fluid compute through the Vercel dashboard or by configuring your vercel.json file for specific environments or deployments.

To enable fluid compute through the dashboard:

- Navigate to your project's Functions Settings in the dashboard

- Locate the Fluid Compute section

- Toggle the switch to enable fluid compute for your project

- Click Save to apply the changes

- Deploy your project for the changes to take effect

When you enable it through the dashboard, fluid compute applies to all deployments for that project by default.

You can programmatically enable fluid compute using the fluid property in your vercel.json file. This approach is particularly useful for:

- Testing on specific environments: Enable fluid compute only for custom environments when using branch tracking

- Per-deployment configuration: Test fluid compute on individual deployments before enabling it project-wide

```json
{
  "$schema": "https://openapi.vercel.sh/vercel.json",
  "fluid": true
}
```

Fluid compute is available for the following runtimes:

Fluid compute allows multiple invocations to share a single function instance. This is especially valuable for AI applications, where tasks like fetching embeddings, querying vector databases, or calling external APIs are often I/O-bound. By allowing concurrent execution within the same instance, you can reduce cold starts, minimize latency, and lower compute costs.

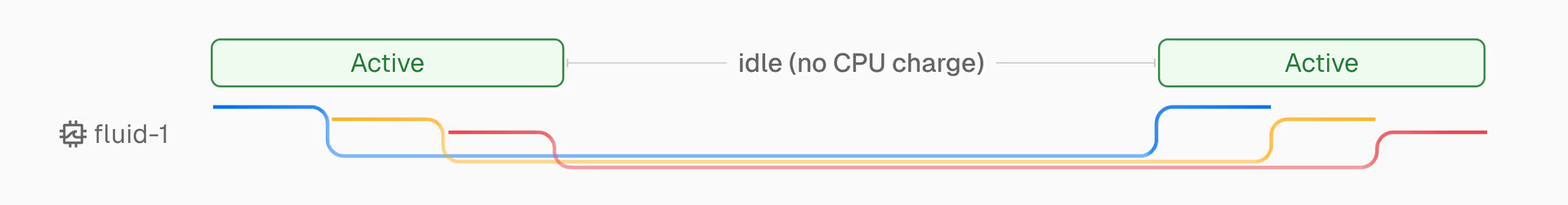

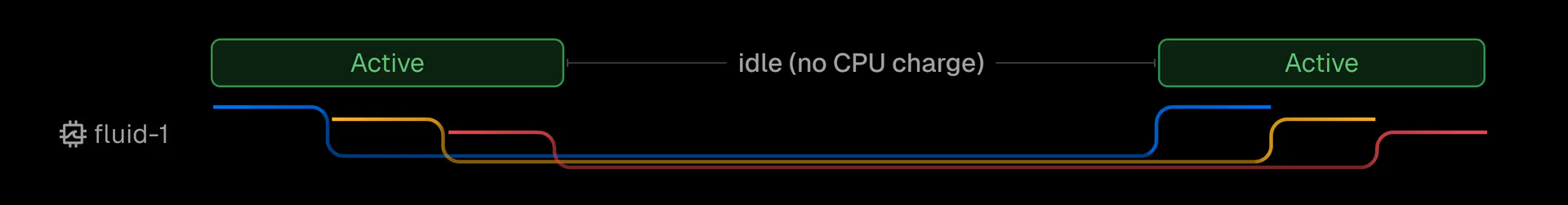
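To make the idea of in-function concurrency concrete, here is a minimal sketch of several I/O-bound invocations overlapping inside one process. The handler and its timer-based "network call" are hypothetical stand-ins, and the sketch models only the overlap, not how the platform dispatches requests.

```typescript
// Counts how many invocations are in progress inside this one "instance".
let inFlight = 0;
let peakInFlight = 0;

// Hypothetical I/O-bound handler: the await stands in for a network call
// (an embeddings request, a vector database query, and so on).
async function handleInvocation(id: number): Promise<string> {
  inFlight += 1;
  peakInFlight = Math.max(peakInFlight, inFlight);
  await new Promise<void>((resolve) => setTimeout(resolve, 10));
  inFlight -= 1;
  return `done ${id}`;
}

// Three invocations start before any of them finishes waiting on I/O,
// so they overlap in the same process instead of each needing an instance.
const results: Promise<string[]> = Promise.all(
  [1, 2, 3].map((id) => handleInvocation(id))
);
```

While each invocation waits on I/O the process is otherwise idle, which is why sharing it among invocations can reduce the total compute needed.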

Vercel Functions prioritize existing idle resources before allocating new ones, reducing unnecessary compute usage. This in-function concurrency is especially effective when multiple requests target the same function, leading to fewer total resources needed for the same workload.
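The routing behavior described above can be sketched as a small scheduler that reuses an instance with spare capacity and allocates a new one only when every instance is full. This is a simplified model, not Vercel's scheduler; the Instance shape and the MAX_CONCURRENCY value are assumptions made for illustration.

```typescript
interface Instance {
  id: number;
  active: number; // invocations currently running on this instance
}

// Hypothetical per-instance limit; the real limit is managed by the platform.
const MAX_CONCURRENCY = 2;
const instances: Instance[] = [];

// Reuse an instance with spare capacity; allocate a new one only when all are full.
function route(): Instance {
  let target = instances.find((i) => i.active < MAX_CONCURRENCY);
  if (!target) {
    target = { id: instances.length + 1, active: 0 };
    instances.push(target);
  }
  target.active += 1;
  return target;
}

// Mark one invocation on the given instance as finished.
function release(instance: Instance): void {
  instance.active -= 1;
}

// Three simultaneous invocations: two share instance 1, the third needs instance 2.
const placements = [route(), route(), route()].map((i) => i.id);
```

Once an invocation on the first instance is released, the next call to route returns that instance again rather than creating a third one.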

Optimized concurrency in fluid compute is available when using Node.js or Python runtimes. See the efficient serverless Node.js with in-function concurrency blog post to learn more.

When using Node.js version 20+, Vercel Functions use bytecode caching to reduce cold start times. This stores the compiled bytecode of JavaScript files after their first execution, eliminating the need for recompilation during subsequent cold starts.

Because the cache is populated on first execution, the first request does not benefit from it. Subsequent cold starts load the cached bytecode and initialize faster. This optimization is especially beneficial for functions that are invoked infrequently, as they will see faster cold starts and reduced latency for end users.

Bytecode caching is only applied to production environments, and is not available in development or preview deployments.

For frameworks that output ESM, all CommonJS dependencies (for example, react, node-fetch) will be opted into bytecode caching.
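For readers who want to see the underlying idea, the sketch below uses the code cache support in Node.js's built-in vm module to compile a script once and reuse the compiled data on a second load. It illustrates the general V8 mechanism only; it is an assumption-level demonstration and not a description of how Vercel stores or applies its cache.

```typescript
import * as vm from "node:vm";

const source = "function add(a, b) { return a + b; } add(2, 3);";

// First load: compile from source, run it, then serialize V8's code cache.
const first = new vm.Script(source);
const firstResult = first.runInThisContext();
const cache = first.createCachedData();

// Later load: hand the cache back so V8 can skip recompiling what it covers.
const second = new vm.Script(source, { cachedData: cache });
const cacheAccepted = second.cachedDataRejected === false;
const secondResult = second.runInThisContext();
```

The cache can only exist after the first compilation, which matches the point above that the first request does not benefit from it.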

On traditional serverless compute, the isolation boundary refers to the separation of individual instances of a function to ensure they don't interfere with each other. This provides a secure execution environment for each function.

However, each function uses a microVM for isolation, which can lead to slower start-up times. Resource usage can also increase, because the microVM sits idle between invocations.

Fluid compute uses a different approach to isolation. Instead of using a microVM for each function invocation, multiple invocations can share the same physical instance (a global state/process) concurrently. This allows functions to share resources and execute in the same environment, which can improve performance and reduce costs.

When uncaught exceptions or unhandled rejections happen in Node.js, fluid compute logs the error and lets current requests finish before stopping the process. This means one broken request won't crash other requests running on the same instance, and you get the reliability of traditional serverless with the performance benefits of shared resources.
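The log, drain, then stop sequence described above can be modeled roughly as follows. This is a hypothetical simulation rather than Vercel's implementation: the SharedInstance class and its methods are invented for illustration, and in a real Node.js process the trigger would be an uncaughtException or unhandledRejection event.

```typescript
// Hypothetical model of one shared instance; not Vercel's implementation.
class SharedInstance {
  private inFlight = new Set<Promise<unknown>>();
  private draining = false;
  stopped = false;
  log: string[] = [];

  // Accept a request unless the instance has started draining.
  accept<T>(work: () => Promise<T>): Promise<T> {
    if (this.draining) {
      return Promise.reject(new Error("instance is draining"));
    }
    const task = work();
    this.inFlight.add(task);
    const done = () => { this.inFlight.delete(task); };
    task.then(done, done);
    return task;
  }

  // Called when an unhandled error surfaces: log it, stop taking new
  // requests, wait for current ones to settle, then mark the process stopped.
  async onUnhandledError(error: Error): Promise<void> {
    this.log.push(`unhandled: ${error.message}`);
    this.draining = true;
    const pending = Array.from(this.inFlight).map((p) => p.catch(() => undefined));
    await Promise.all(pending);
    this.stopped = true;
  }
}

const delay = (ms: number) => new Promise<void>((r) => setTimeout(r, ms));

const instance = new SharedInstance();
const healthy = instance.accept(async () => { await delay(20); return "healthy response"; });
const shutdown = instance.onUnhandledError(new Error("bug in another request"));
const lateRequest = instance.accept(async () => "too late").catch((e: Error) => e.message);
```

The request that was already running still completes, while the request that arrives after the error is turned away so it can be served by a different instance.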

Fluid Compute includes default settings that vary by plan:

| Settings | Hobby | Pro | Enterprise |
|---|---|---|---|
| CPU configuration | Standard | Standard / Performance | Standard / Performance |
| Default / Max duration | 300s (5 minutes) / 300s (5 minutes) | 300s (5 minutes) / 800s (13 minutes) | 300s (5 minutes) / 800s (13 minutes) |
| Multi-region failover | | | |
| Multi-region functions | Up to 3 | All |

The settings you configure in your function code, dashboard, or vercel.json file will override the default fluid compute settings.

The following order of precedence determines which settings take effect. Settings with a lower precedence number override those listed below them:

| Precedence | Stage | Explanation | Can Override |
|---|---|---|---|
| 1 | Function code | Settings in your function code always take top priority. These include max duration defined directly in your code. | maxDuration |
| 2 | vercel.json | Any settings in your vercel.json file, like max duration and region, will override dashboard and Fluid defaults. | maxDuration, region |
| 3 | Dashboard | Changes made in the dashboard, such as max duration, region, or CPU, override Fluid defaults. | maxDuration, region, memory |
| 4 | Fluid defaults | These are the default settings applied automatically when fluid compute is enabled and you do not configure any other settings. | |
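One way to read the table is as a lookup that walks the stages from precedence 1 to 4 and keeps the first value it finds for each setting. The sketch below models only maxDuration and region. The Settings type, the resolveSettings function, and the sample values are hypothetical, apart from the 300s default taken from the plan table.

```typescript
interface Settings {
  maxDuration?: number; // seconds
  region?: string;
}

// Layers are ordered from highest precedence (1) to lowest (4), as in the table.
function resolveSettings(layers: Settings[]): Settings {
  const resolved: Settings = {};
  for (const layer of layers) {
    // Keep the first value found, so a higher-precedence layer wins.
    if (resolved.maxDuration === undefined) resolved.maxDuration = layer.maxDuration;
    if (resolved.region === undefined) resolved.region = layer.region;
  }
  return resolved;
}

// Hypothetical values for illustration only.
const functionCode: Settings = { maxDuration: 60 };
const vercelJson: Settings = { maxDuration: 120, region: "fra1" };
const dashboard: Settings = { region: "iad1" };
const fluidDefaults: Settings = { maxDuration: 300 };

const effective = resolveSettings([functionCode, vercelJson, dashboard, fluidDefaults]);
```

Here the max duration comes from the function code and the region from vercel.json, because each is the highest-precedence stage that sets that value.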

See the fluid compute pricing documentation for details on how fluid compute is priced, including active CPU, provisioned memory, and invocations.
