Speed Insights Overview

Speed Insights is available on all plans

Vercel Speed Insights provides you with a detailed view of your website's performance metrics, based on Core Web Vitals, enabling you to make data-driven decisions for optimizing your site. For granular visitor data, use Web Analytics.

The Speed Insights dashboard offers in-depth information about scores and individual metrics without the need for code modifications or leaving the Vercel dashboard.

To get started, follow the quickstart to enable Speed Insights and learn more about the dashboard view and metrics.

When you enable Speed Insights, Vercel tracks data on all deployed environments, including preview and production deployments.

Once you enable Speed Insights, you can access the dashboard by selecting your project in the Vercel dashboard and clicking Speed Insights in the sidebar.

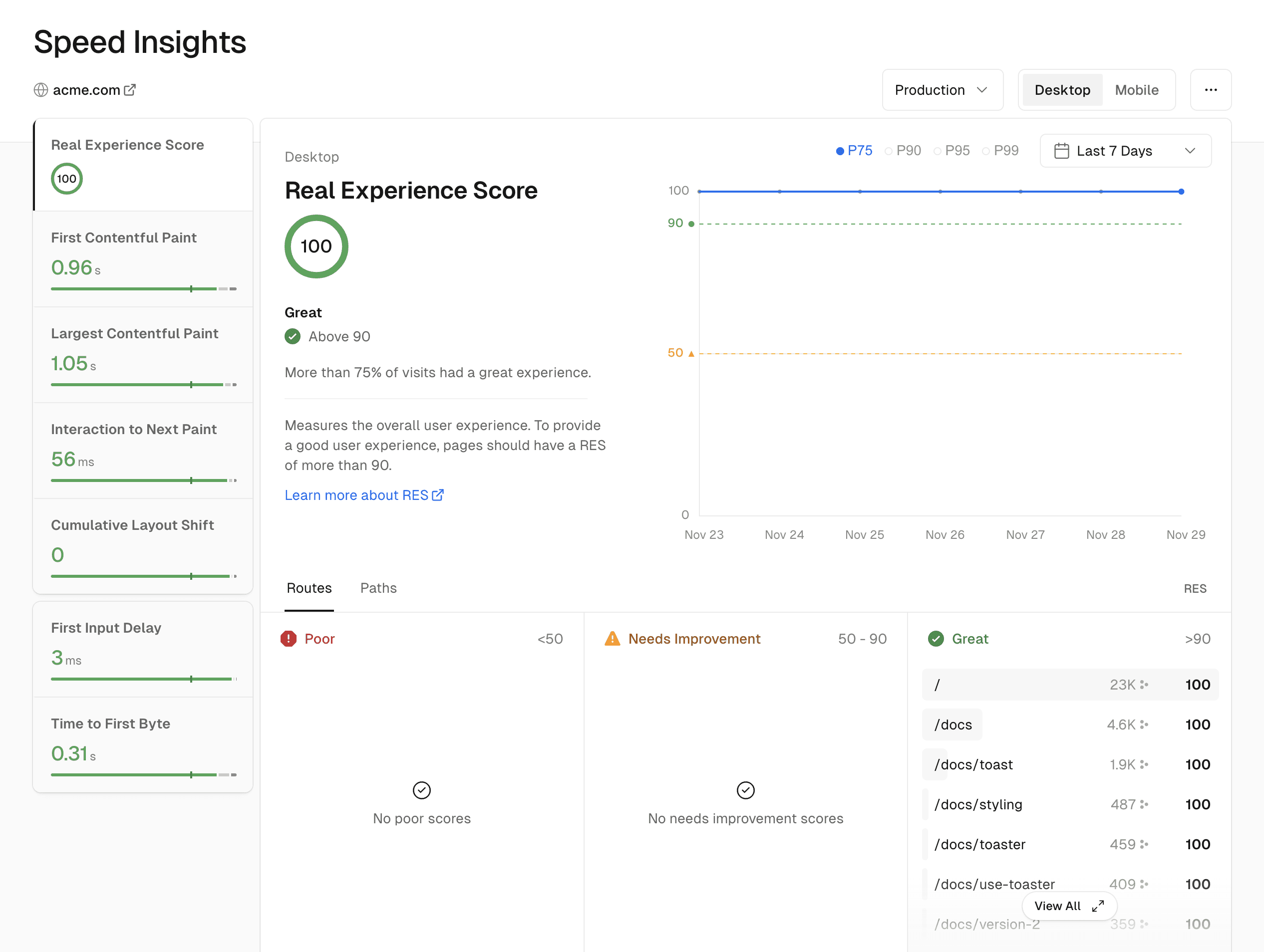

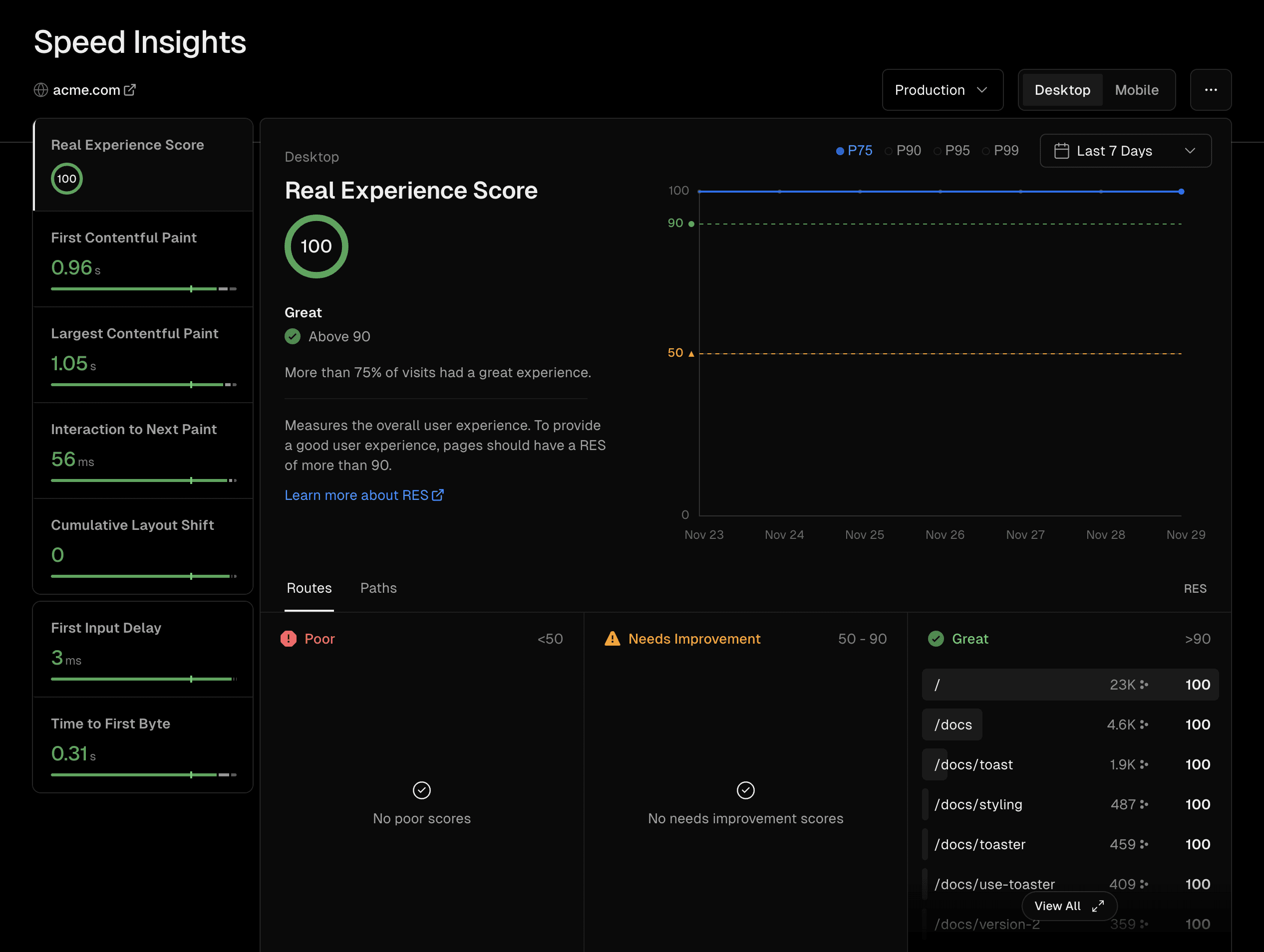

The Speed Insights dashboard displays data that you can sort and inspect based on a variety of parameters:

- Device type: Toggle between mobile and desktop.

- Environment: Filter by preview, production, or all environments.

- Time range: Select the timeframe dropdown in the top-right of the page to choose a predefined timeframe. Alternatively, select the Calendar icon to specify a custom timeframe. The available durations vary, depending on the account type.

- Performance metric: Switch between metrics such as Real Experience Score (RES), First Contentful Paint (FCP) and Largest Contentful Paint (LCP), then use the performance metric views to inspect the selected metric in more detail.

- Performance metric views: When you select a performance metric, the dashboard displays three views:

- Time-based line graph that, by default, shows the P75 percentile of data for the selected metric data points and time range. You can include P90, P95 and P99 in this view.

- Kanban board that shows which routes, paths, or HTML elements need improvement (URLs that make up less than 0.5% of visits are not shown by default).

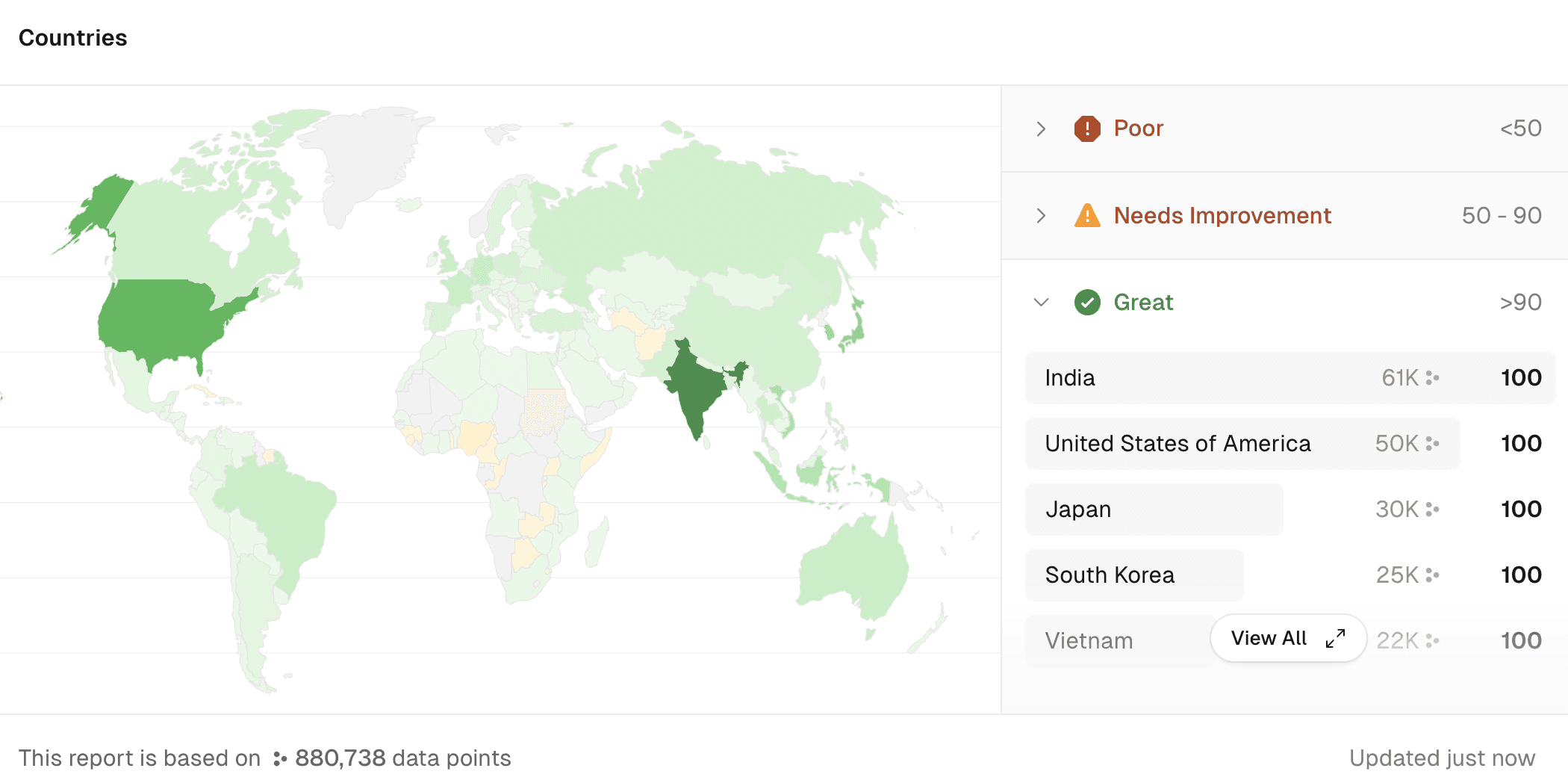

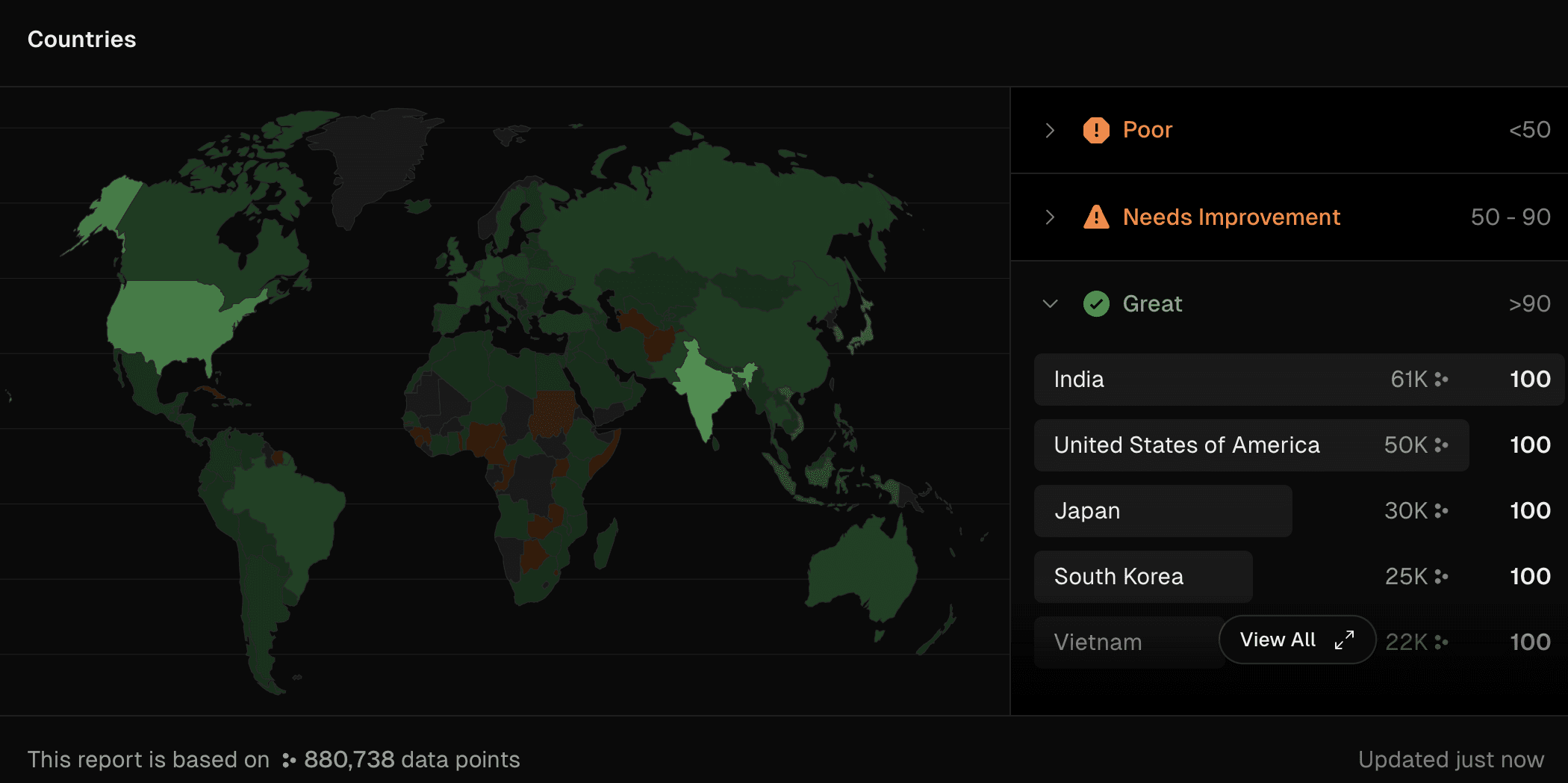

- Geographical map showing the experience metric by country:

Geographic map of the P75 score where the color intensity indicates the relative amount of data points per country
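To make the percentile lines in the line graph view more concrete, here is a minimal sketch of how P75, P90, P95 and P99 could be computed from a set of metric samples. It uses the nearest-rank method, which is an assumption: this page does not state which percentile method Speed Insights uses. The `percentile` helper and the sample LCP values are hypothetical and exist only for illustration.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it.

    Assumption: nearest-rank is used here for simplicity; it is not
    confirmed to be the method Speed Insights applies.
    """
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Hypothetical LCP samples in milliseconds (illustrative data only).
lcp_ms = [1200, 900, 2500, 1800, 3100, 1500, 1100, 4000, 1300, 1700]

# One value per percentile line that the graph can display.
summary = {f"P{p}": percentile(lcp_ms, p) for p in (75, 90, 95, 99)}
print(summary)
```

Reading the result: a P75 of 2500 ms would mean that 75% of the sampled page loads had an LCP of 2500 ms or less, so higher percentiles surface the slower experiences that an average would hide.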

The data in the Kanban and map views is selectable so that you can filter by country, route, path and HTML element. The red, orange and green colors in the map view indicate the P75 score.
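The default visibility rule for the Kanban board (URLs with less than 0.5% of visits are hidden) can be sketched as a simple share-of-traffic filter. This is an illustration only: the `visible_routes` function, the `MIN_SHARE` constant, and the sample visit list are hypothetical names and data, not part of any Vercel API.

```python
from collections import Counter

# 0.5% of visits, the default threshold mentioned for the Kanban board.
MIN_SHARE = 0.005


def visible_routes(visits, min_share=MIN_SHARE):
    """Return {route: share_of_visits} for routes whose share of all
    visits is at least min_share. Routes below the threshold are omitted.
    """
    counts = Counter(visits)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {
        route: count / total
        for route, count in counts.items()
        if count / total >= min_share
    }


# Hypothetical visit log: one entry per page view (illustrative data only).
visits = ["/"] * 700 + ["/blog"] * 297 + ["/legal"] * 3

shown = visible_routes(visits)
print(sorted(shown))
```

In this sample, `/legal` accounts for 0.3% of visits, so it falls below the threshold and is left out, while `/` and `/blog` remain visible.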
