Features

- Next.js App Router

- Advanced routing for seamless navigation and performance

- React Server Components (RSCs) and Server Actions for server-side rendering and increased performance

- AI SDK

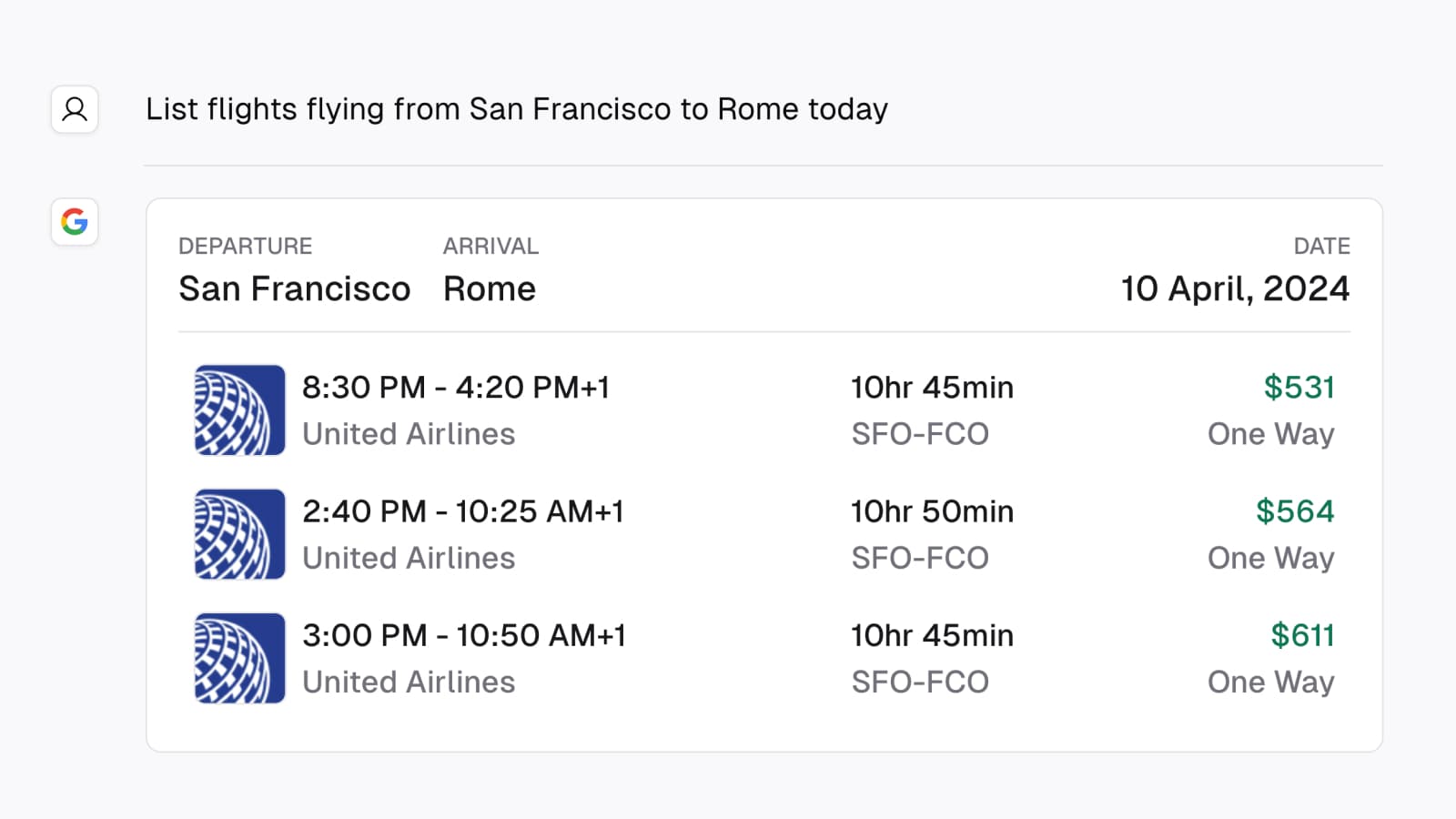

- Unified API for generating text, structured objects, and tool calls with LLMs

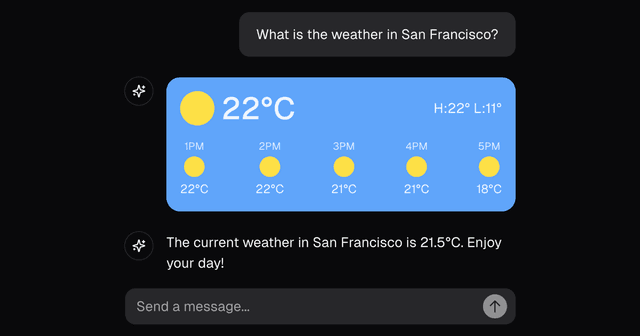

- Hooks for building dynamic chat and generative user interfaces

- Supports Google (default), OpenAI, Anthropic, Cohere, and other model providers

- shadcn/ui

- Styling with Tailwind CSS

- Component primitives from Radix UI for accessibility and flexibility

- Data Persistence

- Vercel Postgres powered by Neon for saving chat history and user data

- Vercel Blob for efficient object storage

- NextAuth.js

- Simple and secure authentication

Model Providers

This template ships with Google Gemini `gemini-1.5-pro` as the default model. With the AI SDK, however, you can switch to OpenAI, Anthropic, Cohere, and many other LLM providers with just a few lines of code.
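As a rough illustration of keeping the provider choice in configuration, the sketch below picks a provider and model ID from an environment-style map and defaults to Google. It rests on assumptions: the `AI_PROVIDER` variable, the `selectModel` helper, and the non-Google model IDs are hypothetical and are not taken from this template. In the AI SDK itself, the switch happens by passing a different provider model (for example `google("gemini-1.5-pro")` from `@ai-sdk/google` or `openai("gpt-4o")` from `@ai-sdk/openai`) to calls such as `generateText`.

```typescript
// Hypothetical provider table: the non-Google IDs are illustrative examples,
// not values read from this template's source.
type Provider = "google" | "openai" | "anthropic" | "cohere";

interface ModelChoice {
  provider: Provider;
  modelId: string;
}

const DEFAULT_MODELS: Record<Provider, string> = {
  google: "gemini-1.5-pro", // the template's stated default
  openai: "gpt-4o",
  anthropic: "claude-3-5-sonnet-20240620",
  cohere: "command-r-plus",
};

// Type guard: true only for names that appear as keys in DEFAULT_MODELS.
function isProvider(value: string): value is Provider {
  return Object.prototype.hasOwnProperty.call(DEFAULT_MODELS, value);
}

// Reads the hypothetical AI_PROVIDER variable (case-insensitive) and falls
// back to Google when it is unset or not a known provider name.
function selectModel(env: Record<string, string | undefined>): ModelChoice {
  const requested = (env.AI_PROVIDER ?? "google").toLowerCase();
  const provider: Provider = isProvider(requested) ? requested : "google";
  return { provider, modelId: DEFAULT_MODELS[provider] };
}

console.log(selectModel({})); // { provider: 'google', modelId: 'gemini-1.5-pro' }
console.log(selectModel({ AI_PROVIDER: "openai" }));
```

Falling back to Google on an unrecognized value keeps the app running with the stated default; throwing an error instead would surface typos in the variable sooner.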

Deploy Your Own

You can deploy your own version of the Next.js AI Chatbot to Vercel with one click.

Running locally

You will need the environment variables defined in `.env.example` to run the Next.js AI Chatbot. We recommend using Vercel Environment Variables for this, but a `.env` file is all that is necessary.
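One way to catch a missing variable before starting the app is to compare the keys in `.env.example` against your local `.env`. The sketch below works on the file contents as strings to stay self-contained; `parseEnv` and `missingVars` are hypothetical helpers, the parser handles only simple `KEY=value` lines, and the variable names in the usage lines are placeholders, not necessarily the ones this template's `.env.example` defines.

```typescript
// Simplified dotenv-style parser: one KEY=value per line, skipping blank
// lines and # comments. It does not handle multiline values or `export`.
function parseEnv(text: string): Map<string, string> {
  const vars = new Map<string, string>();
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq <= 0) continue; // no "KEY=" prefix on this line
    const key = line.slice(0, eq).trim();
    // Strip one pair of matching surrounding quotes, if present.
    const value = line.slice(eq + 1).trim().replace(/^(['"])(.*)\1$/, "$2");
    vars.set(key, value);
  }
  return vars;
}

// Lists keys from the example file that are absent or empty in the local file,
// in the order they appear in the example file.
function missingVars(exampleText: string, envText: string): string[] {
  const required = parseEnv(exampleText);
  const actual = parseEnv(envText);
  return Array.from(required.keys()).filter((key) => !actual.get(key));
}

// Placeholder contents standing in for .env.example and .env.
const example = "# Copy to .env\nAUTH_SECRET=\nPOSTGRES_URL=\n";
const local = "AUTH_SECRET=abc123\n";
console.log(missingVars(example, local)); // [ 'POSTGRES_URL' ]
```

In a real project you would read both files from disk (for example with Node's `fs.readFileSync`) and exit with an error if the returned list is non-empty.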

Note: You should not commit your `.env` file or it will expose secrets that will allow others to control access to your various Google Cloud and authentication provider accounts.

- Install Vercel CLI: `npm i -g vercel`
- Link local instance with Vercel and GitHub accounts (creates `.vercel` directory): `vercel link`
- Download your environment variables: `vercel env pull`

Your app template should now be running on localhost:3000.

Related Templates

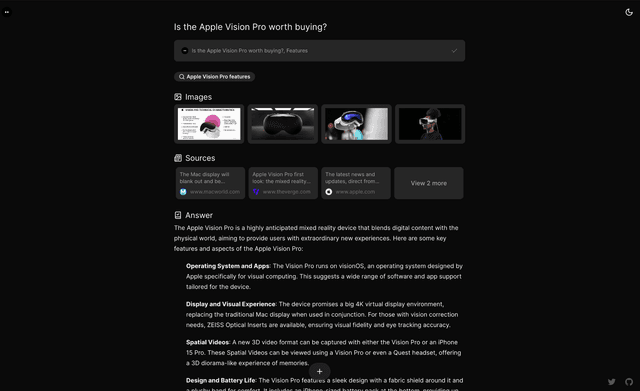

Morphic: AI-powered answer engine

Next.js AI Chatbot

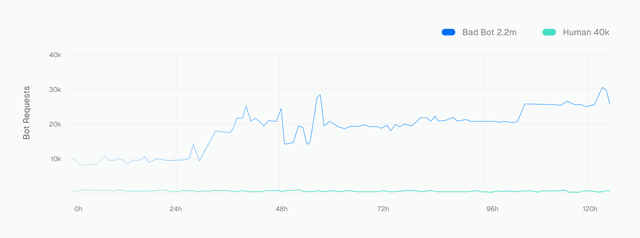

Advanced AI Bot Protection