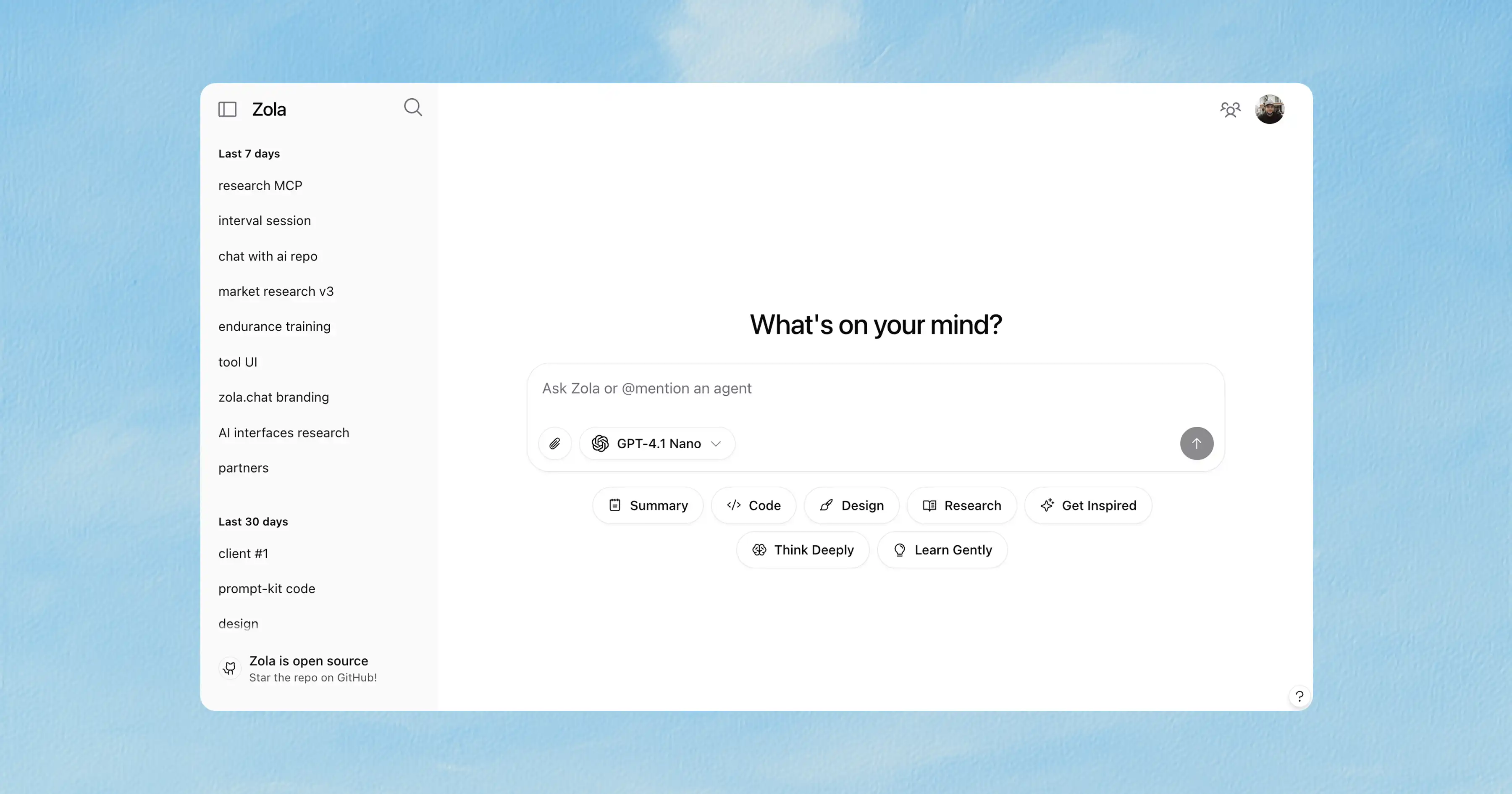

Zola

Zola is the open-source chat interface for all your models.

Features

- Multi-model support: OpenAI, Mistral, Claude, Gemini, Ollama (local models)

- Bring your own API key (BYOK) support via OpenRouter

- File uploads

- Clean, responsive UI with light/dark themes

- Built with Tailwind CSS, shadcn/ui, and prompt-kit

- Open-source and self-hostable

- Customizable: user system prompt, multiple layout options

- Local AI with Ollama: Run models locally with automatic model detection

- Full MCP support (work in progress)

Quick Start

Option 1: With OpenAI (Cloud)

Option 2: With Ollama (Local)

Zola will automatically detect your local Ollama models!
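As a rough illustration of what model detection can look like, the sketch below asks a local Ollama server for its installed models through Ollama's `GET /api/tags` endpoint. This is not Zola's actual implementation: the helper names `parseOllamaModels` and `detectOllamaModels` are hypothetical, and the base URL assumes Ollama is running on its default port (11434).

```typescript
// Subset of the JSON returned by Ollama's GET /api/tags (only the fields used here).
interface OllamaTagsResponse {
  models?: { name: string }[];
}

// Hypothetical helper: pull the model names out of a parsed /api/tags body.
function parseOllamaModels(body: OllamaTagsResponse): string[] {
  return (body.models ?? []).map((m) => m.name);
}

// Hypothetical helper: list the models installed on a local Ollama server.
// Assumes the default Ollama address; returns an empty list if the server
// is unreachable or responds with an error status.
async function detectOllamaModels(
  baseUrl: string = "http://localhost:11434",
): Promise<string[]> {
  try {
    const res = await fetch(`${baseUrl}/api/tags`);
    if (!res.ok) return [];
    return parseOllamaModels((await res.json()) as OllamaTagsResponse);
  } catch {
    return [];
  }
}
```

Calling `detectOllamaModels()` with Ollama running should resolve to the names of the models you have pulled. Falling back to an empty list keeps a UI usable when Ollama is not installed.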

Option 3: Docker with Ollama

To unlock features such as auth and file uploads, see INSTALL.md.

Built with

- prompt-kit — AI components

- shadcn/ui — core components

- motion-primitives — animated components

- vercel ai sdk — model integration, AI features

- supabase — auth and storage

License

Apache License 2.0

Notes

This is a beta release. The codebase is evolving and may change.

Related Templates

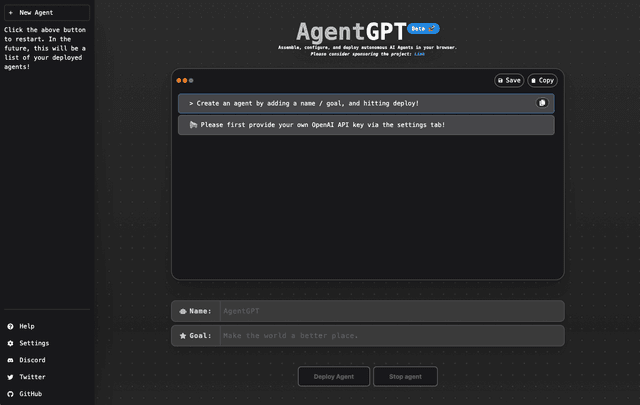

AgentGPT - AI Agents with Langchain & OpenAI

AI SDK Computer Use

Vercel x xAI Chatbot