
An interoperable, streaming-enabled, edge-ready software development kit for AI apps built with React and Svelte.

Over the past 6 months, AI companies like Scale, Jasper, Perplexity, Runway, Lexica, and Jenni have launched with Next.js and Vercel. Vercel helps accelerate your product development by enabling you to focus on creating value with your AI applications, rather than spending time building and maintaining infrastructure.

Today, we're launching new tools to improve the AI experience on Vercel.

- Vercel AI SDK: Easily stream API responses from AI models
- Chat & Prompt Playground: Explore models from OpenAI, Hugging Face, and more

## The Vercel AI SDK

The Vercel AI SDK is an open-source library designed to help developers build conversational, streaming, and chat user interfaces in JavaScript and TypeScript. The SDK supports React/Next.js and Svelte/SvelteKit, with support for Nuxt/Vue coming soon.

To install the SDK, enter the following command in your terminal:

```shell
npm install ai
```

You can also view the source code on GitHub.

## Built-in LLM Adapters

Choosing the right LLM for your application is crucial to building a great experience. Each model has its own tradeoffs and can be tuned in different ways to meet your requirements.

Vercel’s AI SDK embraces interoperability and includes first-class support for OpenAI, LangChain, and Hugging Face Inference. This means that regardless of your preferred AI model provider, you can use the Vercel AI SDK to create cutting-edge streaming UI experiences.
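Part of an adapter's job is translating a provider's wire format into plain text. As a rough illustration for OpenAI, whose streaming chat API sends server-sent events as `data: {json}` lines ending with `data: [DONE]`, here is a minimal parser sketch. The names `createSSETextParser` and `ChatCompletionChunk` are hypothetical, and this is not how `OpenAIStream` is actually implemented.

```typescript
// Hypothetical parser: an illustration, not the SDK's OpenAIStream implementation.
// Assumes OpenAI-style server-sent events: "data: {json}" lines, "data: [DONE]" at the end.
type ChatCompletionChunk = {
  choices?: { delta?: { content?: string } }[];
};

function createSSETextParser() {
  let buffer = "";
  let done = false;

  return {
    // Feed one raw chunk of the response body; returns any complete text deltas.
    feed(chunk: string): string[] {
      buffer += chunk;
      const lines = buffer.split("\n");
      // The last piece may be an incomplete line, so hold it for the next chunk.
      buffer = lines.pop() ?? "";

      const deltas: string[] = [];
      for (const raw of lines) {
        const line = raw.trim();
        // Skip blank separators, SSE comments, and anything after [DONE].
        if (done || !line.startsWith("data:")) continue;

        const data = line.slice("data:".length).trim();
        if (data === "[DONE]") {
          done = true;
          continue;
        }

        const event = JSON.parse(data) as ChatCompletionChunk;
        const text = event.choices?.[0]?.delta?.content;
        if (text) deltas.push(text);
      }
      return deltas;
    },
    get isDone(): boolean {
      return done;
    },
  };
}

// A JSON event split across two network chunks is reassembled before parsing.
const parser = createSSETextParser();
const first = parser.feed('data: {"choices":[{"delta":{"content":"Hel"}}]}\n\ndata: {"choices":[{"del');
const second = parser.feed('ta":{"content":"lo"}}]}\n\ndata: [DONE]\n\n');
console.log(first.join("") + second.join("")); // prints "Hello"
```

Buffering the trailing partial line is the key design choice: network chunks do not respect line or JSON boundaries, so parsing each chunk independently would fail on split events. A production adapter would also need error handling for malformed lines.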

```typescript
import { OpenAIStream, StreamingTextResponse } from 'ai'
import { Configuration, OpenAIApi } from 'openai-edge'

// Create an OpenAI API client (that's edge friendly!)
const config = new Configuration({
  apiKey: process.env.OPENAI_API_KEY
})
const openai = new OpenAIApi(config)

// IMPORTANT! Set the runtime to edge
export const runtime = 'edge'

export async function POST(req: Request) {
  // Extract the `messages` from the body of the request
  const { messages } = await req.json()

  // Ask OpenAI for a streaming chat completion given the prompt
  const response = await openai.createChatCompletion({
    model: 'gpt-3.5-turbo',
    stream: true,
    messages
  })

  // Convert the response into a friendly text-stream
  const stream = OpenAIStream(response)

  // Respond with the stream
  return new StreamingTextResponse(stream)
}
```

## Streaming-First UI Helpers

The Vercel AI SDK includes React and Svelte hooks for data fetching and rendering streaming text responses. These hooks enable real-time, dynamic data representation in your application, offering an immersive and interactive experience to your users.
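Rendering a streaming response means reading the body incrementally instead of waiting for it to finish. The sketch below shows that general pattern with the standard Fetch and Streams APIs; `readTextStream` is a hypothetical helper, not the SDK's API, and this is not how `useChat` is actually implemented.

```typescript
// Hypothetical helper: reads a streaming text response chunk by chunk and
// reports the accumulated text after each chunk, as a UI hook might.
async function readTextStream(
  response: Response,
  onChunk: (textSoFar: string) => void = () => {},
): Promise<string> {
  if (!response.body) throw new Error("Response has no body to stream");

  const reader = response.body.getReader();
  const decoder = new TextDecoder();
  let text = "";

  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    // stream: true keeps multi-byte UTF-8 characters that span chunks intact.
    const chunk = decoder.decode(value, { stream: true });
    if (chunk) {
      text += chunk;
      onChunk(text);
    }
  }

  // Flush any bytes the decoder is still holding.
  const tail = decoder.decode();
  if (tail) {
    text += tail;
    onChunk(text);
  }
  return text;
}
```

In a React component, `onChunk` would typically call a state setter so the message re-renders as each piece of text arrives.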

Building a rich chat or completion interface now takes just a few lines of code thanks to `useChat` and `useCompletion`:

```tsx
'use client'

import { useChat } from 'ai/react'

export default function Chat() {
  // useChat manages the message list and input state, and by default
  // posts the conversation to the /api/chat route
  const { messages, input, handleInputChange, handleSubmit } = useChat()

  return (
    <div>
      {/* Messages re-render as new tokens stream in */}
      {messages.map(m => (
        <div key={m.id}>
          {m.role}: {m.content}
        </div>
      ))}

      <form onSubmit={handleSubmit}>
        <label>
          Say something...
          <input value={input} onChange={handleInputChange} />
        </label>
      </form>
    </div>
  )
}
```

## Stream Helpers and Callbacks

We've also included callbacks for storing completed streaming responses in a database within the same request. Because the callbacks run on the server as the stream is produced, you can persist the prompt and the final completion without a separate round trip from the client.
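Callbacks like these fit naturally into the Web Streams model. As a rough sketch, assuming a stream of text tokens and using `withCallbacks` and `StreamCallbacks` as hypothetical names (not SDK exports), a `TransformStream` can forward each token to the client while accumulating the full completion for a final callback:

```typescript
// Hypothetical sketch of stream callbacks, modeled on the onStart / onToken /
// onCompletion names used by the SDK. Not the SDK's implementation.
interface StreamCallbacks {
  onStart?: () => Promise<void> | void;
  onToken?: (token: string) => Promise<void> | void;
  onCompletion?: (completion: string) => Promise<void> | void;
}

function withCallbacks(
  source: ReadableStream<string>,
  callbacks: StreamCallbacks,
): ReadableStream<string> {
  let completion = "";

  const transform = new TransformStream<string, string>({
    // Runs once when the transform is created, before any token is processed.
    async start() {
      await callbacks.onStart?.();
    },
    async transform(token, controller) {
      controller.enqueue(token); // forward the token to the client right away
      completion += token;
      await callbacks.onToken?.(token);
    },
    // Runs after the source is exhausted, so the full text is available.
    async flush() {
      await callbacks.onCompletion?.(completion);
    },
  });

  return source.pipeThrough(transform);
}
```

Because `flush` only runs after the last token has passed through, `onCompletion` receives the complete text, which is what makes saving it within the same request straightforward.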

```typescript
// savePromptToDatabase and saveCompletionToDatabase stand in for your own persistence helpers
export async function POST(req: Request) {
  // ... same as above

  // Convert the response into a friendly text-stream
  const stream = OpenAIStream(response, {
    onStart: async () => {
      // This callback is called when the stream starts
      // You can use this to save the prompt (the request's `messages`) to your database
      await savePromptToDatabase(messages)
    },
    onToken: async (token: string) => {
      // This callback is called for each token in the stream
      // You can use this to debug the stream or save the tokens to your database
      console.log(token)
    },
    onCompletion: async (completion: string) => {
      // This callback is called when the stream completes
      // You can use this to save the final completion to your database
      await saveCompletionToDatabase(completion)
    }
  })

  // Respond with the stream
  return new StreamingTextResponse(stream)
}
```

## Edge & Serverless Ready

Our SDK is integrated with Vercel products like Serverless and Edge Functions. You can deploy AI applications that scale instantly, stream generated responses, and are cost-effective.

With framework-defined infrastructure, you write application code in frameworks like Next.js and SvelteKit using the AI SDK, and Vercel converts this code into global application infrastructure.

## Chat & Prompt Playground

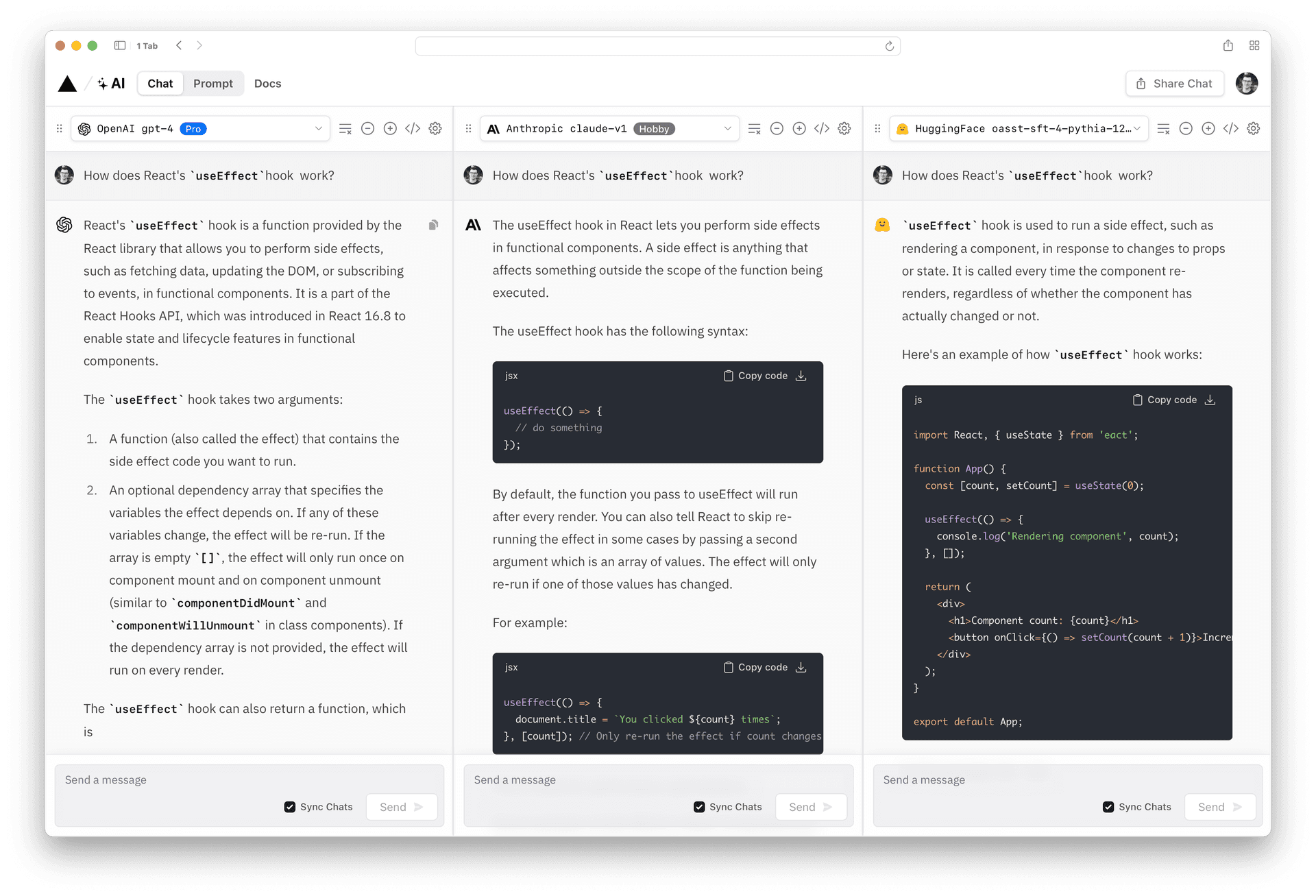

In late April, we launched play.vercel.ai, an interactive online prompt playground with 20 open-source and cloud LLMs.

The playground provides a valuable resource for developers to compare various language model results in real-time, tweak parameters, and quickly generate Next.js, Svelte, and Node.js code.

Today, we’ve added a new chat interface to the playground so you can simultaneously compare chat models side-by-side. We’ve also added code generation support for the Vercel AI SDK. You can now go from playground to chat app in just a few clicks.

## What’s Next?

We'll be adding more SDK examples in the coming weeks, as well as new templates built entirely with the AI SDK. Further, as new best practices for building AI applications emerge, we’ll lift them into the SDK based on your feedback.

Apply to the AI Accelerator

Apply to get access to over $850k in credits from Vercel and our AI partners.
