Large language models (LLMs) like OpenAI's GPT-4 and Anthropic's Claude are incredibly good at generating coherent and contextually relevant text from a given prompt. They can assist in a wide range of tasks, such as writing, translation, and even conversation.

Despite this, LLMs have limitations. In this guide, we'll go over these constraints and explain how Retrieval Augmented Generation (RAG) can alleviate these pains. We'll also dive into the ways you can build better chat experiences with this technique.

As groundbreaking as LLMs may be, they have a few limitations:

- They're limited by the amount of training data they have access to. For example, GPT-4 has a training data cutoff date, which means that it doesn't have access to information beyond that date. This limitation affects the model's ability to generate up-to-date and accurate responses.

- They're generic and lack subject-matter expertise. LLMs are trained on a large dataset that covers a wide range of topics, but they don't possess specialized knowledge in any particular field. This can lead to hallucinations or inaccurate information when they are asked about specific subject areas.

- Citations are tricky. LLMs don't have a reliable way of returning the exact location of the text where they retrieved the information. This exacerbates the issue of hallucination, as they may not be able to provide proper attribution or verify the accuracy of their responses. Additionally, the lack of specific citations makes it difficult for users to fact-check or delve deeper into the information provided by the models.

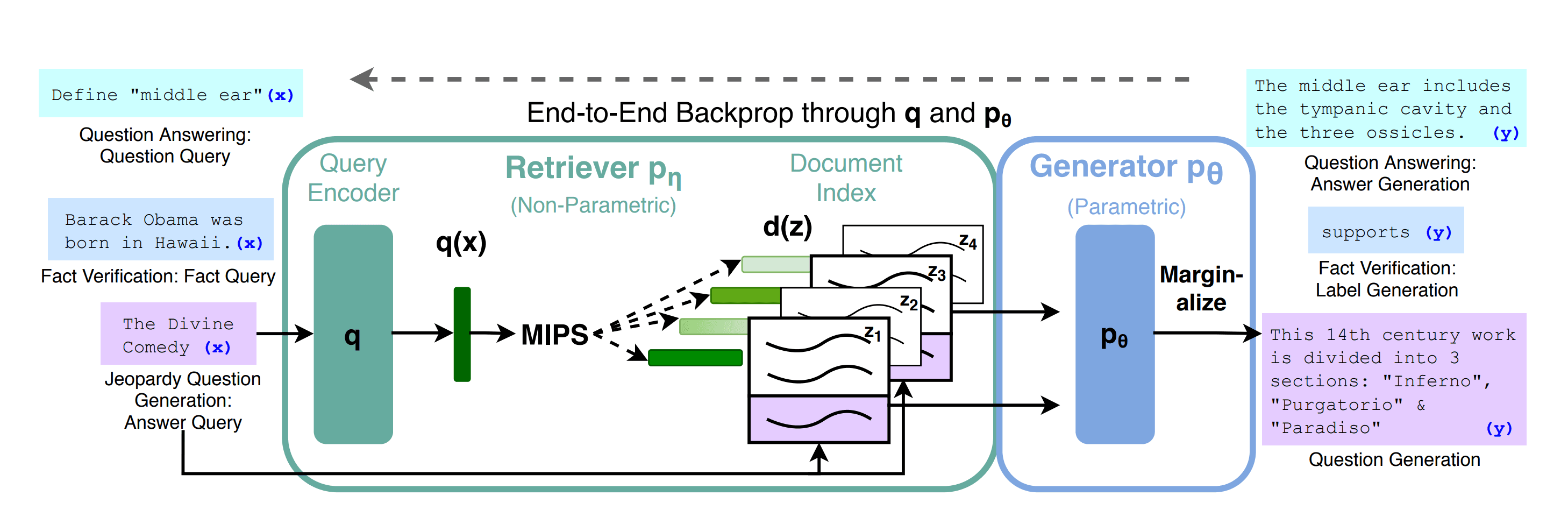

To address these problems, researchers at Meta published a paper about a technique called Retrieval Augmented Generation (RAG), which adds an information retrieval component to the text generation that LLMs are already good at. Because the retrieved documents can be changed without retraining the model, the knowledge the LLM draws on can be adjusted efficiently, making its responses more accurate and up-to-date.

Here's how RAG works on a high level:

- RAG puts together a pre-trained system that finds relevant information (*retriever*) with another system that generates text (*generator*).

- Then, when the user inputs a question (*query*), the *retriever* uses a technique called "Maximum Inner Product Search (MIPS)" to find the most relevant documents.

- The information from these documents is then fed into the *generator* to create the final response. This also allows for citations, which let the end user verify the sources and delve deeper into the information provided.
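The retrieval step above can be sketched in a few lines of TypeScript. This is a minimal, exhaustive version of maximum inner product search, written for illustration only: the `EmbeddedDoc` type, the `retrieveTopK` function, and the hand-written three-dimensional vectors are all assumptions, not part of any library. In practice the embeddings would come from an embedding model, and a vector store would typically use an approximate index rather than scoring every document.

```typescript
// A document paired with its embedding vector (hypothetical type).
// The vectors below are toy values chosen by hand for the example.
interface EmbeddedDoc {
  id: string;
  text: string;
  embedding: number[];
}

// Inner (dot) product of two equal-length vectors.
function innerProduct(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("Vectors must have the same length");
  }
  return a.reduce((sum, value, i) => sum + value * (b[i] ?? 0), 0);
}

// Exhaustive maximum inner product search:
// score every document against the query and keep the k highest scores.
function retrieveTopK(
  query: number[],
  docs: EmbeddedDoc[],
  k: number
): EmbeddedDoc[] {
  return docs
    .map((doc) => ({ doc, score: innerProduct(query, doc.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((scored) => scored.doc);
}

const corpus: EmbeddedDoc[] = [
  { id: "a", text: "RAG combines a retriever with a generator.", embedding: [0.9, 0.1, 0.0] },
  { id: "b", text: "Next.js is a React framework.", embedding: [0.1, 0.8, 0.3] },
  { id: "c", text: "Retrievers rank documents by similarity.", embedding: [0.7, 0.2, 0.4] },
];

// Stand-in for the embedded user query.
const queryEmbedding = [1, 0, 0];
const topDocs = retrieveTopK(queryEmbedding, corpus, 2);
console.log(topDocs.map((doc) => doc.id));
```

The scores here are 0.9, 0.1, and 0.7, so documents `a` and `c` are returned in that order. The text of those documents is what would be handed to the generator.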

Think of RAG as a highly efficient search engine with a built-in content writer.

The retriever in RAG is like a database index. When you input a query, it doesn't scan the entire database (or in this case, the document corpus). Instead, it uses an index over the documents, playing a role similar to a B-tree or hash index, to quickly locate the most relevant documents without reading every record.

Once the retriever has found the relevant documents, it's like having the raw data retrieved from a database. But raw data isn't always useful or easy to understand. That's where the generator comes in. It's like a built-in application layer that takes the raw data and transforms it into a user-friendly format. In this case, it generates a coherent and contextually relevant response to the query.
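One common way to hand the "raw data" to the generator is to place the retrieved passages in the prompt ahead of the user's question. The sketch below assumes this prompt-stuffing approach; `RetrievedPassage`, `buildPrompt`, and the instruction wording are hypothetical choices for illustration, and the resulting string would be sent to whichever LLM API the application uses.

```typescript
// A passage returned by the retriever (hypothetical type).
interface RetrievedPassage {
  source: string;
  text: string;
}

// Assemble a prompt that asks the model to answer from the given context.
// Passages are numbered so the model can refer to them as [1], [2], ...
function buildPrompt(question: string, passages: RetrievedPassage[]): string {
  const context = passages
    .map((passage, i) => `[${i + 1}] (${passage.source}) ${passage.text}`)
    .join("\n");

  return [
    "Answer the question using only the context below.",
    "If the context is not sufficient, say that you don't know.",
    "",
    "Context:",
    context,
    "",
    `Question: ${question}`,
  ].join("\n");
}

const promptText = buildPrompt("What does the retriever do?", [
  { source: "rag-overview.md", text: "The retriever finds relevant documents." },
  { source: "rag-overview.md", text: "The generator writes the final response." },
]);
console.log(promptText);
```

Instructing the model to rely only on the supplied context is what ties the generated answer back to the retrieved documents rather than to the model's training data alone.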

Finally, the citations are like metadata about the source of the data, allowing for traceability and further exploration if needed. So, RAG is like a search engine with a built-in content writer, providing efficient, relevant, and user-friendly responses.
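To make the "citations as metadata" idea concrete, here is a small sketch that maps citation markers in a generated answer back to their sources. It assumes the generator was prompted to cite numbered passages with markers such as `[1]`; the `SourceDoc` type, the `citedSources` function, and the example.com URLs are placeholders invented for this example.

```typescript
// Metadata kept alongside each retrieved passage (hypothetical type).
interface SourceDoc {
  title: string;
  url: string;
}

// Find markers like [1] or [2] in the answer and return the matching
// sources, in order of first appearance and without duplicates.
// Markers that do not correspond to a known source are ignored.
function citedSources(answer: string, sources: SourceDoc[]): SourceDoc[] {
  const pattern = /\[(\d+)\]/g;
  const seen = new Set<number>();
  const cited: SourceDoc[] = [];
  let match: RegExpExecArray | null;

  while ((match = pattern.exec(answer)) !== null) {
    const index = Number(match[1]) - 1; // markers are 1-based
    const source = sources[index];
    if (source !== undefined && !seen.has(index)) {
      seen.add(index);
      cited.push(source);
    }
  }
  return cited;
}

const sources: SourceDoc[] = [
  { title: "RAG overview", url: "https://example.com/rag" },
  { title: "Vector search basics", url: "https://example.com/vectors" },
  { title: "Prompt design notes", url: "https://example.com/prompts" },
];

const answer =
  "RAG pairs a retriever with a generator [1]. Prompts carry the context [3][1]. See also [7].";
console.log(citedSources(answer, sources).map((source) => source.title));
```

In this example the answer cites sources 1 and 3, and the unknown marker `[7]` is dropped, so a UI could render two links for the reader to follow.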

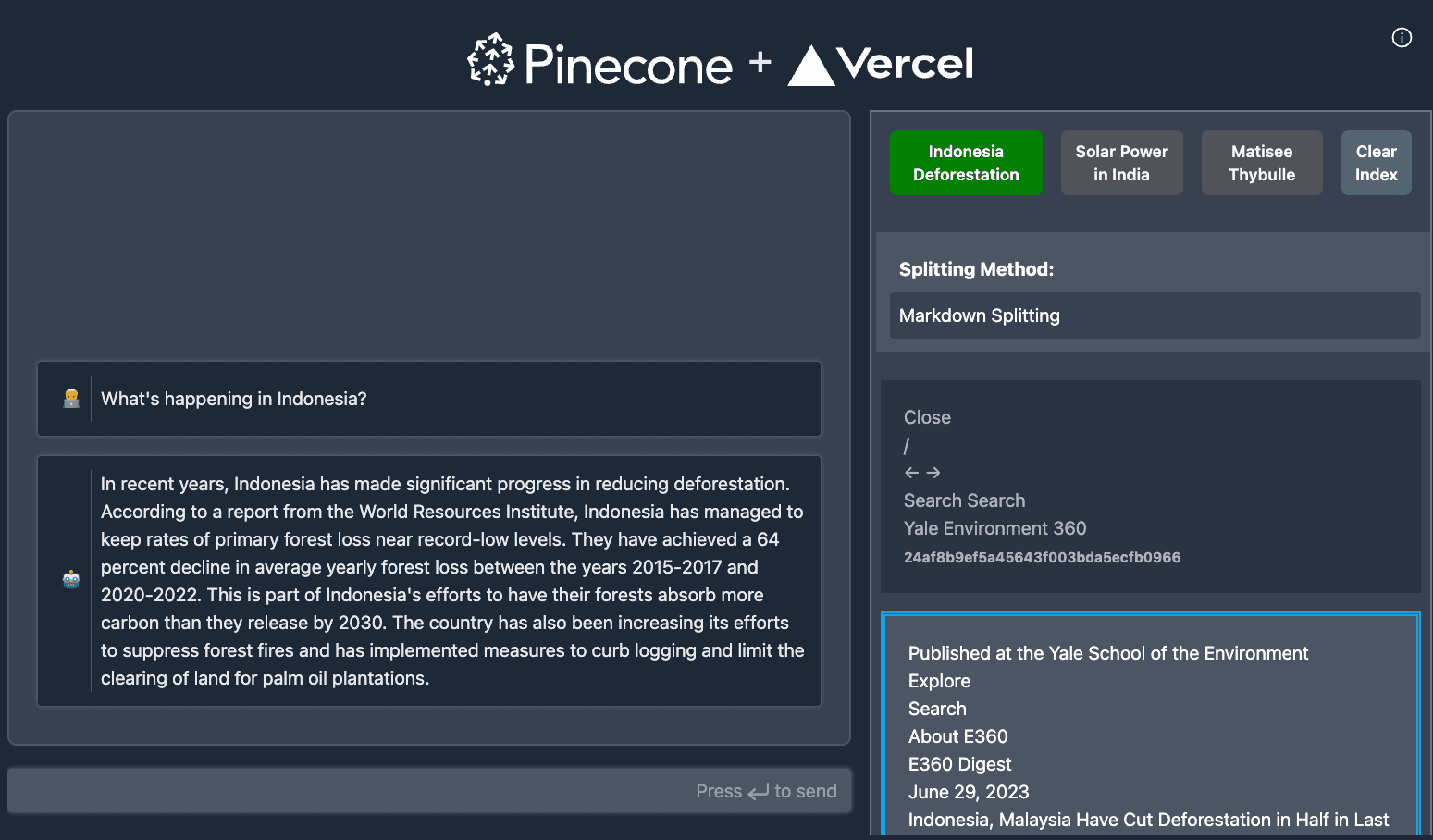

To illustrate how you can apply RAG in a real-world application, here's a chatbot template that uses RAG with a Pinecone vector store and the Vercel AI SDK to create an accurate, evidence-based chat experience.

You can deploy the template on Vercel with one click, or run the following command to develop it locally:

    npx create-next-app pinecone-vercel-starter --example "https://github.com/pinecone-io/pinecone-vercel-starter"

The chatbot combines retrieval-based and generative models to deliver accurate responses. The application integrates Vercel's AI SDK for efficient chatbot setup and streaming in edge environments. The guide section of the template covers the following steps:

- Setting up a Next.js application

- Creating a chatbot frontend component

- Building an API endpoint using OpenAI's API for response generation

- Progressively enhancing the chatbot with context-based capabilities, including context seeding, context retrieval, and displaying context segments.
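Context seeding usually involves splitting source documents into smaller pieces before they are embedded and stored. The sketch below shows one simple way to do that, using fixed-size word windows with overlap. It is an illustrative assumption, not the template's actual implementation, which may split documents differently; `chunkWords` and its parameters are hypothetical names.

```typescript
// Split text into chunks of at most `chunkSize` words, where consecutive
// chunks share `overlap` words so that sentences cut at a boundary still
// appear with some surrounding context.
function chunkWords(text: string, chunkSize: number, overlap: number): string[] {
  if (chunkSize <= 0 || overlap < 0 || overlap >= chunkSize) {
    throw new Error("Require chunkSize > 0 and 0 <= overlap < chunkSize");
  }
  const words = text.split(/\s+/).filter((word) => word.length > 0);
  const chunks: string[] = [];
  const step = chunkSize - overlap;

  for (let start = 0; start < words.length; start += step) {
    chunks.push(words.slice(start, start + chunkSize).join(" "));
    // Stop once the current window has reached the end of the text.
    if (start + chunkSize >= words.length) {
      break;
    }
  }
  return chunks;
}

const chunks = chunkWords("one two three four five six seven", 4, 1);
console.log(chunks);
```

With a chunk size of 4 and an overlap of 1, the seven-word input yields two chunks that share the word "four". Each chunk would then be embedded and written to the vector store so it can be found during context retrieval.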

By following the tutorial, you'll build a context-aware chatbot with an improved user experience.

By integrating Retrieval Augmented Generation into chat applications like the Pinecone chatbot template above, developers can reduce hallucinations in their AI models and create more accurate and evidence-based conversational experiences.

If you're interested in learning more about RAG, check out this article about integrating RAG with LangChain and a Supabase vector database.