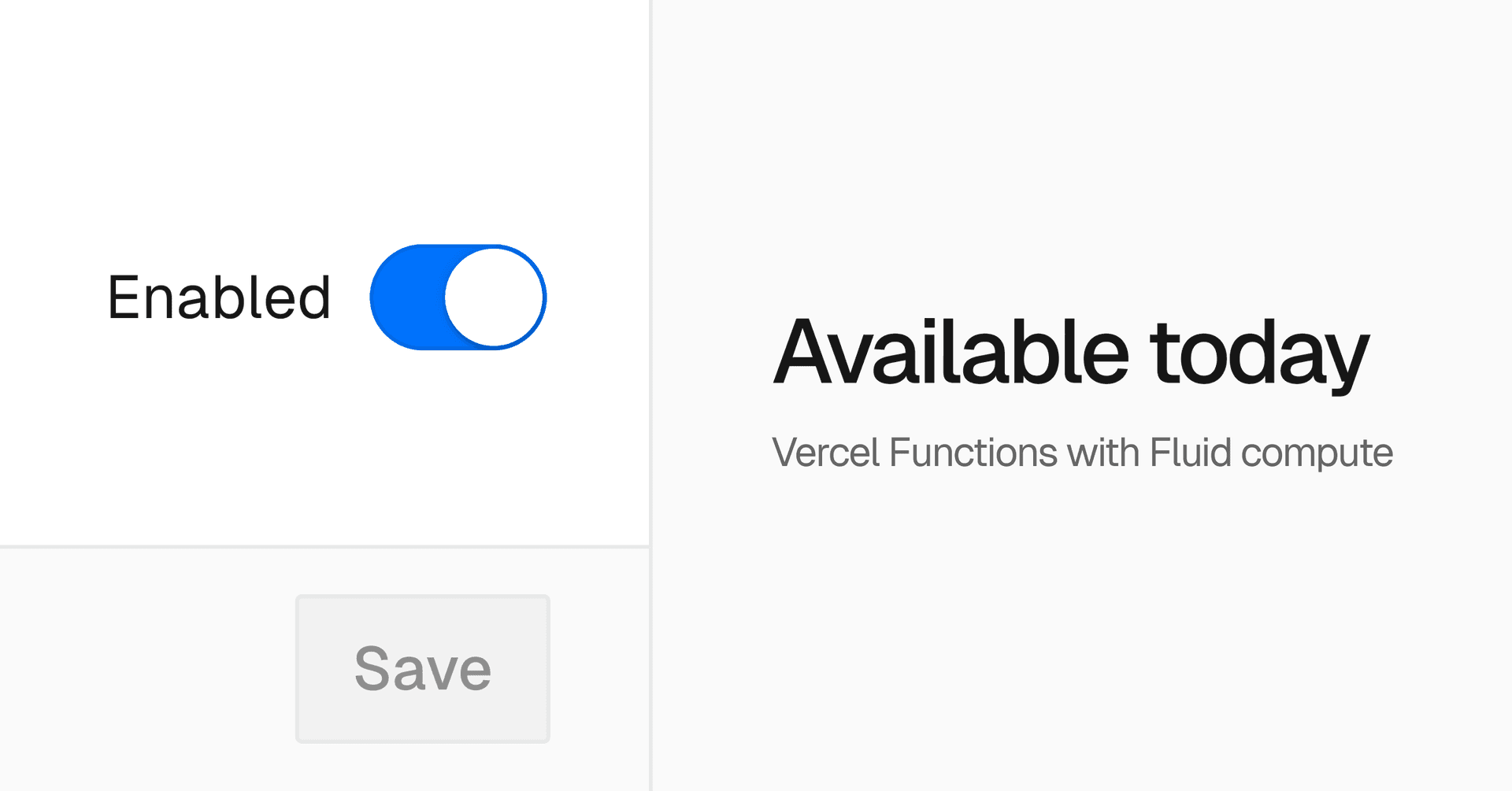

Vercel Functions can now run on Fluid compute, bringing improvements in efficiency, scalability, and cost effectiveness. Fluid is now available for all plans.

What’s New

Optimized concurrency: Functions can handle multiple requests per instance, reducing idle time and lowering compute costs by up to 85% for high-concurrency workloads

Cold start protection: Fewer cold starts with smarter scaling and pre-warmed instances

Optimized scaling: Functions scale within existing instances before new instances are added, moving beyond the traditional 1:1 invocation-to-instance model

Extended function lifecycle: Use `waitUntil` to run background tasks after responding to the client

Runaway cost protection: Detects and stops infinite loops and excessive invocations

Multi-region execution: Requests are routed to the nearest of your selected compute regions for better performance

Node.js and Python support: No restrictions on native modules or standard libraries
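To illustrate the extended function lifecycle item above, here is a minimal sketch of the respond-first, finish-later pattern. On Vercel, `waitUntil` would typically be imported from the `@vercel/functions` package. In this sketch it is passed in as a parameter so the code runs anywhere. The names `recordAnalytics`, `handleRequest`, and the plain-object response shape are hypothetical and used only for illustration.

```typescript
// Sketch: respond first, let background work finish afterwards.
// On Vercel, `waitUntil` would typically come from the platform package:
//   import { waitUntil } from "@vercel/functions";
// Here it is injected as a parameter so the sketch is self-contained.

type WaitUntil = (promise: Promise<unknown>) => void;

interface SimpleResponse {
  status: number;
  body: string;
}

// Hypothetical background task (an assumption, not part of any Vercel API).
async function recordAnalytics(path: string, log: string[]): Promise<void> {
  // Simulate slow I/O such as writing to an analytics store.
  await new Promise<void>((resolve) => setTimeout(resolve, 10));
  log.push(`visited ${path}`);
}

// Hypothetical handler: hands the background promise to `waitUntil`
// and returns the response without awaiting that promise.
function handleRequest(
  path: string,
  waitUntil: WaitUntil,
  log: string[],
): SimpleResponse {
  waitUntil(recordAnalytics(path, log));
  return { status: 200, body: `Hello from ${path}` };
}
```

The handler never awaits the background promise itself, so the client is not kept waiting. Registering the promise with `waitUntil` is what lets the platform keep the instance alive until the work settles.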

Enable Fluid today or learn more in our blog and documentation.