
Vercel's Edge Network enables robust personalization without compromising on speed.

The world's best websites load before you've finished this sentence.

Those websites can't be purely static, but delivering both performance and personalization to a global user base has historically been complex.

The primary goal of Vercel's Frontend Cloud is to collect industry-best practices into one easy-to-use workflow, integrating new and better solutions as they come.

In this article, we'll look at why speed and personalization matter to your business, and how the Frontend Cloud gives you abundant options for both.


Why do you need speed?

There are many reasons to care about your application's load speed, but how do you know when it's fast enough?

Let's break it down.

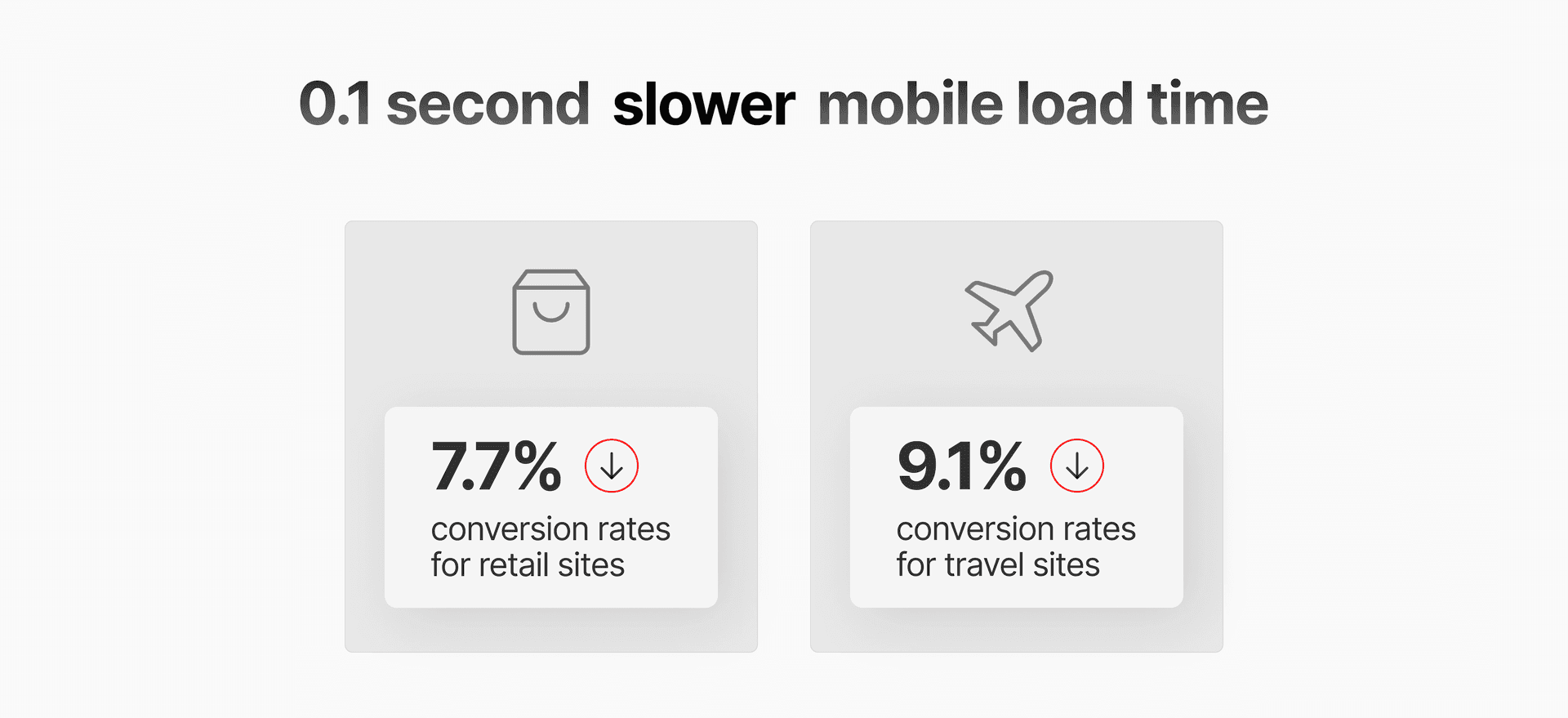

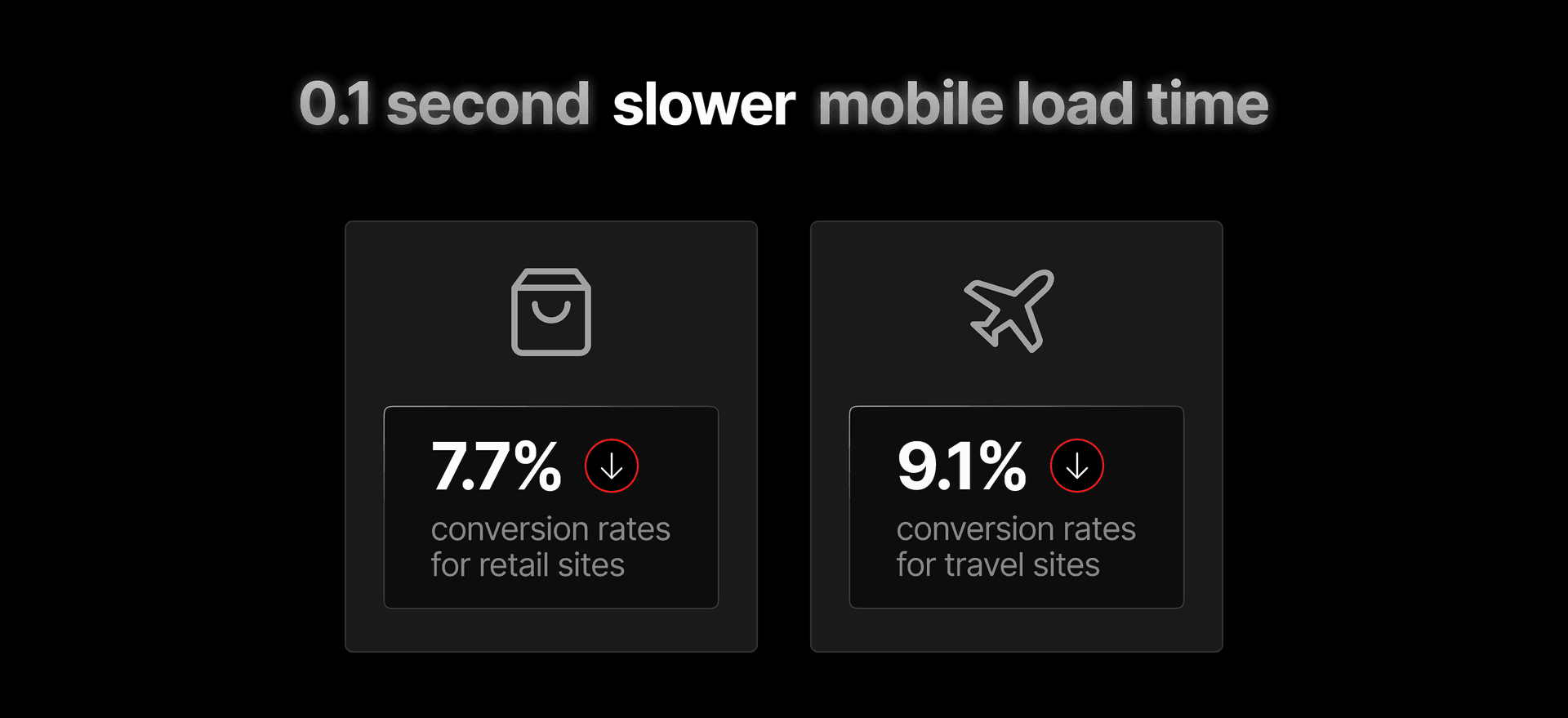

Milliseconds quite literally make millions: every 100ms shaved off load time is tied to an 8% lift in conversion rate. Modern users won't stare at a blank screen for long, and their tolerance shrinks every year.

Google and other search providers rank pages based on relevancy, but a large portion of that relevancy comes from speed. Search engines rank performant, accessible, bug-free pages higher because doing so improves their own UX.

Many of the top-ranking applications in the world load in one second or less. If you're not close to that speed, you're likely not the first result. If your load time isn't under five seconds, you may not appear on the first page of results at all, except for queries highly specific to your brand.

Even as AI changes the search industry, it’s important to realize that AI’s ability to access information on the current web largely relies on existing search engines, such as Bing and Google.

In other words, if your site isn't fast, users can only find you through paid advertising and word of mouth. Even when they do find your site, whether they stick around depends partly on load times.

Adopting the Frontend Cloud, with its speed and potential to scale, uncaps your business’s potential for organic virality and drives users to convert.

Why does dynamic personalization matter?

All that discoverability, all the speed, is only half the story. Let’s face it: 65% of business comes from existing customers, and the best way to ensure application stickiness is with deep personalization.

Users are loyal to brands that they feel “get them.” They recommend these experiences to friends. In a world of countless anonymous digital experiences, a personalized, customizable experience sets your app apart from your competitors.

Historically, personalization has been at odds with speed. Personalization requires data specific to each user, which a typical frontend can't cache.

Vercel's Frontend Cloud solves these challenges, giving your application unlimited access to external data at millisecond speeds. There's no other solution that lets your apps be this fast and this dynamic.

If you’re discoverable through performance and you also delight users through personalization and good UX, your app stands apart from a crowded market.

So, the question is, how do we get there? Let's look at the Frontend Cloud's best answers.


What is a content delivery network (CDN)?

When a user in Tokyo requests data from a server in New York City, a single round trip typically takes somewhere around 150 to 200ms. That might not sound too bad, but the problem with requests is that they stack up.

Each user interaction with the server (even within the same webpage, if it needs more server data) costs at least one such round trip, and opening a new connection adds more for the TCP and TLS handshakes. A couple of round trips can easily add up to 400ms of noticeable lag, on top of whatever time it takes to access data, process it, and render the result for the user.

Keep in mind that the best websites in the world take ~1000ms to be fully interactive.
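To make the stacking concrete, here is a small sketch of the arithmetic. The round-trip times and the 1000ms budget are illustrative inputs rather than measurements, and `totalLatencyMs` and `roundTripsWithinBudget` are hypothetical helper names.

```typescript
// Illustrative latency arithmetic: sequential round trips add up linearly.
// All inputs are assumptions supplied by the caller, not measured values.

/** Total network wait for `roundTrips` sequential requests at a given round-trip time. */
function totalLatencyMs(roundTrips: number, rttMs: number): number {
  return roundTrips * rttMs;
}

/** How many sequential round trips fit inside a latency budget. */
function roundTripsWithinBudget(budgetMs: number, rttMs: number): number {
  return Math.floor(budgetMs / rttMs);
}

// Assume a ~200ms Tokyo-to-New York round trip and a ~1000ms interactivity budget.
console.log(totalLatencyMs(3, 200)); // 600
console.log(roundTripsWithinBudget(1000, 200)); // 5

// Assume instead a ~20ms round trip to a nearby server.
console.log(roundTripsWithinBudget(1000, 20)); // 50
```

The takeaway is that distance sets a per-request tax: at intercontinental round-trip times only a handful of sequential requests fit in a one-second budget, while moving the work closer to the user multiplies that headroom.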

This is why Content Delivery Networks (CDNs) were originally invented. By placing data centers around the world and letting businesses cache their static web data inside them, CDNs often shaved seconds off websites' load times.

But a typical CDN has a limitation: it only stores static web assets, so it can't help with data that changes from one request to the next.

If you need to serve any dynamic content that can't be cached, users will have to wait for their request to travel to the application origin server and back to their location.
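What a CDN may or may not store is typically signaled with standard HTTP `Cache-Control` headers. The sketch below is a hypothetical helper (`cacheControlFor` and the `ContentKind` categories are illustrative names, not part of any framework) that maps each kind of response to a header: long-lived and `immutable` for fingerprinted static assets, a short shared-cache lifetime for pages that are the same for everyone, and `private, no-store` for per-user data that a shared cache must not keep.

```typescript
// Hypothetical categories of responses an app might serve.
type ContentKind = "fingerprinted-asset" | "shared-page" | "per-user";

// Standard Cache-Control values for each kind. Directive meanings come from
// RFC 9111 (HTTP Caching) and RFC 8246 (`immutable`).
const CACHE_CONTROL: Record<ContentKind, string> = {
  // The file name changes whenever content changes, so caches may keep it for a year.
  "fingerprinted-asset": "public, max-age=31536000, immutable",
  // Identical for every visitor: browsers revalidate, shared caches keep it for 60s.
  "shared-page": "public, max-age=0, s-maxage=60",
  // Personalized: no cache may store it, so each request travels to the origin.
  "per-user": "private, no-store",
};

function cacheControlFor(kind: ContentKind): string {
  return CACHE_CONTROL[kind];
}

console.log(cacheControlFor("per-user")); // private, no-store
```

The `per-user` row is exactly the case described above: once a response is marked uncacheable, a traditional CDN can only pass it through to the origin and back.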

Vercel's Edge Network

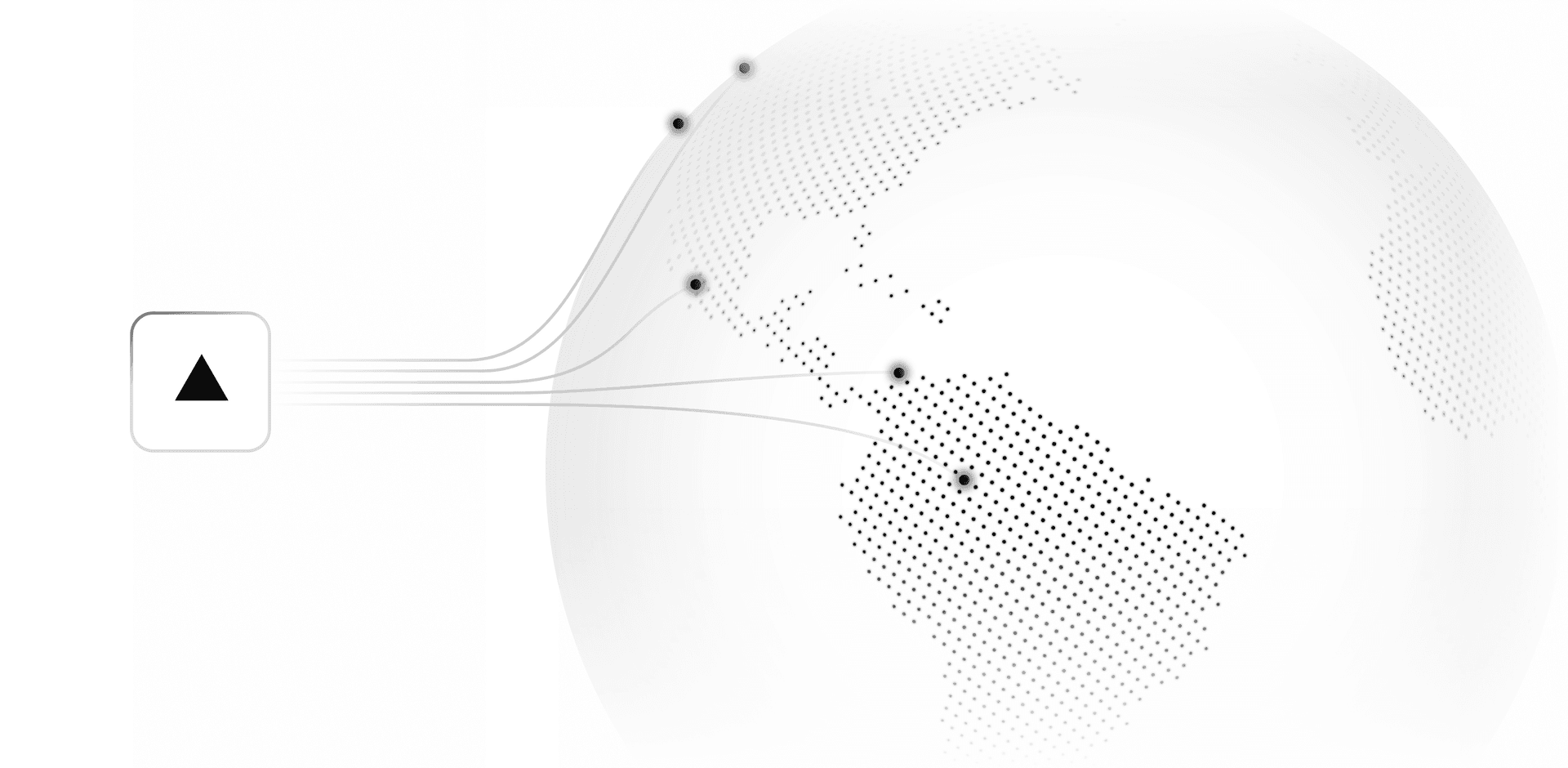

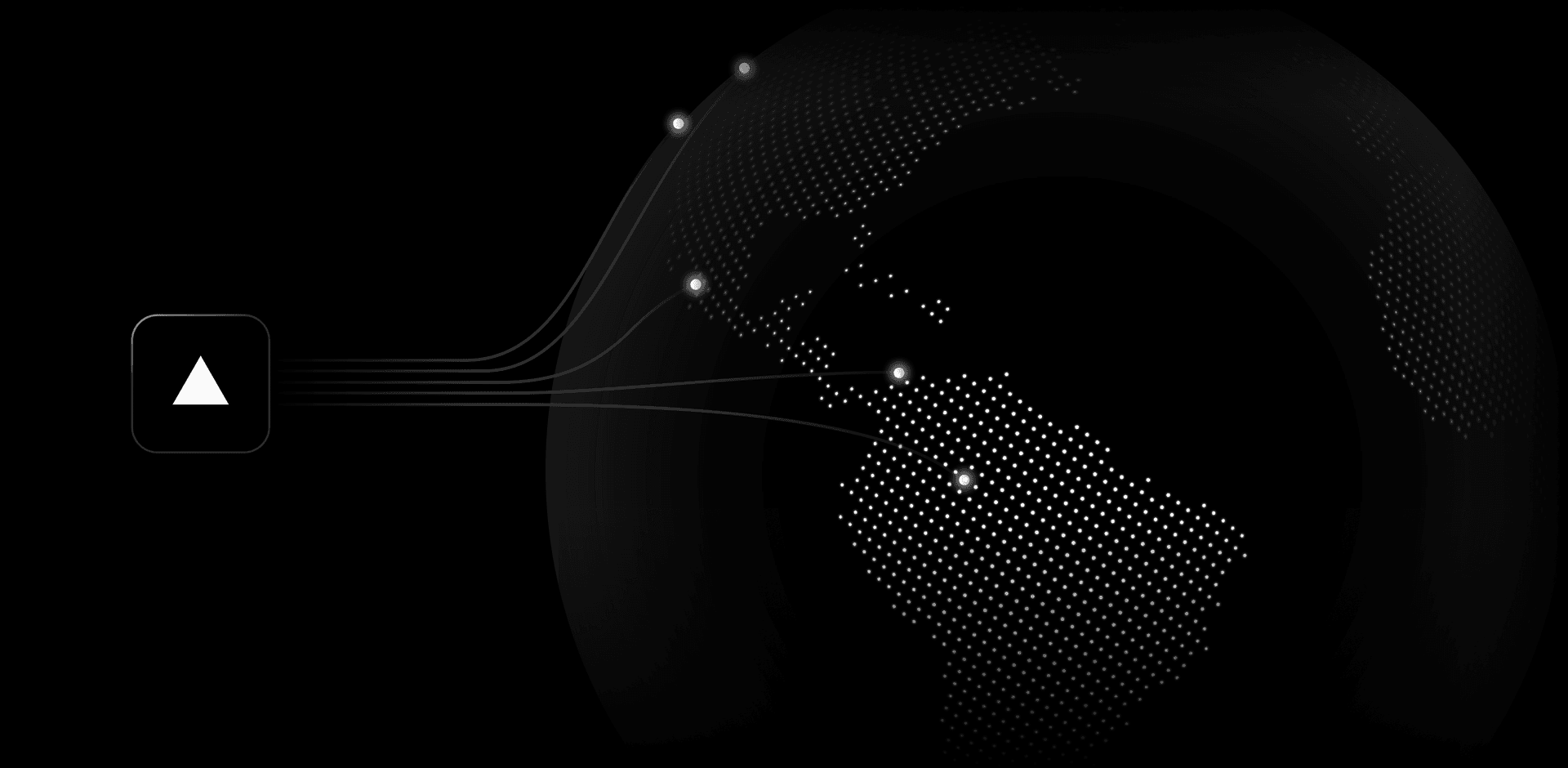

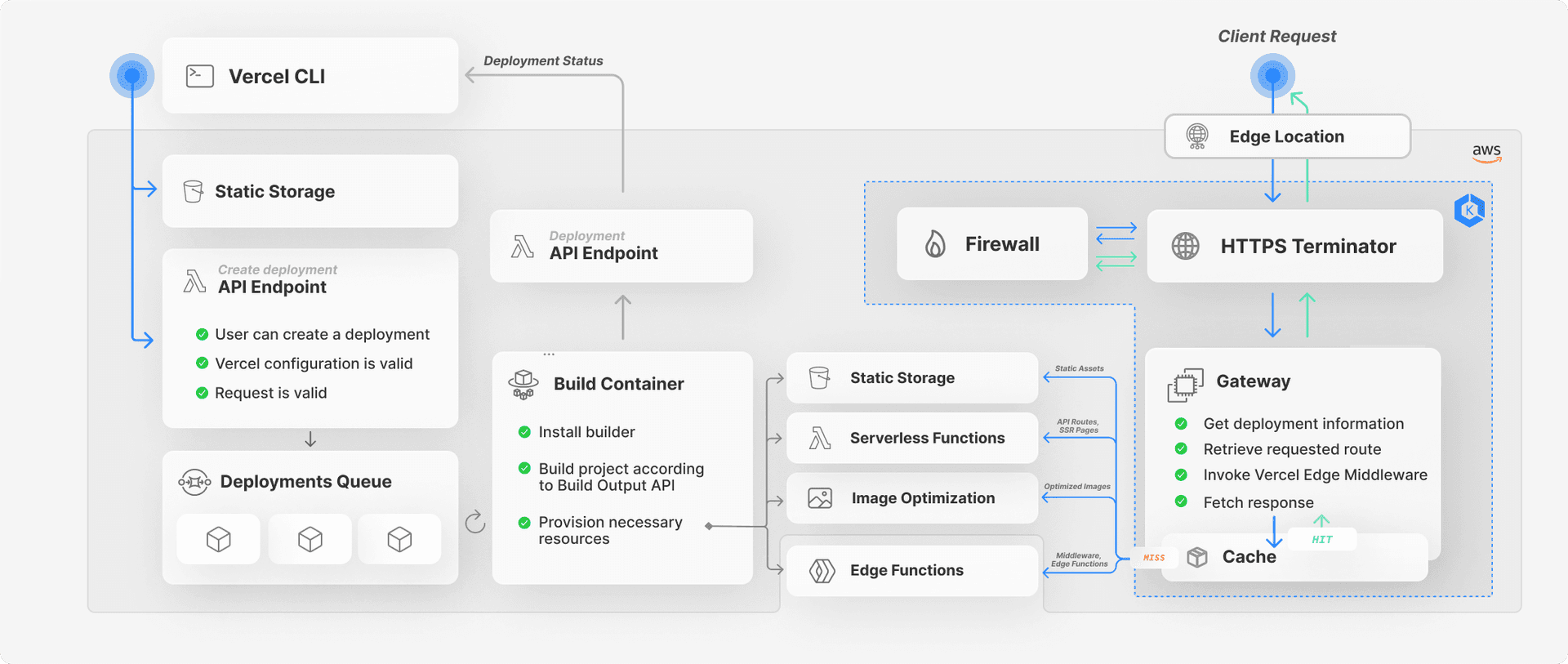

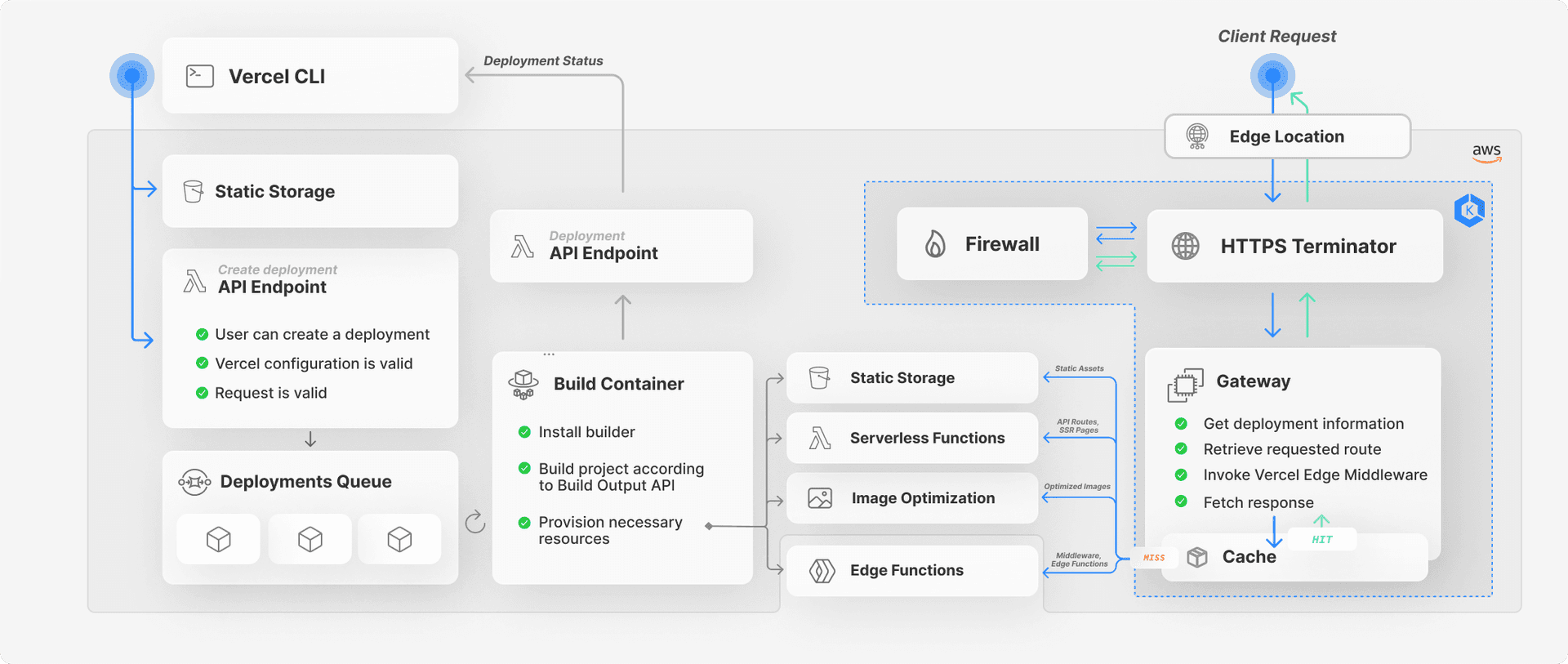

In order to optimize application performance and reliability for your end users, Vercel's Frontend Cloud uses globally distributed servers to asynchronously compute and granularly cache the result of user requests. We call this our Edge Network.

The Edge Network's caching serves two goals: lower latency and consistent availability, so your application can keep performing even if external data sources experience downtime.

User requests don't have to travel back to the origin server to be handled. Instead, the Edge Network handles them, automatically provisioning additional infrastructure and communicating with other servers as needed.

This means that the Edge Network can automatically cache (or re-cache) the results of any dynamic user request in a static format to be quickly served to the next user.
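One way to picture "cache, then re-cache behind the scenes" is the stale-while-revalidate pattern, which also exists as a standard `Cache-Control` directive (RFC 5861). The class below is a simplified in-memory model, not Vercel's implementation; `SwrCache`, `ttlMs`, and the injectable `now` clock are hypothetical names. Fresh entries are served directly, stale entries are served immediately while a single refresh runs in the background, and if that refresh fails the stale copy keeps being served, which mirrors the availability point above.

```typescript
type Loader<T> = () => Promise<T>;

interface Entry<T> {
  value: T;
  storedAt: number; // timestamp (ms) when the value was cached
  refreshing: boolean; // true while a background refresh is in flight
}

// Simplified stale-while-revalidate cache (illustrative model only).
class SwrCache<T> {
  private readonly entries = new Map<string, Entry<T>>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  constructor(ttlMs: number, now: () => number = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  async get(key: string, load: Loader<T>): Promise<T> {
    const entry = this.entries.get(key);
    if (!entry) {
      // Cold miss: only the very first visitor waits for the origin.
      const value = await load();
      this.entries.set(key, { value, storedAt: this.now(), refreshing: false });
      return value;
    }
    const isStale = this.now() - entry.storedAt >= this.ttlMs;
    if (isStale && !entry.refreshing) {
      // Serve the stale copy right away and refresh in the background.
      entry.refreshing = true;
      load()
        .then((value) => {
          this.entries.set(key, { value, storedAt: this.now(), refreshing: false });
        })
        .catch(() => {
          // Origin failed: keep serving the stale copy; a later request retries.
          entry.refreshing = false;
        });
    }
    return entry.value;
  }
}
```

In this model, the request that notices staleness still gets an instant answer, and the next request after the refresh completes sees the new value.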

Granular caching: Incremental Static Regeneration

The Edge Network can also cache any content piecemeal, as defined within your framework code. We call this practice Incremental Static Regeneration (ISR).

For instance, in the Next.js App Router, caching and revalidation can happen specifically at the component level. This allows you to build experiences that seamlessly merge static and dynamic content—all while keeping external data fetches on the server.
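In the Next.js App Router, this per-piece control is expressed with options such as the route segment config `export const revalidate = 60` or a per-request `fetch(url, { next: { revalidate: 60 } })`. Rather than depend on Next.js, the sketch below models the idea in plain TypeScript: each piece of a page carries its own revalidation window, and only expired pieces are regenerated. `Segment` and `segmentsToRegenerate` are hypothetical names; the `false` / `0` conventions follow the meaning Next.js gives `revalidate`.

```typescript
// Hypothetical model of per-piece caching. Each segment declares its own window:
// `false` = never revalidate (fully static), 0 = render on every request (fully dynamic).
interface Segment {
  id: string;
  revalidate: number | false; // seconds
  generatedAt: number; // seconds since epoch when the segment was last generated
}

// Return the ids of segments whose cached output has expired at `nowSec`.
function segmentsToRegenerate(segments: Segment[], nowSec: number): string[] {
  return segments
    .filter((s) => {
      if (s.revalidate === false) return false; // static: reuse the build output
      return nowSec - s.generatedAt >= s.revalidate; // revalidate: 0 qualifies on every request
    })
    .map((s) => s.id);
}

const page: Segment[] = [
  { id: "header", revalidate: false, generatedAt: 0 },
  { id: "product-list", revalidate: 60, generatedAt: 100 },
  { id: "cart", revalidate: 0, generatedAt: 100 },
];

console.log(segmentsToRegenerate(page, 130)); // [ 'cart' ]
console.log(segmentsToRegenerate(page, 200)); // [ 'product-list', 'cart' ]
```

The header is served from cache indefinitely, the product list is rebuilt at most once a minute, and only the cart does per-request work.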

Partial Prerendering then goes a step further: it serves a prerendered static shell immediately and streams in the dynamic parts, keeping the user's Time to First Byte (TTFB) as small as possible.
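The idea behind Partial Prerendering can be modeled as "send the prerendered shell first, compute the dynamic holes afterward." The generator below is a simplified, synchronous model with hypothetical names (`streamPage`, `DynamicPart`); real PPR streams React Suspense boundaries over HTTP, which this sketch does not attempt. What it does show is ordering: the first chunk exists before any per-request work runs, which is what keeps TTFB low.

```typescript
// A dynamic "hole" in the page: its content is computed only when needed.
interface DynamicPart {
  slot: string;
  render: () => string;
}

// Yield the static shell immediately, then each dynamic part in order.
function* streamPage(shell: string, parts: DynamicPart[]): Generator<string> {
  yield shell; // prerendered ahead of time: no per-request work before this chunk
  for (const part of parts) {
    // Per-request work runs only after the shell has been handed off.
    yield `<template data-slot="${part.slot}">${part.render()}</template>`;
  }
}

const calls: string[] = [];
const stream = streamPage('<main><nav>Home</nav><div id="cart"></div></main>', [
  {
    slot: "cart",
    render: () => {
      calls.push("cart");
      return "3 items";
    },
  },
]);

console.log(stream.next().value); // the static shell
console.log(calls.length); // 0 (no dynamic work has run yet)
console.log(stream.next().value); // <template data-slot="cart">3 items</template>
```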

When you have the power to choose the caching behavior of each piece of your application, you can ensure users receive top-speed, cached data in far more cases than not.

Caching isn’t all about UX, though. By leveraging serverless functions in your codebase without having to worry about speed or concurrency, your team gets a far better developer experience (DX) as well.

ISR, which does its caching after the build, drastically cuts build times for sites that previously had to statically generate every dynamic page up front. ISR can also let content authors preview and publish new content without developer intervention.
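The build-time saving comes from not rendering every page up front. In Next.js this roughly corresponds to returning only a subset of paths from `generateStaticParams` and letting the remaining paths render on their first request. The sketch below models that in plain TypeScript with a hypothetical `LazyPageStore` and a render counter; it illustrates the idea and is not how Next.js is implemented.

```typescript
// Hypothetical model of on-demand static generation.
class LazyPageStore {
  private readonly pages = new Map<string, string>();
  private readonly render: (path: string) => string;
  renders = 0; // how many times a page has actually been rendered

  constructor(render: (path: string) => string) {
    this.render = render;
  }

  // Build step: prerender only the paths you expect to be popular.
  prebuild(paths: string[]): void {
    for (const p of paths) this.generate(p);
  }

  // Request step: serve from the store, generating on the first request for a path.
  get(path: string): string {
    return this.pages.get(path) ?? this.generate(path);
  }

  private generate(path: string): string {
    this.renders++;
    const html = this.render(path);
    this.pages.set(path, html);
    return html;
  }
}

const store = new LazyPageStore((path) => `<h1>${path}</h1>`);
store.prebuild(["/", "/pricing"]); // the build renders 2 pages, not the whole catalog
store.get("/products/42"); // rendered on its first request, then kept
store.get("/products/42"); // served from the store
console.log(store.renders); // 3
```

Build cost now scales with the number of paths you choose to prebuild instead of the total number of pages.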

The lifecycle of a user request

Vercel's Edge Network also caches at all levels of the user request lifecycle:

When a user makes a request, every stage of handling it (TLS, hostname lookup, deployment fetching, and routing) first checks local, in-memory storage before making a more time-consuming database call. These caches exist at three levels: per proxy (the compute instance spun up to serve that particular user), per region (a data center close to where that user is accessing from), and at the origin (close to long-term data storage).

When a user action needs to invoke a serverless function, the Frontend Cloud's infrastructure checks yet another three-tier layer of in-memory storage to see whether the dynamic data it needs is already available. At a high level, this is how features like ISR serve users the latest static files.
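The tiered lookup described above (per proxy, then per region, then origin) follows a familiar multi-level cache pattern. The sketch below is a generic illustration with hypothetical names (`TieredCache`, the tier labels); it is not Vercel's infrastructure code. Each lookup checks the fastest tier first, falls back tier by tier, calls the slow source only on a full miss, and copies the answer into every faster tier it passed on the way.

```typescript
interface Tier {
  name: string;
  store: Map<string, string>;
}

interface Lookup {
  value: string;
  servedBy: string; // which tier answered, or "source"
}

// Generic multi-level cache, fastest tier first (illustrative only).
class TieredCache {
  private readonly tiers: Tier[];
  private readonly source: (key: string) => string;

  constructor(tierNames: string[], source: (key: string) => string) {
    this.tiers = tierNames.map((name) => ({ name, store: new Map<string, string>() }));
    this.source = source;
  }

  get(key: string): Lookup {
    const missed: Tier[] = [];
    for (const tier of this.tiers) {
      const hit = tier.store.get(key);
      if (hit !== undefined) {
        for (const t of missed) t.store.set(key, hit); // promote into faster tiers
        return { value: hit, servedBy: tier.name };
      }
      missed.push(tier);
    }
    const value = this.source(key); // full miss: the slow call (e.g. a database)
    for (const t of missed) t.store.set(key, value);
    return { value, servedBy: "source" };
  }
}

const cache = new TieredCache(["proxy", "region", "origin"], (key) => `route-for:${key}`);
console.log(cache.get("example.com").servedBy); // source
console.log(cache.get("example.com").servedBy); // proxy
```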

By re-caching data and re-invoking functions behind the scenes, Vercel's Frontend Cloud delivers consistent performance no matter how much dynamic data your codebase needs to access.

The Frontend Cloud creates a cohesive and highly customizable caching environment for all levels of infrastructure—from initial user request to data rendered.

Users see dynamic, up-to-date, personalized data, but it's served to them as quickly as a static page.

There’s a lot more to the caching story, including development-side tools like Turborepo and Turbopack, and individual framework capabilities, but this overview should help you move forward in the Frontend Cloud discussion.

Edge Functions and Middleware

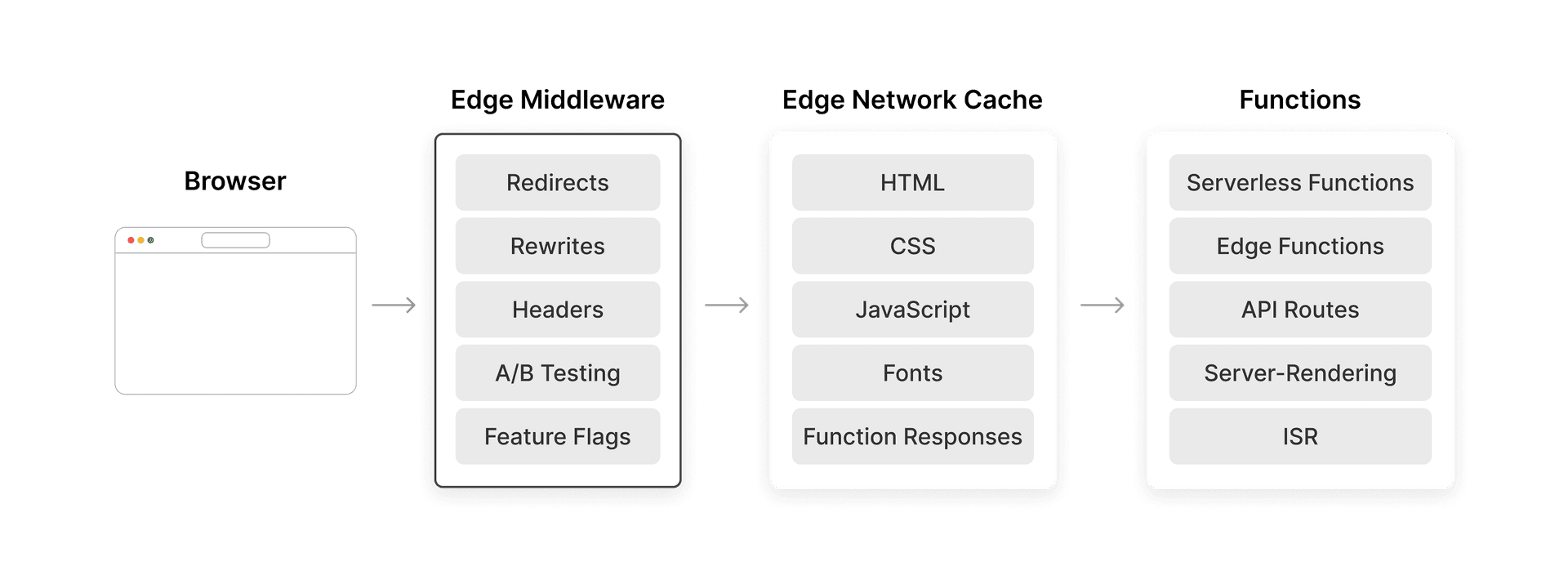

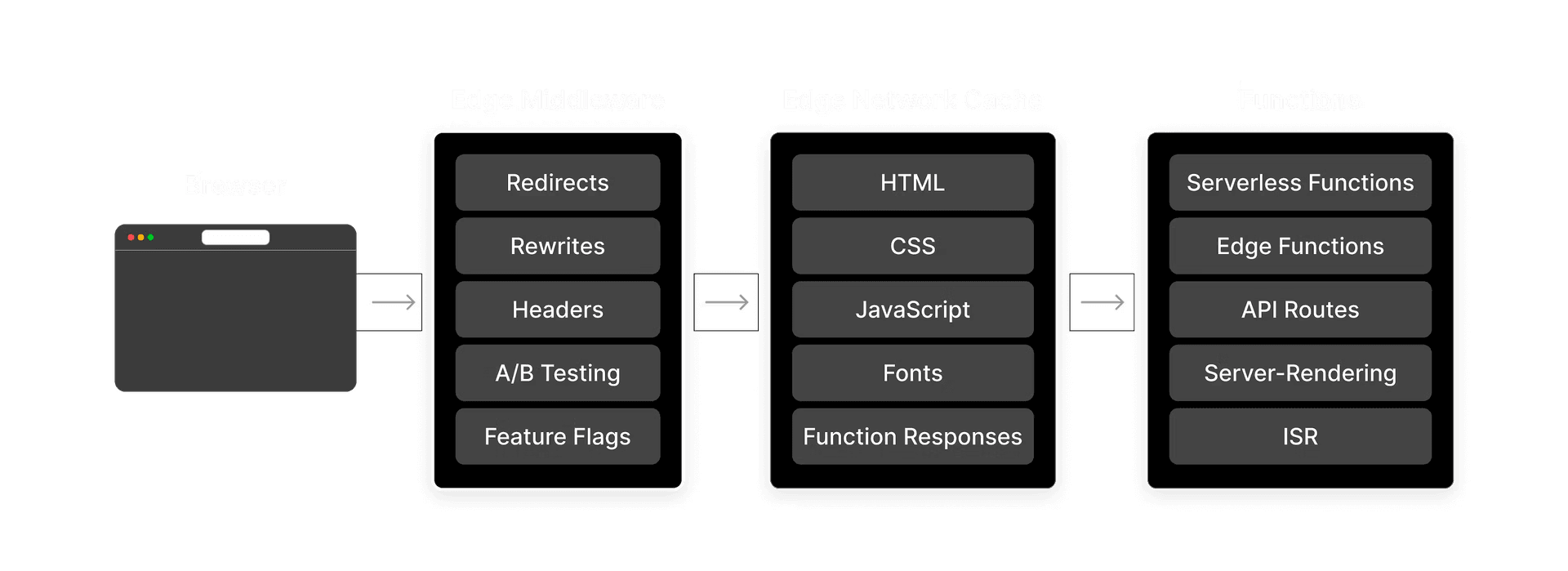

Vercel's Edge Network further differentiates itself from a typical CDN by allowing you to run dynamic server-side JavaScript as close as possible to your user with Edge Functions, or between user request and data rendering with Edge Middleware.

Across multiple frameworks, such as Next.js, SvelteKit, Astro, Nuxt, Remix, and more, Edge Functions are Serverless Functions with the edge option toggled on, and in addition to running at the edge, they benefit from all the same caching and streaming capabilities.
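As a rough illustration, a Next.js App Router route handler opts into the edge runtime with the `runtime` route segment config. The handler below uses only the Web-standard `Request` and `Response` types. `x-vercel-ip-country` is a geolocation header Vercel documents, but treat its presence in your deployment as an assumption to verify; the file location (for example `app/api/greeting/route.ts`) and the greeting table are hypothetical.

```typescript
// Opt this route into the edge runtime (Next.js route segment config).
export const runtime = "edge";

// Hypothetical greetings keyed by ISO country code.
const GREETINGS: Record<string, string | undefined> = {
  JP: "Konnichiwa",
  FR: "Bonjour",
};

export function GET(request: Request): Response {
  // Assumed Vercel geolocation header; absent locally, so fall back.
  const country = request.headers.get("x-vercel-ip-country") ?? "unknown";
  const greeting = GREETINGS[country] ?? "Hello";
  return new Response(JSON.stringify({ country, greeting }), {
    headers: {
      "content-type": "application/json",
      // The body differs per country, so keep it out of shared caches.
      "cache-control": "private, no-store",
    },
  });
}
```

Because the handler is plain Web-platform code, the same function can be exercised locally by constructing a `Request` with the header set by hand.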

Edge Middleware can be written in a typical middleware pattern (in Next.js, for example), but runs before the cache, allowing you to change what is served to the user with zero layout shift or noticeable artifacts.
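Middleware-based personalization usually comes down to choosing which cached variant to serve before the cache is consulted. In Next.js that decision would live in `middleware.ts` and end in a call like `NextResponse.rewrite(...)`; the sketch below keeps only the decision logic as a plain, framework-free function so it can run anywhere. The cookie name `segment`, the `/variants/...` paths, and `pickVariantPath` are hypothetical.

```typescript
// Minimal view of the incoming request that the decision needs.
interface IncomingRequest {
  pathname: string;
  cookies: Record<string, string | undefined>;
  country?: string; // e.g. read from Vercel's x-vercel-ip-country header (assumption)
}

// Return the path of the prebuilt, cacheable variant this visitor should see.
// The visitor receives a complete variant page, with no client-side swap.
function pickVariantPath(req: IncomingRequest): string {
  if (req.pathname !== "/") return req.pathname; // only personalize the home page
  if (req.cookies["segment"] === "returning") return "/variants/returning";
  if (req.country === "JP") return "/variants/jp";
  return "/variants/default";
}

// In Next.js middleware this would roughly become:
//   return NextResponse.rewrite(new URL(pickVariantPath(...), request.url));
console.log(pickVariantPath({ pathname: "/", cookies: { segment: "returning" } })); // /variants/returning
console.log(pickVariantPath({ pathname: "/", cookies: {}, country: "JP" })); // /variants/jp
```

Keeping the decision pure makes it easy to test, and because each variant is itself cacheable, personalization does not force every request back to the origin.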

Both Edge Functions and Middleware allow for top-speed, global personalization of your application—all without leaving your framework code.

Personalization meets speed

The Frontend Cloud allows you to design user-obsessed applications that offer deeply personalized experiences without sacrificing speed for dynamic content.

With boosted search engine relevancy and user engagement, your application can convert far more users.
