
Stream React Components from LLMs to deliver richer user experiences

Last October, we launched v0.dev, a generative UI design tool that converts text and image prompts to React UIs and streamlines the design engineering process.

Today, we are open sourcing v0's Generative UI technology with the release of the Vercel AI SDK 3.0. Developers can now move beyond plaintext and markdown chatbots to give LLMs rich, component-based interfaces.

Visit our demo for a first impression or read the documentation for a preview of the new APIs.

A new user experience for AI

Products like ChatGPT have made a profound impact: they help users write code, plan travel, translate, summarize text, and so much more. However, LLMs have faced two important UX challenges:

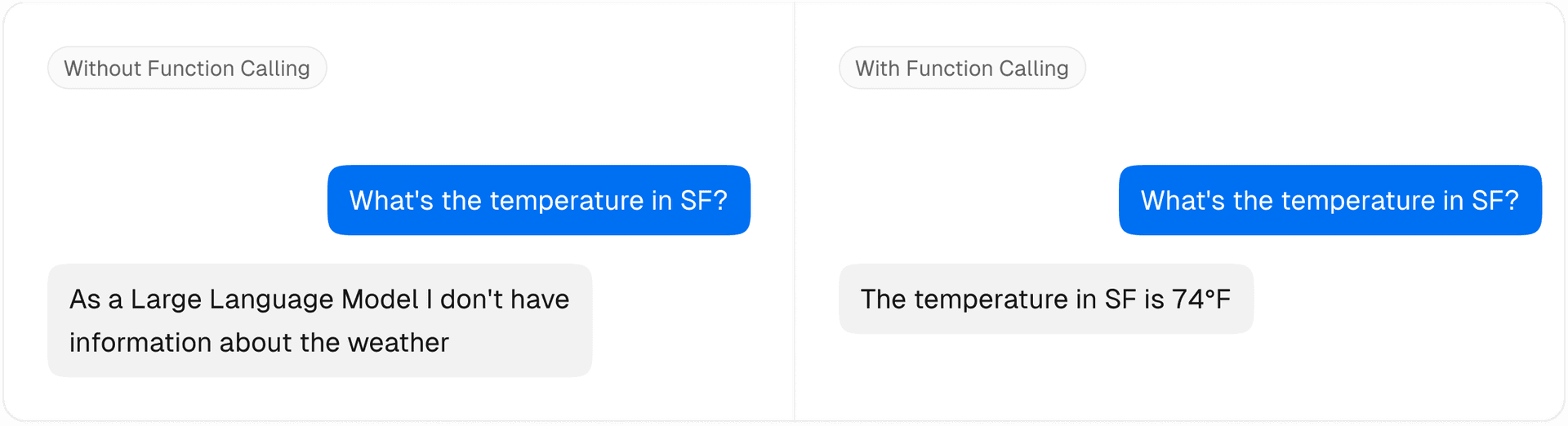

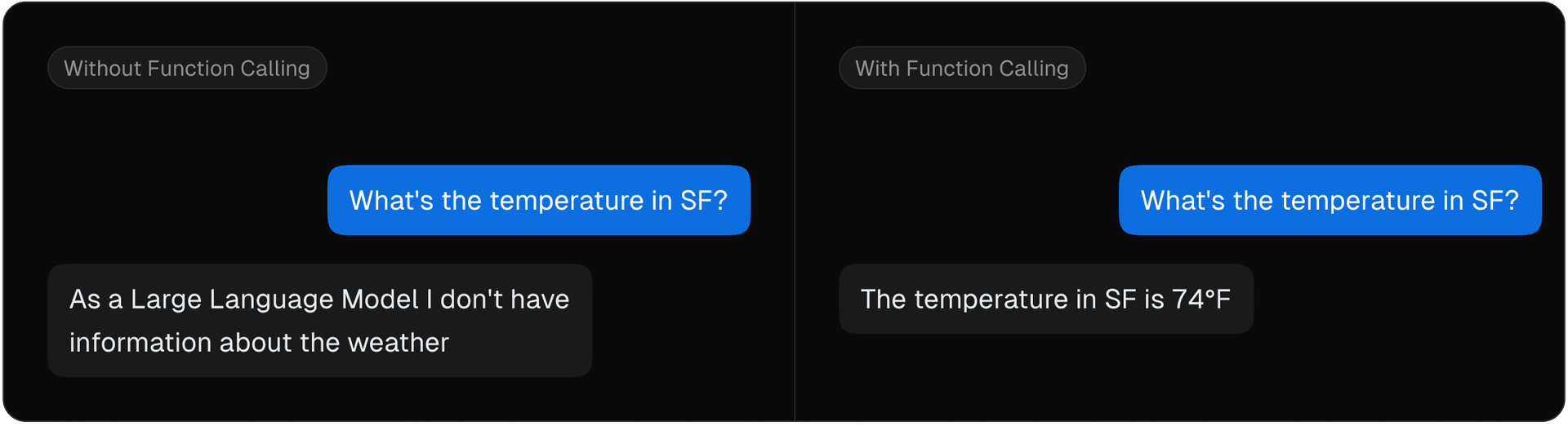

Limited or imprecise knowledge

Plain text / markdown-only responses

With the introduction of Tools and Function Calling, developers have been able to build more robust applications that fetch real-time data.

These applications, however, have been challenging to write and still lack richness and interactivity.

Thanks to our experience in developing v0 with React Server Components (RSC), we've arrived at a simple abstraction that can solve both these problems.

A new developer experience for AI

With the AI SDK 3.0, you can now associate LLM responses with streaming React Server Components.

Let's start with the most basic example: streaming text without retrieval or up-to-date information.

```tsx
import { render } from 'ai/rsc'
import OpenAI from 'openai'

const openai = new OpenAI()

async function submitMessage(userInput) {
  'use server'

  return render({
    provider: openai,
    model: 'gpt-4',
    messages: [
      { role: 'system', content: 'You are an assistant' },
      { role: 'user', content: userInput }
    ],
    text: ({ content }) => <p>{content}</p>,
  })
}
```

Let's now solve both original problems: retrieve the live weather and render a custom UI. If your model supports OpenAI-compatible Functions or Tools, you can use the new `render` method to map specific calls to React Server Components.

```tsx
import { render } from 'ai/rsc'
import OpenAI from 'openai'
import { z } from 'zod'

const openai = new OpenAI()

// Spinner, Weather, and getWeather are assumed to be defined elsewhere in your app.
async function submitMessage(userInput) { // 'What is the weather in SF?'
  'use server'

  return render({
    provider: openai,
    model: 'gpt-4-0125-preview',
    messages: [
      { role: 'system', content: 'You are a helpful assistant' },
      { role: 'user', content: userInput }
    ],
    text: ({ content }) => <p>{content}</p>,
    tools: {
      get_city_weather: {
        description: 'Get the current weather for a city',
        parameters: z.object({
          city: z.string().describe('the city')
        }).required(),
        // Show a spinner while the weather loads, then swap in the final component.
        render: async function* ({ city }) {
          yield <Spinner/>
          const weather = await getWeather(city)
          return <Weather info={weather} />
        }
      }
    }
  })
}
```

Towards the AI-native web

With Vercel AI SDK 3.0, we're simplifying how you integrate AI into your apps. By using React Server Components, you can now stream UI components directly from LLMs without the need for heavy client-side JavaScript. This means your apps can be more interactive and responsive, without compromising on performance.

This update makes it easier to build and maintain AI-powered features, helping you focus on creating great user experiences. We're excited to see what you ship.


Try an experimental preview of AI SDK 3.0 with Generative UI


FAQ

Do I need Next.js to use this?

The new APIs in the AI SDK 3.0 rely on React Server Components (RSC) and React Server Actions, which are currently implemented in Next.js. They do not rely on any Next.js internals, so when other React frameworks like Remix or Waku complete their implementations of RSC, you'll be able to use them for Generative UI, assuming they comply with React's spec.

Do React Server Components work with Next.js Pages Router?

No. The new APIs rely on React Server Components and React Server Actions, which are not implemented in the Next.js Pages Router. However, as of Next.js 13, you can use both App Router and Pages Router in the same Next.js application.

What LLMs are currently supported?

You can use the RSC APIs with any streaming LLM supported by the AI SDK. However, the `render` method expects LLMs to support OpenAI's SDK and, optionally, its Assistant Tools and Function Calling APIs. We also provide lower-level streaming APIs that can be used independently (even without an LLM). At the time of writing, though, the new RSC-based `render` API can be fully used with OpenAI, Mistral, and Fireworks' `firefunction-v1` model because they support OpenAI SDK-compatible Function Calling.

What if my LLM doesn't support tools or function calling?

You can still use the AI SDK 3.0 RSC APIs to stream text and your own components, or you can prompt engineer your LLM to output structured data that can be parsed and used with the AI SDK.
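As a rough sketch of the prompt-engineering route, suppose the system prompt asks the model to reply either with plain text or with a JSON object naming a tool and its arguments. The reply shape (`tool`, `args`), the `knownTools` set, and the `parseModelReply` helper below are illustrative assumptions and not part of the AI SDK; only the `get_city_weather` name is borrowed from the weather example.

```typescript
// Hypothetical reply shape requested via the system prompt, e.g.
// {"tool":"get_city_weather","args":{"city":"SF"}}
type ToolCall = { kind: 'tool'; tool: string; args: Record<string, unknown> }
type TextReply = { kind: 'text'; content: string }
type ModelReply = ToolCall | TextReply

// Tools the app knows how to render; anything else falls back to text.
const knownTools = new Set(['get_city_weather'])

function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === 'object' && value !== null && !Array.isArray(value)
}

// Classify a raw model reply as a structured tool call or as ordinary text.
function parseModelReply(raw: string): ModelReply {
  const trimmed = raw.trim()
  try {
    const data: unknown = JSON.parse(trimmed)
    if (isRecord(data)) {
      const tool = data['tool']
      const args = data['args']
      if (typeof tool === 'string' && knownTools.has(tool) && isRecord(args)) {
        return { kind: 'tool', tool, args }
      }
    }
  } catch {
    // Not valid JSON: fall through and treat the reply as ordinary text.
  }
  return { kind: 'text', content: trimmed }
}
```

A reply classified as `tool` could then be mapped to one of your own components and streamed with the RSC APIs, while a `text` reply is rendered as a plain message.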

Does Generative UI work with OpenAI Assistants?

You can use OpenAI Assistants as a persistence layer and function calling API with the AI SDK 3.0. Or you can manually perform the LLM calls with a provider or API of your choice.

Can anything be passed from the server to the client?

Anything serializable by React can cross the network boundary between server and client. Promises, JavaScript primitives, and certain data structures like Map and Set can all be serialized by React. You can read more about React's serialization in the React docs.

Does this work with LangChain or LlamaIndex?

Yes, with the `createStreamableUI` and `createStreamableValue` primitives, you can use any JavaScript library as long as you can call it during the execution of a React Server Action. This means you can build Generative UI products with tools like LangChain, LlamaIndex, agent abstractions, and with durable task runners like Inngest.
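To make the pattern concrete, here is a dependency-free model of the idea: an action starts background work with some library, returns a handle immediately, and keeps pushing updates into it. `createToyStreamable` and `fakeLibraryStream` are hypothetical stand-ins written for this sketch; they are not the AI SDK's implementation, and the real primitives may behave differently.

```typescript
// Toy stand-in for the idea behind a streamable value: the caller gets an
// async iterable right away while the producer keeps pushing updates.
// This is NOT the AI SDK's implementation, only a model of the pattern.
function createToyStreamable<T>() {
  const queue: T[] = []
  let finished = false
  let wake: (() => void) | null = null

  // Wake the reader if it is currently waiting for new data.
  const notify = () => {
    if (wake) {
      wake()
      wake = null
    }
  }

  async function* read(): AsyncGenerator<T> {
    while (true) {
      while (queue.length > 0) yield queue.shift() as T
      if (finished) return
      await new Promise<void>((resolve) => {
        wake = resolve
      })
    }
  }

  return {
    value: read(),
    update(next: T) {
      queue.push(next)
      notify()
    },
    done() {
      finished = true
      notify()
    },
  }
}

// Hypothetical third-party call (think of a LangChain or LlamaIndex stream).
async function* fakeLibraryStream(): AsyncGenerator<string> {
  for (const token of ['Sunny', ', ', '21C']) yield token
}

// Shaped like a Server Action: start the work, return the handle immediately.
function submitMessage(): AsyncGenerator<string> {
  const stream = createToyStreamable<string>()
  void (async () => {
    let text = ''
    for await (const token of fakeLibraryStream()) {
      text += token
      stream.update(text) // push the accumulated text so far
    }
    stream.done()
  })()
  return stream.value
}
```

In a real application, the SDK's `createStreamableValue` or `createStreamableUI` would stand where the toy helper is, and the library call would replace `fakeLibraryStream`.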