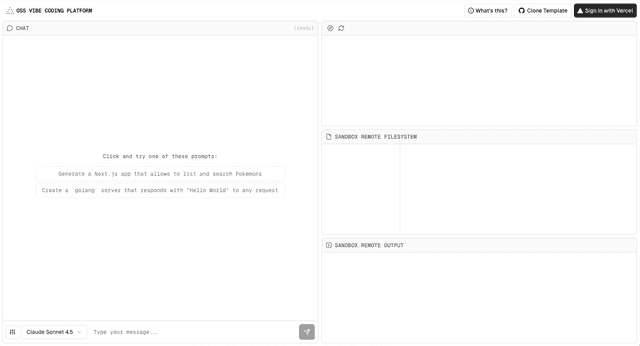

An open-source, in-browser, AI-native IDE built with Next.js, FastAPI, the OpenAI Agents SDK, and the Vercel AI Cloud. The platform combines real-time AI interactions with safe, sandboxed execution environments and framework-defined infrastructure.

Getting Started

First, run the backend development server:

Open http://localhost:8081/docs with your browser to see the backend's interactive API documentation (FastAPI's built-in Swagger UI).

Then, run the frontend development server:

Open http://localhost:3000 with your browser to see the frontend.

How it Works

Frontend

The frontend is built with Next.js and the Monaco Editor. All API calls go to our FastAPI backend, which handles agent and sandbox execution.

Backend

The backend is a decoupled FastAPI app that runs as a function on Fluid Compute, which is optimized for prompt-based workloads. Because a request spends much of its time idle, waiting for the LLM to respond, Fluid Compute reallocates those unused cycles to serve other requests or reduce cost.
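Fluid Compute's internals are not covered here, but the underlying idea can be illustrated with a minimal asyncio sketch: one process handles several requests whose time is dominated by waiting, and the event loop serves the others while each one waits. `fake_llm_call`, `handle_request`, and the 0.2-second latency are hypothetical stand-ins for a real model call.

```python
import asyncio
import time


async def fake_llm_call(prompt: str, latency_s: float = 0.2) -> str:
    # Hypothetical stand-in for a network call to an LLM; the process is idle while it waits.
    await asyncio.sleep(latency_s)
    return f"answer to {prompt!r}"


async def handle_request(prompt: str) -> str:
    # A request handler that spends almost all of its time awaiting the model.
    return await fake_llm_call(prompt)


async def serve_concurrently(prompts: list[str]) -> tuple[list[str], float]:
    start = time.perf_counter()
    # gather() runs all handlers on one event loop; while one awaits, the others proceed.
    results = await asyncio.gather(*(handle_request(p) for p in prompts))
    return list(results), time.perf_counter() - start


if __name__ == "__main__":
    answers, elapsed = asyncio.run(serve_concurrently([f"q{i}" for i in range(10)]))
    # The ten waits overlap, so elapsed is roughly one latency, not ten.
    print(len(answers), f"{elapsed:.2f}s")
```

Ten handlers that each wait 0.2 s finish in roughly 0.2 s total rather than 2 s, because their waits overlap. This is only an in-process analogy; Fluid Compute applies the same kind of reuse at the platform level.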

The agent is built on the OpenAI Agents SDK, with LLM requests routed through the Vercel AI Gateway so that multiple models are supported.
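A rough illustration of why a gateway makes multiple models easy: behind an OpenAI-compatible endpoint, switching models only changes the `model` string in an otherwise identical request. The sketch below builds (but does not send) such a request with the standard library. The base URL, the `provider/model` naming, the `AI_GATEWAY_API_KEY` variable name, and the model IDs `provider-a/model-1` and `provider-b/model-2` are assumptions or placeholders to check against the AI Gateway documentation.

```python
import json
import os
import urllib.request

# Assumption: an OpenAI-compatible gateway endpoint; verify against the AI Gateway docs.
GATEWAY_BASE_URL = "https://ai-gateway.vercel.sh/v1"


def build_chat_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request for any routed model."""
    body = {
        "model": model,  # "provider/model" form is an assumption; the IDs below are placeholders
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{GATEWAY_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("AI_GATEWAY_API_KEY", "dummy-key")  # hypothetical variable name
    for model in ["provider-a/model-1", "provider-b/model-2"]:
        req = build_chat_request(model, "Say hello", key)
        # Same URL and request shape; only the model field differs.
        print(req.get_method(), req.full_url, json.loads(req.data)["model"])
        # Sending it would be: urllib.request.urlopen(req)
```

Because every model sits behind the same request shape, swapping models becomes a configuration change rather than a code change. In this project the requests are issued through the OpenAI Agents SDK rather than built by hand like this.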

Sandboxed Execution

The agent can acquire ephemeral sandboxes to run code. Each sandbox is an isolated environment that expires after a short timeout. A sandbox can only access the code of its own project in the editor, which makes it safe for running arbitrary code.
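The sandbox lifecycle described above (acquire, scope to one project, expire) can be sketched as follows. `ScratchSandbox`, its 300-second default TTL, and the 30-second command timeout are hypothetical. Importantly, a plain subprocess is **not** isolated from the host filesystem; real sandboxes enforce isolation at the OS or VM level. This sketch only mimics the per-project directory, path scoping, expiry, and timeouts.

```python
import subprocess
import sys
import tempfile
import time
from pathlib import Path


class ScratchSandbox:
    """Hypothetical stand-in for an ephemeral sandbox (lifecycle only, NOT real isolation)."""

    def __init__(self, project_files: dict[str, str], ttl_s: float = 300.0):
        # A fresh, empty directory per sandbox; only this project's files are copied in.
        self._tmp = tempfile.TemporaryDirectory(prefix="sandbox-")
        self.root = Path(self._tmp.name).resolve()
        self.expires_at = time.monotonic() + ttl_s
        for rel_path, content in project_files.items():
            self.write_file(rel_path, content)

    def _check_alive(self) -> None:
        # After the TTL, the sandbox tears itself down and refuses further work.
        if time.monotonic() >= self.expires_at:
            self.close()
            raise TimeoutError("sandbox expired")

    def _resolve(self, rel_path: str) -> Path:
        # Reject paths such as "../secrets" or "/etc/passwd" that escape the project directory.
        target = (self.root / rel_path).resolve()
        if not target.is_relative_to(self.root):
            raise PermissionError(f"path outside project: {rel_path}")
        return target

    def write_file(self, rel_path: str, content: str) -> None:
        self._check_alive()
        target = self._resolve(rel_path)
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(content)

    def run(self, argv: list[str], timeout_s: float = 30.0) -> subprocess.CompletedProcess:
        self._check_alive()
        # subprocess.run kills the child and raises TimeoutExpired if it overruns.
        return subprocess.run(argv, cwd=self.root, capture_output=True, text=True, timeout=timeout_s)

    def close(self) -> None:
        # Delete the scratch directory and everything in it (safe to call twice).
        self._tmp.cleanup()


if __name__ == "__main__":
    sb = ScratchSandbox({"main.py": "print('hello from the sandbox')"}, ttl_s=60)
    try:
        print(sb.run([sys.executable, "main.py"]).stdout, end="")
    finally:
        sb.close()
```

Tying cleanup to both an explicit `close()` and the TTL check means an abandoned sandbox still stops accepting work once it expires. `Path.is_relative_to` requires Python 3.9 or later.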

The agent uses these sandboxes to install dependencies, run commands, edit files, and execute code.
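A common way for an agent to drive a sandbox is through tool calls: the model emits a tool name plus JSON arguments, and the backend maps that to an action. The sketch below is a hypothetical, simplified dispatcher. The tool names `write_file` and `run_command` and the `{"name": ..., "arguments": {...}}` call shape are illustrative, not this project's actual tool set. Editing reduces to `write_file`; installing dependencies and executing code reduce to `run_command` (for example with `[sys.executable, "-m", "pip", "install", ...]`).

```python
import json
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Callable


def write_file(root: Path, path: str, content: str) -> str:
    # Edits stay inside the project directory; errors are returned as text for the model.
    target = (root / path).resolve()
    if not target.is_relative_to(root.resolve()):
        return f"error: {path} is outside the project"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(content)
    return f"wrote {len(content)} chars to {path}"


def run_command(root: Path, argv: list[str]) -> str:
    # Run in the project directory and report exit code plus combined output.
    proc = subprocess.run(argv, cwd=root, capture_output=True, text=True, timeout=60)
    return f"exit={proc.returncode}\n{proc.stdout}{proc.stderr}"


# Hypothetical tool registry: tool name -> handler.
TOOLS: dict[str, Callable[..., str]] = {"write_file": write_file, "run_command": run_command}


def dispatch(root: Path, tool_call_json: str) -> str:
    """Execute one model-issued tool call and return text to feed back to the model."""
    call = json.loads(tool_call_json)
    tool = TOOLS.get(call["name"])
    if tool is None:
        return f"error: unknown tool {call['name']}"
    return tool(root, **call["arguments"])


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        root = Path(d)
        plan = [
            json.dumps({"name": "write_file", "arguments": {"path": "app.py", "content": "print(2 + 2)"}}),
            json.dumps({"name": "run_command", "arguments": {"argv": [sys.executable, "app.py"]}}),
        ]
        for call in plan:
            print(dispatch(root, call))
```

Returning errors as strings instead of raising lets the model read what went wrong and try a different step.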

The agent streams updates back to the frontend in real time, so users can watch its progress as it works.
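The transport for these updates is not specified here. One common choice is Server-Sent Events (SSE). Assuming SSE, the sketch below turns a stream of hypothetical agent events (`tool_start`, `tool_output`, `done`) into SSE frames from an async generator. Event names and payloads are illustrative only.

```python
import asyncio
import json
from typing import AsyncIterator


async def agent_events() -> AsyncIterator[dict]:
    # Hypothetical event stream; a real agent would yield these as work happens.
    yield {"type": "tool_start", "tool": "run_command"}
    await asyncio.sleep(0)  # hand control back to the event loop, as awaiting real work would
    yield {"type": "tool_output", "text": "4\n"}
    yield {"type": "done"}


def to_sse(event: dict) -> str:
    # One SSE frame: an "event:" line, a single-line JSON "data:" line, and a blank line.
    return f"event: {event['type']}\ndata: {json.dumps(event)}\n\n"


async def sse_stream(events: AsyncIterator[dict]) -> AsyncIterator[str]:
    # Forward each event to the client as soon as it is produced.
    async for event in events:
        yield to_sse(event)


async def main() -> list[str]:
    return [frame async for frame in sse_stream(agent_events())]


if __name__ == "__main__":
    for frame in asyncio.run(main()):
        print(frame, end="")
```

In a FastAPI backend, a generator like `sse_stream` could be returned as `StreamingResponse(sse_stream(agent_events()), media_type="text/event-stream")`. The browser can then read the frames with `EventSource` or a streaming `fetch`. `json.dumps` escapes newlines, so each payload stays on one `data:` line.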