
The AI Gateway, currently in alpha for all users, lets you switch between ~100 AI models without needing to manage API keys, rate limits, or provider accounts.

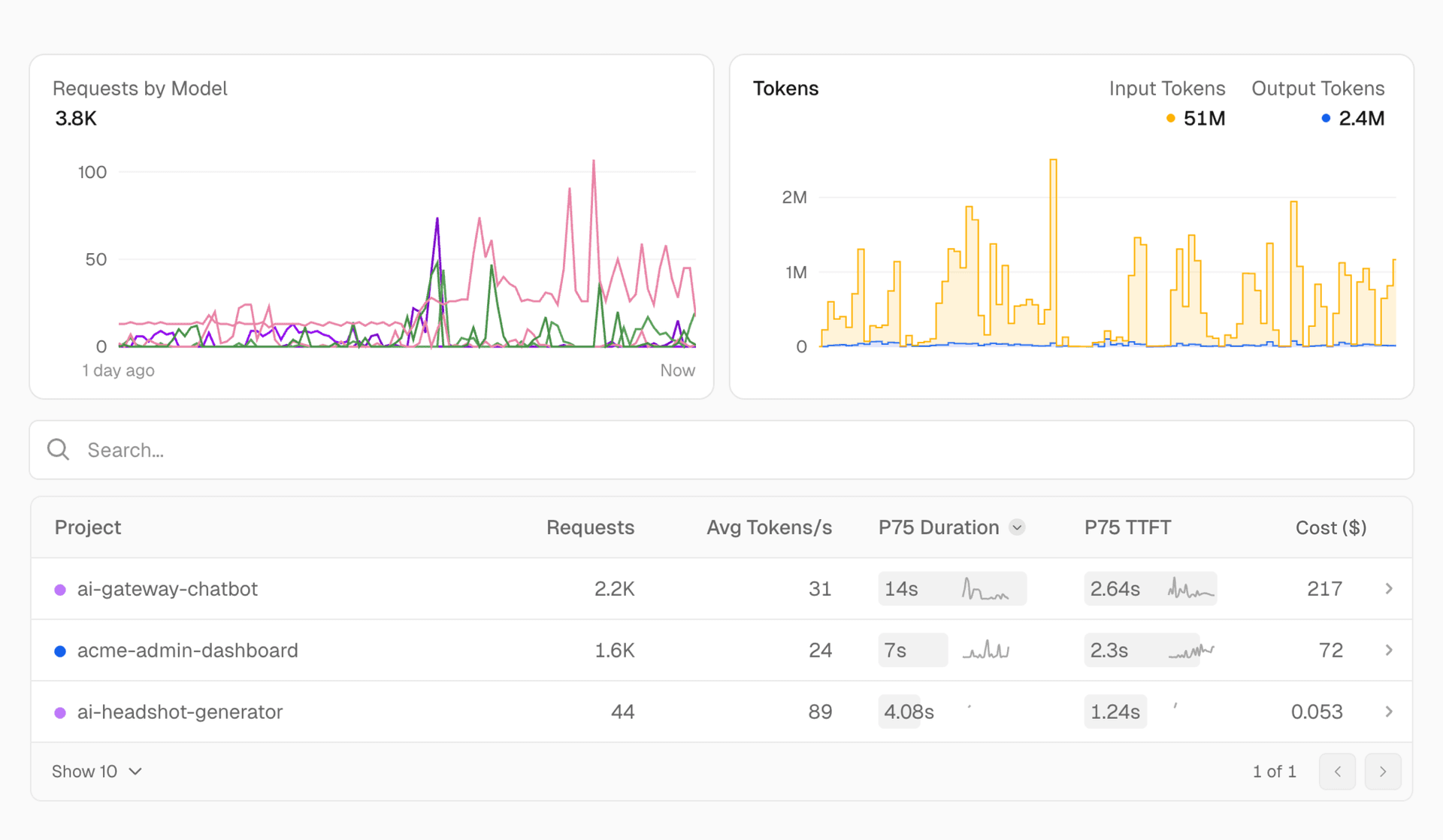

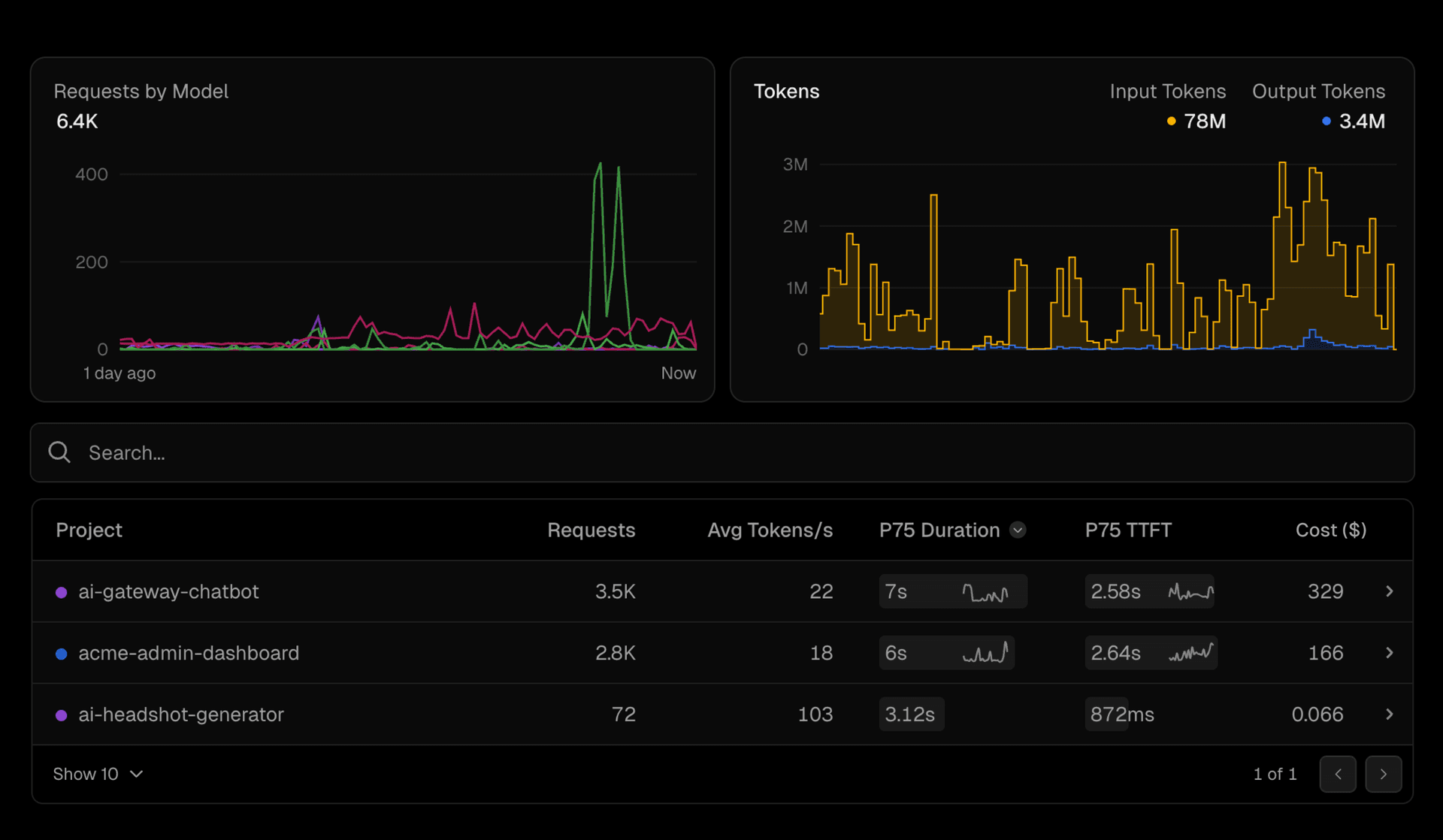

Vercel Observability now includes a dedicated AI section to surface metrics related to the AI Gateway. This update introduces visibility into:

- Requests by model
- Time to first token (TTFT)
- Request duration
- Input/output token count
- Cost per request (free while in alpha)

You can view these metrics across all projects or drill into per-project and per-model usage to understand which models are performing well, how they compare on latency, and what each request would cost in production.
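To show what a per-model view summarizes, here is a minimal TypeScript sketch that groups request records by model. It reports the request count, average TTFT, average duration, and total input/output tokens for each model. This is not Vercel's implementation, and the `RequestRecord` shape, its field names, and the `summarizeByModel` helper are hypothetical. The Observability dashboard does this aggregation for you.

```typescript
// Hypothetical shape of one logged AI Gateway request. The field names are
// illustrative assumptions, not the Vercel Observability schema.
interface RequestRecord {
  model: string;
  ttftMs: number; // time to first token, in milliseconds
  durationMs: number; // total request duration, in milliseconds
  inputTokens: number;
  outputTokens: number;
}

interface ModelSummary {
  requests: number;
  avgTtftMs: number;
  avgDurationMs: number;
  inputTokens: number;
  outputTokens: number;
}

// Hypothetical helper: group records by model, then compute request counts,
// average latency figures, and total token usage for each model.
function summarizeByModel(records: RequestRecord[]): Map<string, ModelSummary> {
  // Running sums per model.
  const sums = new Map<string, { n: number; ttft: number; dur: number; inTok: number; outTok: number }>();
  for (const r of records) {
    const s = sums.get(r.model) ?? { n: 0, ttft: 0, dur: 0, inTok: 0, outTok: 0 };
    s.n += 1;
    s.ttft += r.ttftMs;
    s.dur += r.durationMs;
    s.inTok += r.inputTokens;
    s.outTok += r.outputTokens;
    sums.set(r.model, s);
  }

  // Turn the sums into averages (latency) and totals (tokens).
  const summary = new Map<string, ModelSummary>();
  sums.forEach((s, model) => {
    summary.set(model, {
      requests: s.n,
      avgTtftMs: s.ttft / s.n,
      avgDurationMs: s.dur / s.n,
      inputTokens: s.inTok,
      outputTokens: s.outTok,
    });
  });
  return summary;
}

// Usage with made-up model names and numbers.
const example = summarizeByModel([
  { model: "model-a", ttftMs: 200, durationMs: 1000, inputTokens: 50, outputTokens: 100 },
  { model: "model-a", ttftMs: 400, durationMs: 3000, inputTokens: 30, outputTokens: 60 },
  { model: "model-b", ttftMs: 150, durationMs: 800, inputTokens: 20, outputTokens: 40 },
]);
console.log(example.get("model-a")); // requests: 2, avgTtftMs: 300, avgDurationMs: 2000, ...
```

Latency is averaged per model so models with different traffic volumes can be compared directly, while tokens are summed because usage and cost accumulate.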

Learn more about Observability.