11 min read

Learn how we're cross-compiling a Rust-Go-Rust sandwich to six platforms.

We’ve been porting Turborepo, the high-performance build system for JavaScript and TypeScript, from Go to Rust. We talked about how we started the porting process, so now let’s talk about how we began porting our two main commands: run and prune.

Link to headingSince last time

When we last left off, we had begun our port by implementing global turbo and command line argument parsing in Rust. Because of issues around static linking and Go’s incompatibility with musl, an essential requirement for compatibility with Alpine Linux, we split Turborepo into two binaries: one Rust and one Go.

After that, we ported the auxiliary commands like login, link, unlink. These were fairly simple to port since they only required a basic HTTP client and some configuration management. We were able to move them in one or two pull requests at a time.

Link to headingrun and prune

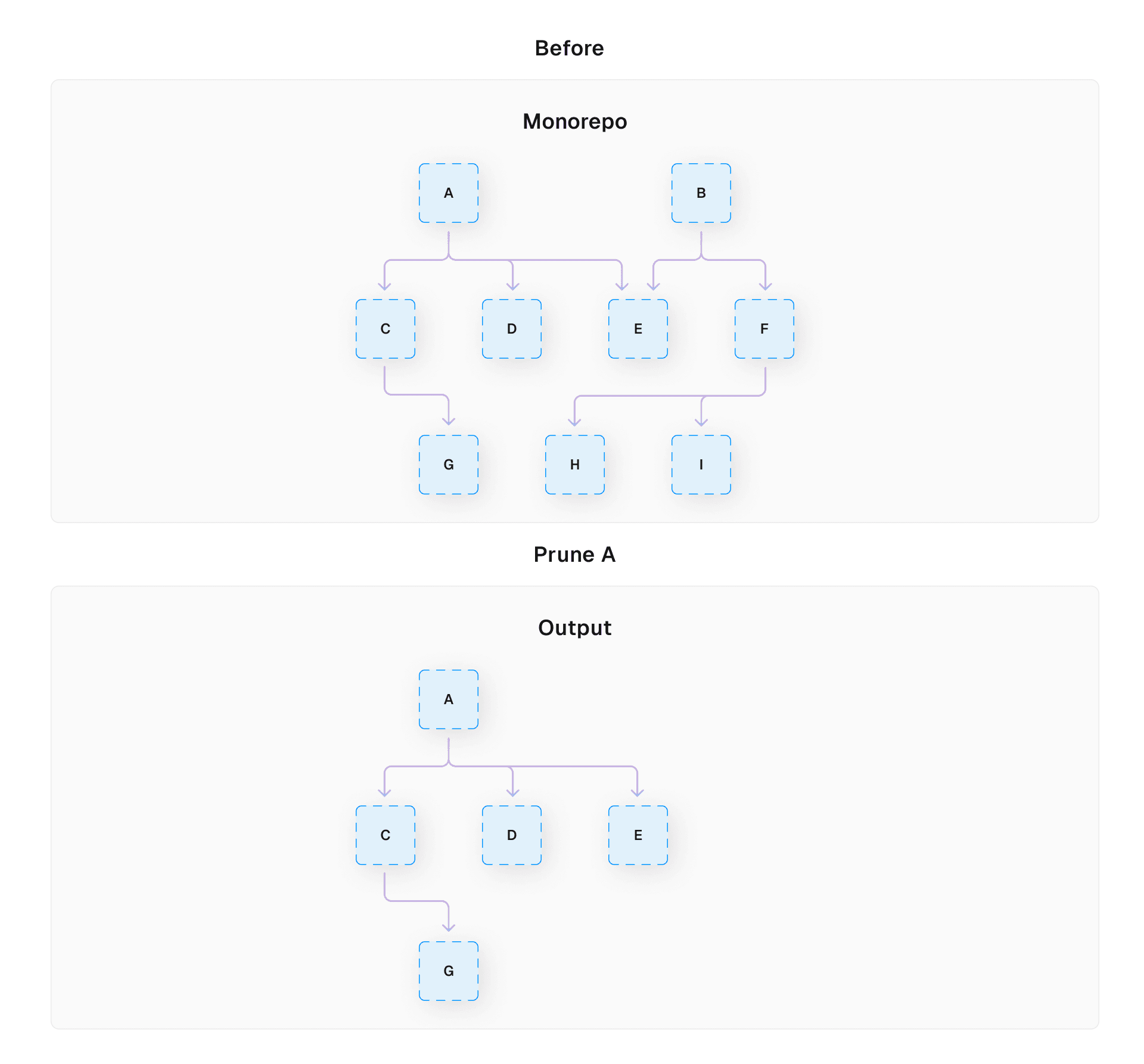

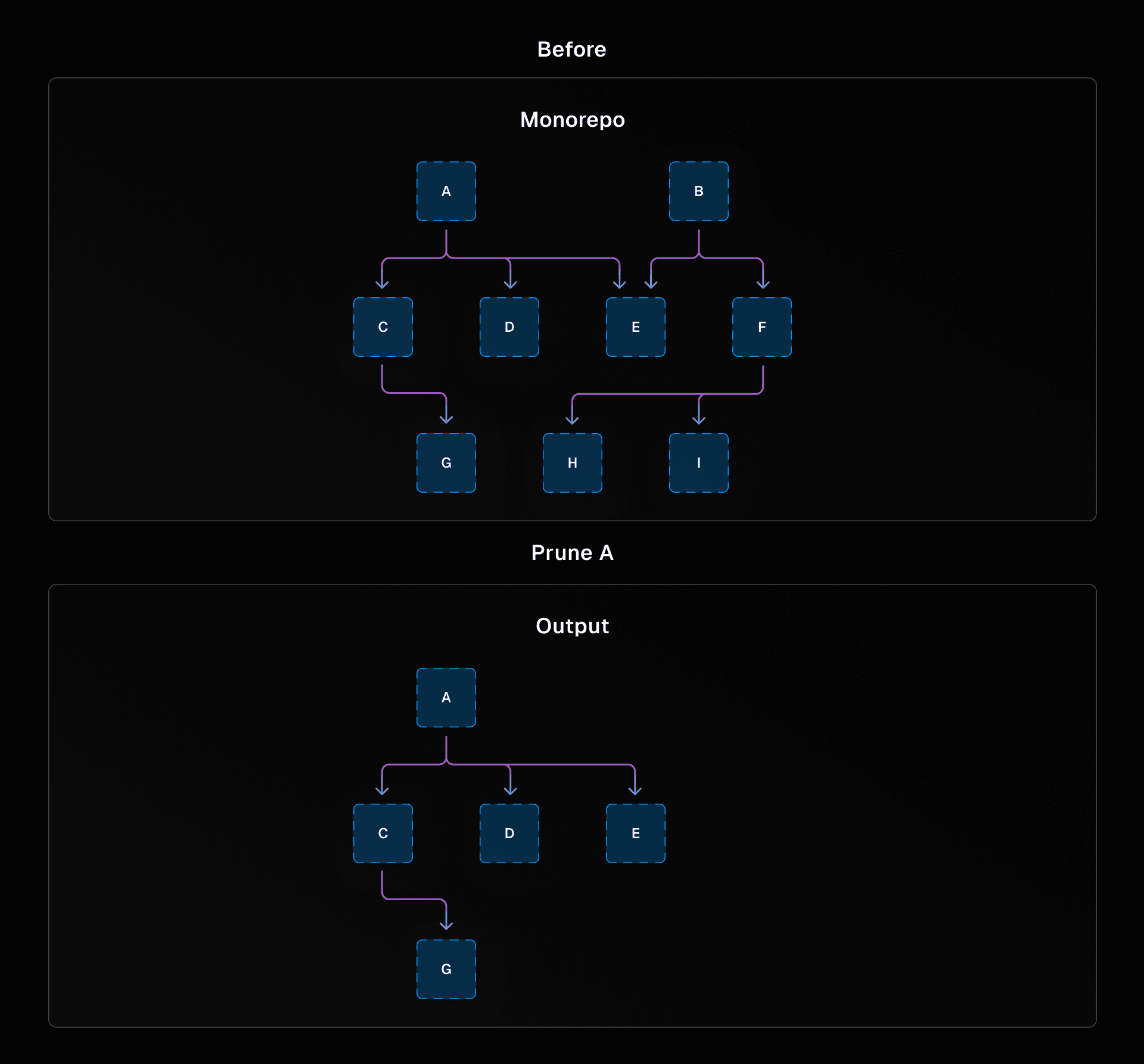

However, after these commands, we ended up with a problem: How could we port run and prune? run and prune are the commands that do the majority of the work in turbo. run, well, runs your tasks, like build, lint, and test. prune takes your Turborepo and produces a subset of it that only contains a single package and its dependencies. This is helpful when you want to only use a specific package, such as in a Docker container, and don’t want to copy the entire Turborepo into the container.

As such, they’re quite large chunks of code. run, for instance, does the following:

Processes configuration from command line arguments, your

turbo.jsonfiles, environment variables, etc.Spins up a dependency graph of the various packages in your Turborepo, i.e. the package graph.

Determines which packages have been modified.

Figures out which tasks need to be run and in which order, using a task dependency graph, i.e. the Task Graph.

Executes the tasks in parallel.

Saves the outputs of these tasks to file system cache and/or remote cache.

Produces a summary of this execution.

And this is a simplified, high-level version! In reality, there’s a lot more going on such as filtering, dry runs, hashing, and more. We couldn’t move these commands in one or two pull requests like we could with the auth commands from before. We needed a way to move them incrementally.

Link to headingStrategies for incremental migration

We considered a few strategies for moving run and prune to Rust, all based on principles of incremental migration.

Link to headingOption 1: The same strategy

The first strategy that we considered was to continue what we were already doing for command-line parsing: Do more work in the Rust code and pass the result to the Go code. However, this had a fatal flaw. Almost all of the work that we were doing in run involved either the package dependency graph or the task dependency graph. Graphs can get large extremely quickly, so serializing them over JSON would induce a lot of overhead.

Also, from a stability perspective, the package graph is the core abstraction within turbo. We needed to build up enough infrastructure and testing to make sure that it was rock-solid before we could ship a ported version.

Link to headingOption 2: Write from scratch

Another option would be to build up a Rust version of run / prune from scratch, then switch over once it’s completed. We didn’t want to do this, since, as stated in the previous blog post, we weren’t confident that we could produce a full rewrite that kept the same behavior.

Link to headingOption 3: Trampoline

We also had some ideas that involved reusing our existing infrastructure, such as creating a trampoline where the Go binary would have an entry-point function that could redirect to different parts of the run pipeline, so the Rust code could call the Go binary, get a result, process that more, then call the Go binary again. This would allow us to reuse the two binary setup that we already had. If you’re familiar with JavaScript callbacks, you can think of this as the Rust code producing events that the Go code handles with a callback.

Link to headingOption 4: Use the daemon

Similarly to the previous option, we considered adapting Turborepo’s daemon for porting. The daemon is a performance optimization that runs in the background and watches the files in your Turborepo. That way, when you run a task, turbo already knows which files have changed and, therefore, which tasks to run. Our strategy would be to port the daemon to Rust, and then add more and more functionality to the daemon, such as having it create the package graph and task graph. However, the daemon is meant as an optional performance improvement, so making it an essential part of the run pipeline would be a significant shift in architecture.

Link to headingThe winning solution: The Go sandwich

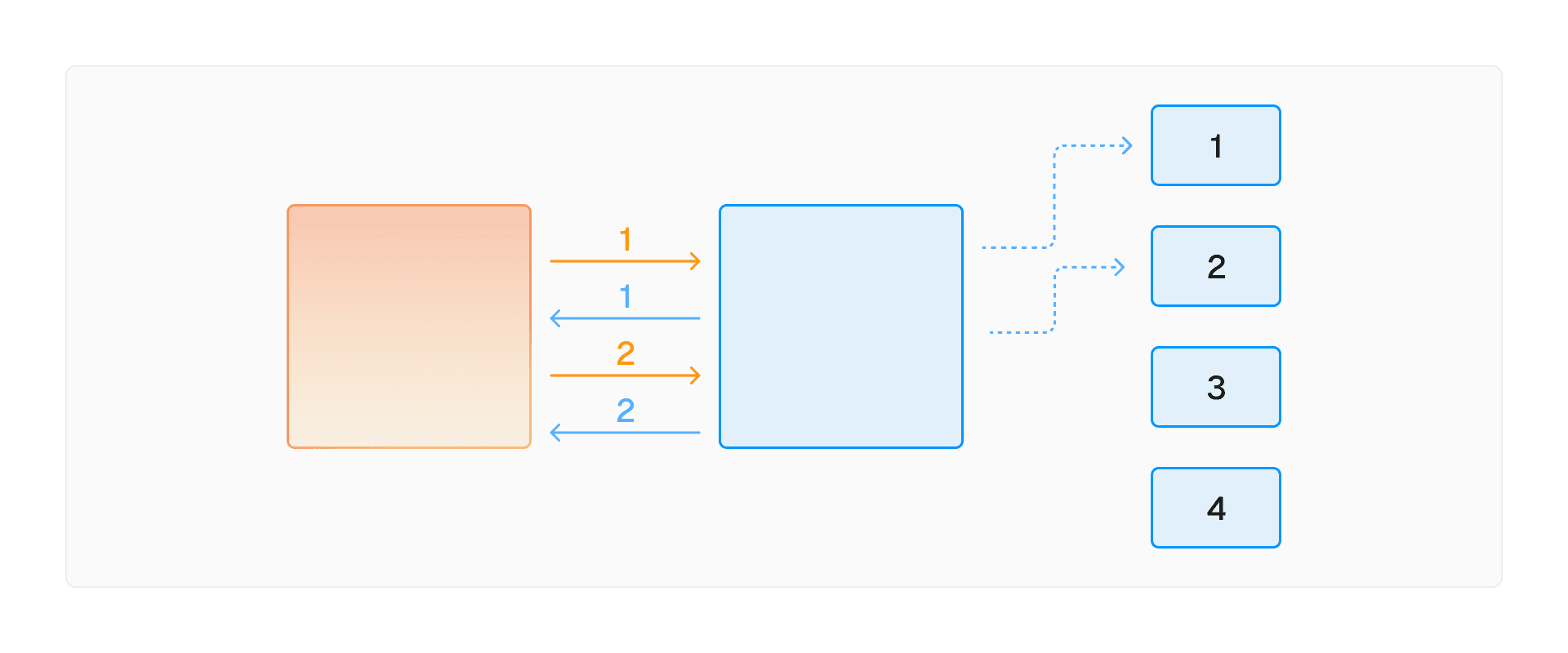

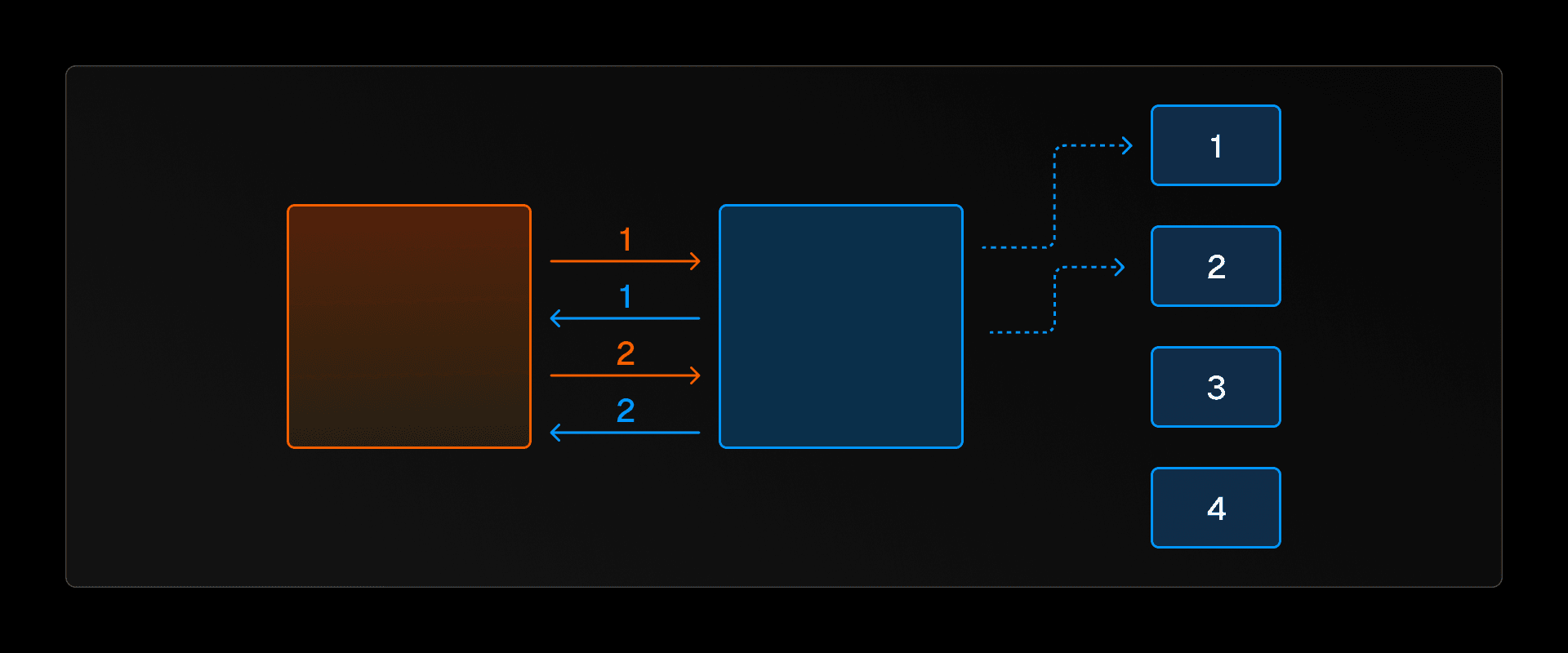

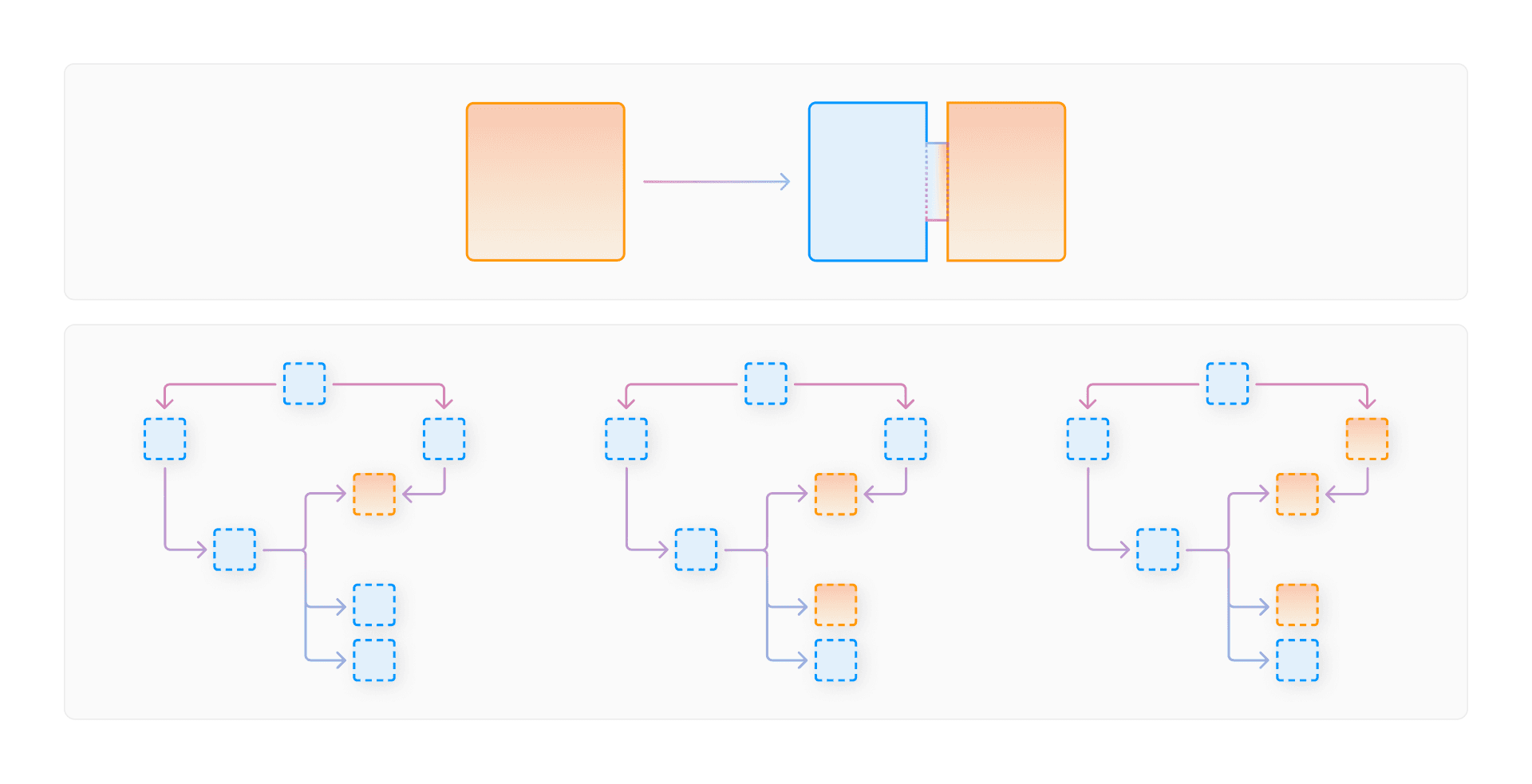

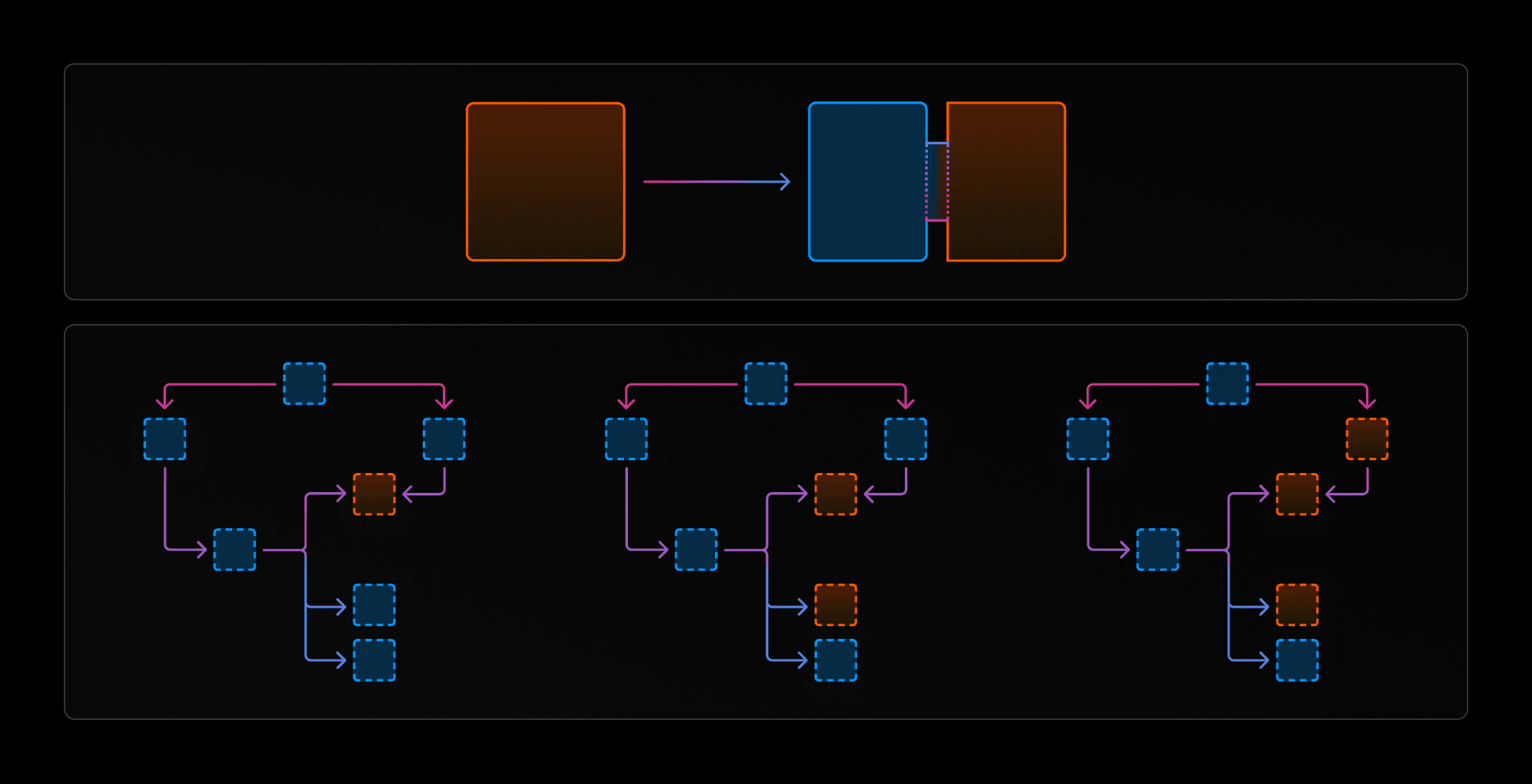

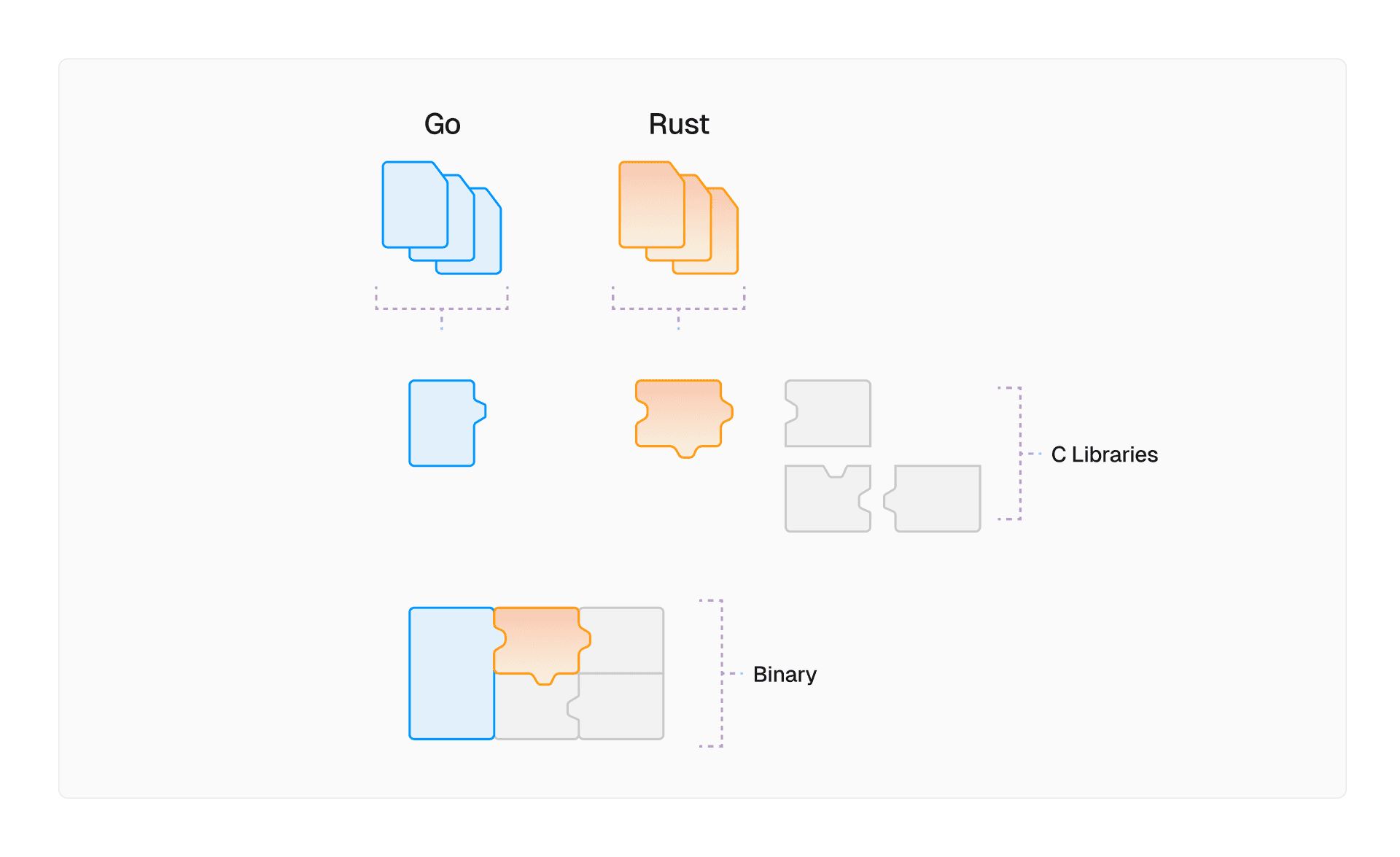

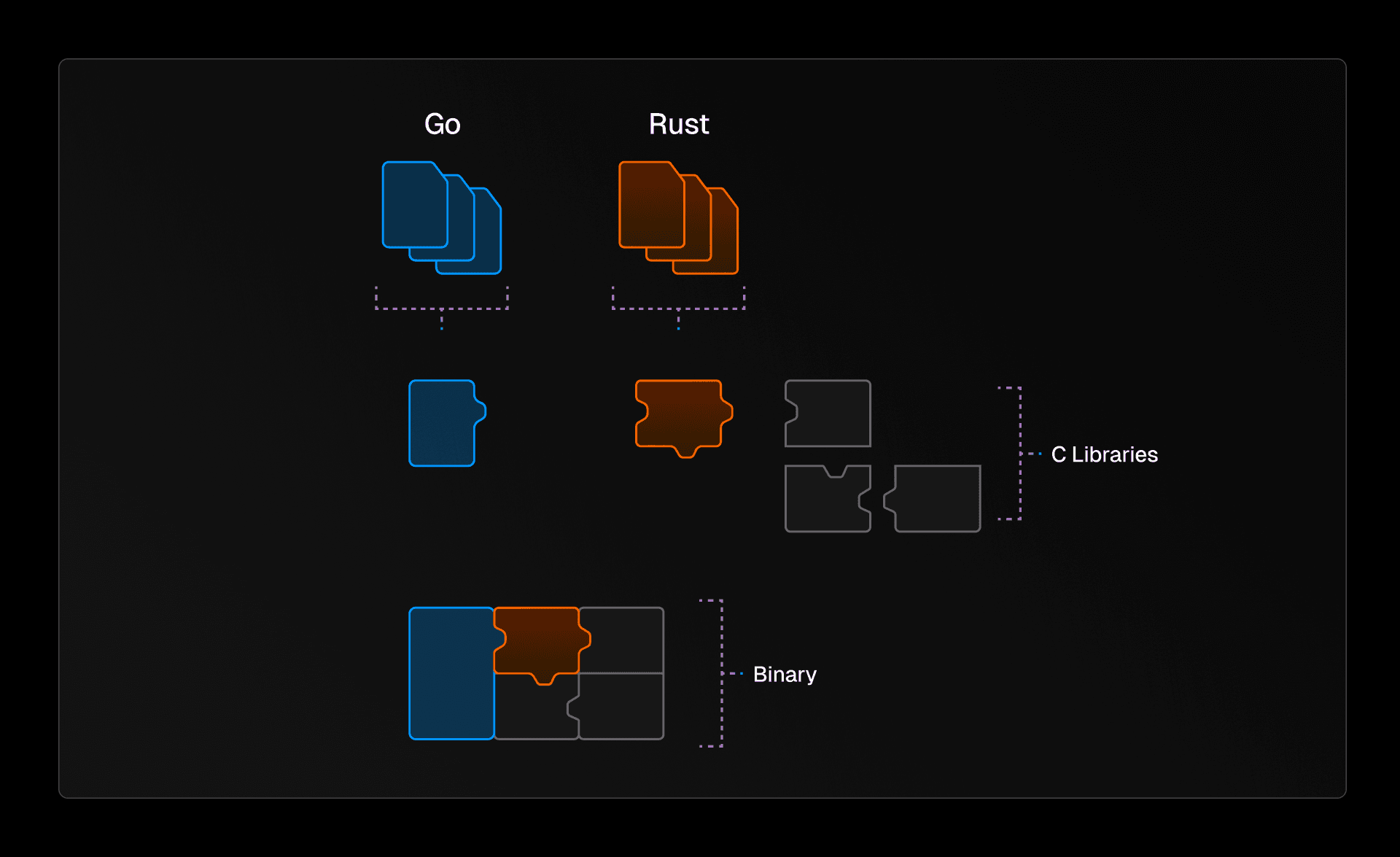

After considering these options, we settled on building what we call the “Rust-Go-Rust sandwich” or the “Go sandwich” for short. It consists of a Rust binary that calls a Go binary, which in turn calls Rust libraries that are statically linked into the Go binary. This allows us to split up the run and prune code between the two languages and port individual dependencies. This way, we could tackle run and prune incrementally, allowing for better testing and debugging through the migration.

The Rust dependencies that we’ve ported are contained in the turborepo-ffi crate. We compile that crate to a C static library, (a staticlib in Rust terms). From there, we link it to our Go binary using CGO. Unlike last time, we didn’t run into any segfault issues because, while linking Go to Rust is broken with musl, linking Rust to Go is fine.

To communicate between Go and Rust, we decided to use protobuf, as it was platform-independent, relatively compact, and had an explicit schema that could be used to generate Rust and Go types, keeping our types in sync across languages. This was especially important since we had a lot more surface area between Rust and Go than when we were porting the command line arguments.

Finally, we modified our build process so that we could toggle the Go sandwich on or off. That way, we could always revert back to the Go code if we encountered bugs in the Rust implementation. This did mean that we had to keep the Go code around, but we knew that this was ultimately temporary.

Link to headingSandwich porting process

Let’s walk through an example of porting to Rust. We have code to include environment variables in what’s called our global hash, which determines whether or not we have a global change that necessitates re-running all of our tasks. This is a nice, self-contained chunk of code, so it was a good candidate for porting.

To start, we needed to determine what exactly we wanted to port. Ideally, we should be porting non-trivial chunks, as we don’t want to be inducing the protobuf overhead for a trivial function—but it shouldn’t be so large that it’s out of the scope of a single PR. In this case, we decided to port the getGlobalHashableEnvVars function, which takes the user's environment variable configuration and determines which environment variables to provide as inputs to the global hash.

Next, we split up the existing code into the old Go implementation (global_hash_go.go) and the new Go code that calls the Rust implementation (global_hash_rust.go). We use Go build tags to decide which implementation to build so we can always toggle back to the old Go implementation if the new Rust implementation contains a bug.

Then, we wrote the Rust implementation, added the necessary input and output types to our protobuf schema, created an exported function in turborepo-ffi, and called that function in ffi.go. And of course, we made sure to write tests! If you want to check out the full process, you can find the pull request here.

With all of this setup, we were able to port more and more pieces of run and prune to Rust in a relatively straightforward process. However, when it came to shipping the Go sandwich, we ran into some release issues. Here’s how we solved them with an unlikely ally.

Link to headingThe Turborepo release process

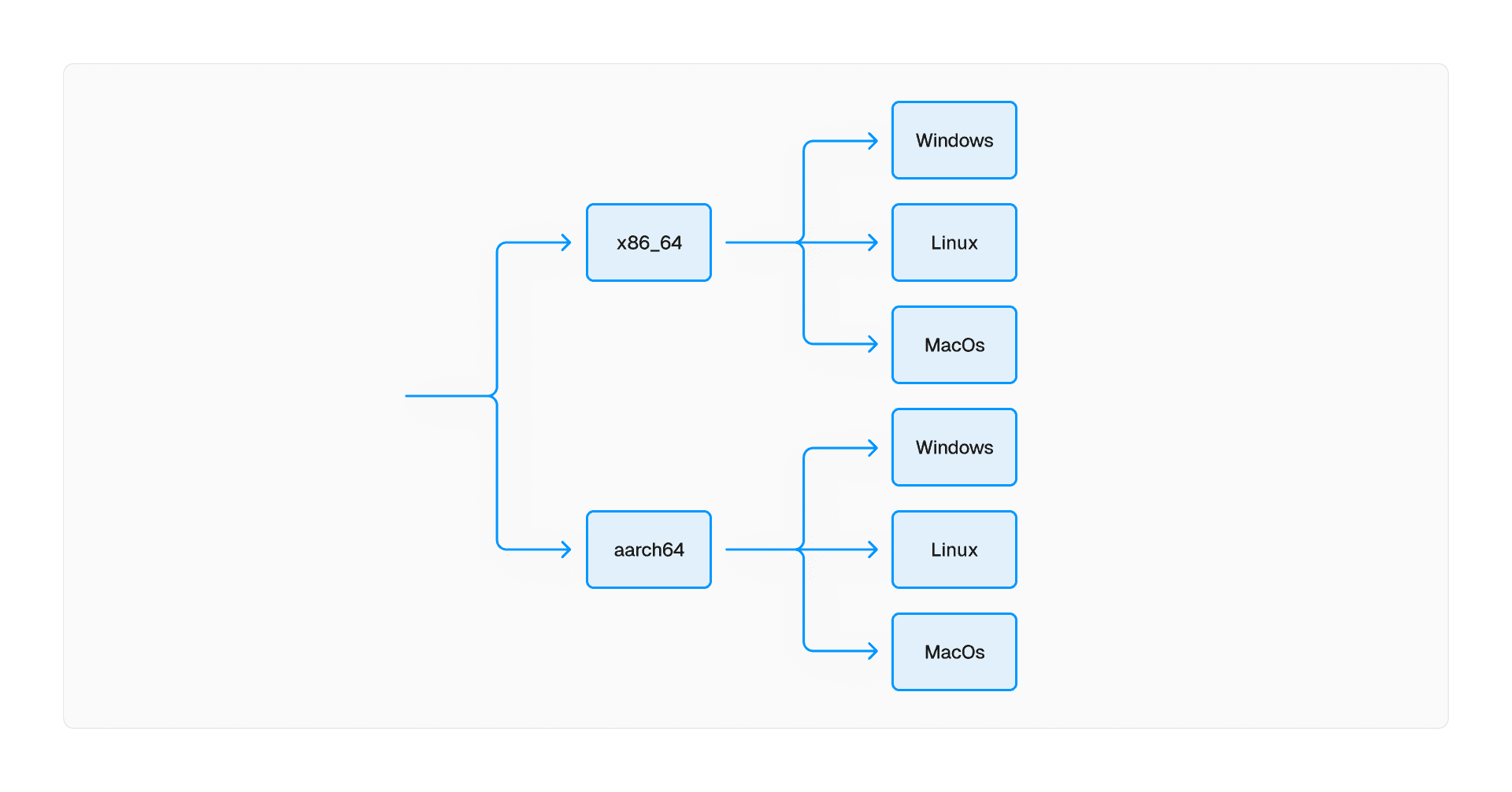

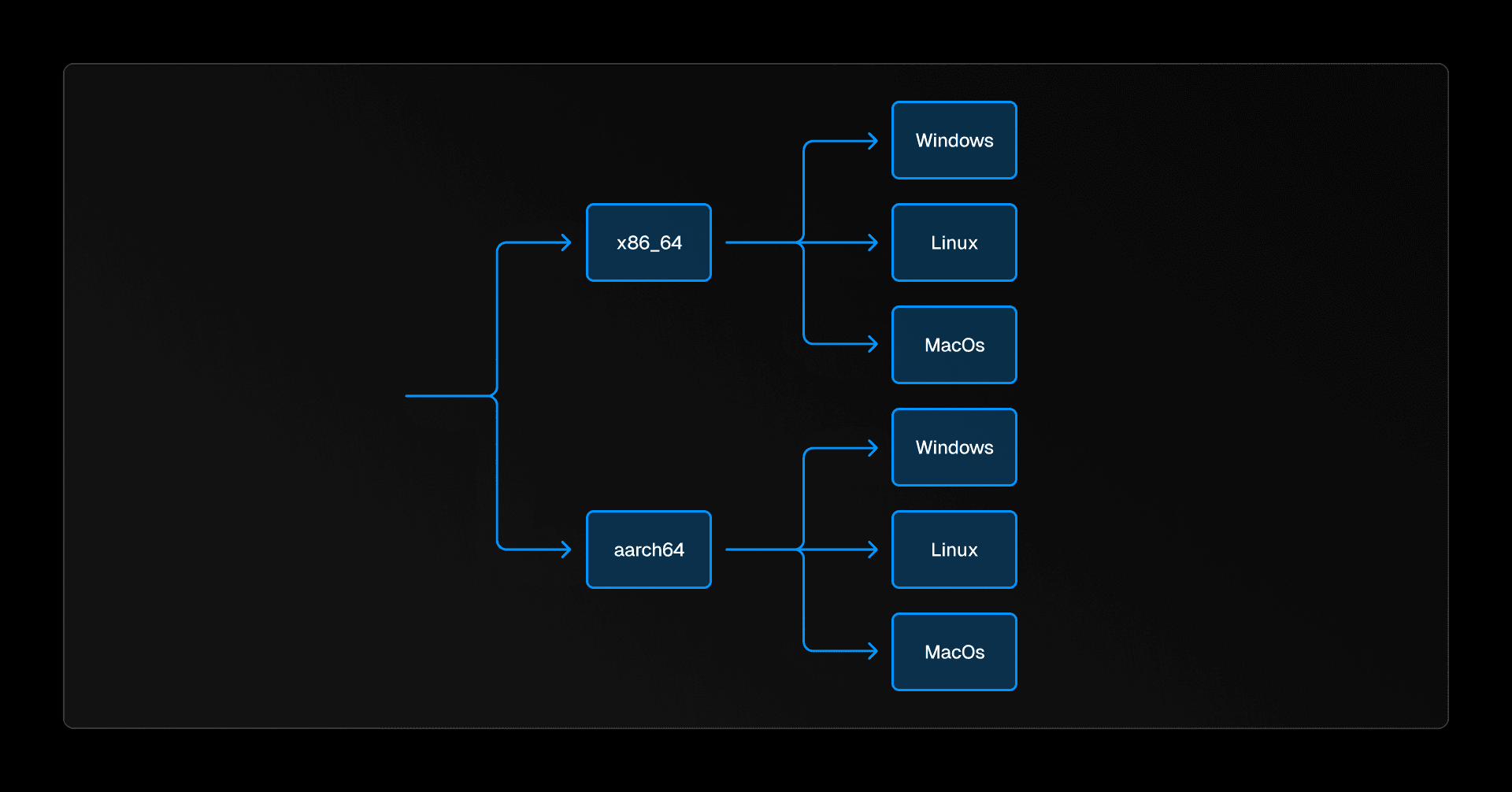

Turborepo is distributed as a native binary on Windows, Linux, and macOS for both x86-64 and aarch64, the two predominant CPU architectures. Doing the math, that's 6 targets to build and support—not an easy task. As we detailed in our post about why we’re moving from Go to Rust, it’s quite difficult to write code that handles low-level features like file systems and processes in a cross-platform manner. However, what’s more challenging is building and releasing this cross-platform code for 6 different targets, especially when you’re using two different languages with two different toolchains.

Generally, when you write code and build it, you are doing a native compilation. You're building the code for the operating system and architecture of the computer that you're currently using. This works just fine for local development; you build and immediately run it on your computer. But for releases, this process needs to change. We can’t spin up 6 different machines for each of the targets we support. It would take a really long time, and waste compute resources, and our release pipeline is on GitHub Actions which does not support aarch64 anyway.

Instead, we do what’s called a cross-compilation. Cross-compilation involves building your code for an operating system and/or architecture that is not the same as the computer you're currently using. For instance, you can cross-compile an x86-64 Linux binary on an aarch64 macOS computer.

To handle cross-compilation for our Go code, we use a tool called Go Releaser. As the name indicates, Go Releaser releases Go code by building binaries for multiple targets, then releases them (in our case to npm).

Link to headingGo sandwich complications

With the Go sandwich, we had a new problem: how can we release a hybrid Go-Rust binary?

We had designed our Go sandwich to use a Rust crate, turborepo-ffi, compiled to a staticlib, a native C library, which we then linked to our Go code via CGO. This worked just fine locally. However, when it came to releasing, we ran into a few issues.

Link to headingWindows ARM

As you may recall from the last post, there are two main toolchains with Windows: Microsoft Visual C++ (MSVC) and Minimalist GNU for Windows (MinGW). The former, MSVC, is written and maintained by Microsoft with a specific Application Binary Interface (ABI), while the latter, MinGW, ports the GNU software suite to Windows. MinGW has its own ABI meaning things compiled for MSVC and for MinGW are not interoperable.

Unfortunately, Go only uses MinGW, while Rust only has support for MSVC on ARM. This meant that we could not link the two languages. Fortunately, Windows on ARM comes with x86-64 emulation, so we could release an x86-64 binary for Windows and let the operating system work its magic to have it run on ARM. There is a performance impact associated, but only until we reach a full Rust binary, or Rust releases a MinGW ARM target.

Link to headingC Dependencies

The second challenge arose when using C dependencies within our Rust code. The process of native compilation typically involves compilers creating object files, which are then linked—or combined—together to form a binary. However, in our case, turborepo-ffi is compiled into an object file, not a binary. As a result, it can't directly link its own C dependencies.

Let's take zlib as an example. If turborepo-ffi uses zlib as a C dependency, it doesn't directly link zlib within its own object file. Instead, it expects that when you integrate turborepo-ffi into a binary (in this case, the Go binary) you'll also supply it with an object file containing the compiled version of zlib. This expectation arises from the fact that C static libraries are not standalone entities but are designed to be inputs to a linker, serving as building blocks in the creation of a binary.

Okay, understood, don’t use C dependencies in turborepo-ffi. Well, that’s not so easy. Many libraries such as git2 and openssl use C dependencies. Even Rust itself uses C dependencies! To handle stack unwinding after a panic, Rust uses a library called libunwind. There’s no escaping C no matter how hard you try.

As we’ve emphasized before, this is all much simpler with native compilation. Either the pre-built libraries are already installed on your computer, or they’re easily installable with your system package manager (apt-get, brew, apk, etc.).

With cross-compilation, this all goes out the window. You can’t easily install pre-built libraries for different platforms than your native one. You can build from source (more cross-compilation!) but C compilers are not usually the greatest at statically linked cross-compilation.

But it turns out there’s an exception.

Link to headingEnter zig cc

You may have heard of Zig, a new systems programming language that’s been gaining some adoption recently. It’s fast, simple, and most importantly, has great interoperability with C. So much so, that it actually comes with its own C compiler, zig cc.

But zig cc is no ordinary C compiler. It’s a C compiler that has easy, out-of-the-box support for cross-compilation. As we just saw, cross-compilation in C is quite painful, requiring installing a compiler for a specific target (or building it from source), finding versions of libraries for the target platform, and writing a whole lot of compiler flag magic.

Erasing all of these concerns practically made us jump with joy. zig cc even comes with a nice long list of targets that it handles out of the box, with bundled libraries—including, you guessed it, all of the targets that we need to build!

I’ll admit, there was some skepticism about using Zig to build our codebase. Would using Zig and Go and Rust be too much programmer hipsterism? Would Hacker News and developer Twitter judge us too harshly?

But the ultimate test is whether the code works. And indeed it worked! Zig allowed us to build our Go sandwich for all our supported platforms, including the C dependencies. Zig is even smart enough to bundle a platform-specific version of libunwind, so all you have to do is pass the flag -lunwind and it’ll just work.

On the Go side, we had to pass some flags to indicate that instead of Go linking everything, we wanted to rely on an external linker, i.e. Zig. The magic combination here was linkmode external -extldflags="-static".

With the help of just one more language toolchain, we were able to ship our Go sandwich to our users and continue on our porting journey. The first version of turbo shipped with the Go sandwich was version 1.8.6.

Link to headingWhy all the effort?

This may seem like a lot of complexity to accommodate a port—an entire new toolchain along with protobuf is nothing to sneeze at—but it allowed us to fulfill our top-line goal: keep shipping code to users. A complete rewrite would have stalled the release of new features for months and risked accumulating unused code. No matter how much testing, no matter how rigorous your type system, code that is not used is code that is ripe for bugs. The Go sandwich ensured that as we ported code, each new piece was actively used and validated.