
Bring your creativity to life with the web's 3D graphic rendering API.

WebGL is a JavaScript API for rendering 3D graphics within a web browser, giving developers the ability to create unique, delightful graphics unlike anything a static image can offer. By leveraging WebGL, we were able to take what would have been a static conference signup and turn it into the immersive Next.js Conf registration page.

In this post, we will show you how to recreate the centerpiece for this experience using open-source WebGL tooling—including a new tool created by Vercel engineers to address performance difficulties around 3D rendering in the browser.

The big idea

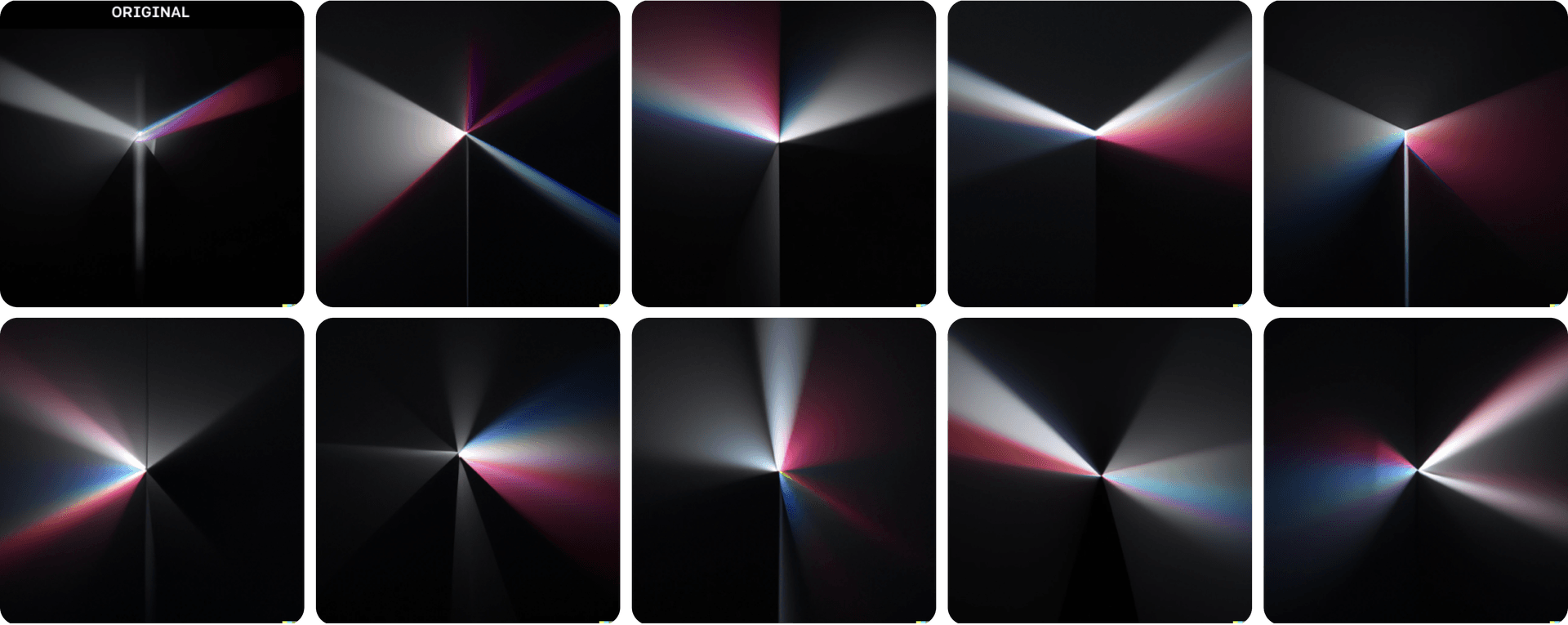

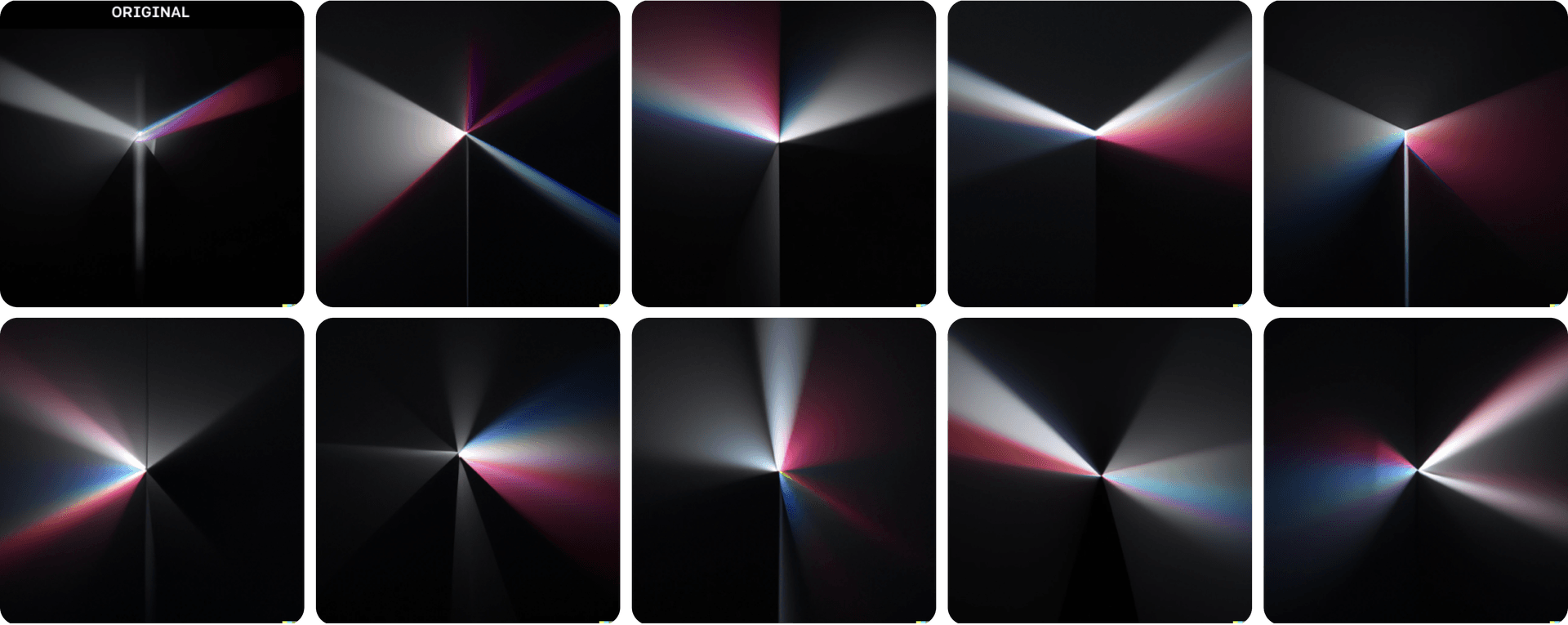

To begin brainstorming the design, we started generating images with DALL-E using prompts around ideas of 3D rendering, triangles, pyramids, and beams of light. Here are a few examples of the images DALL-E gave us:

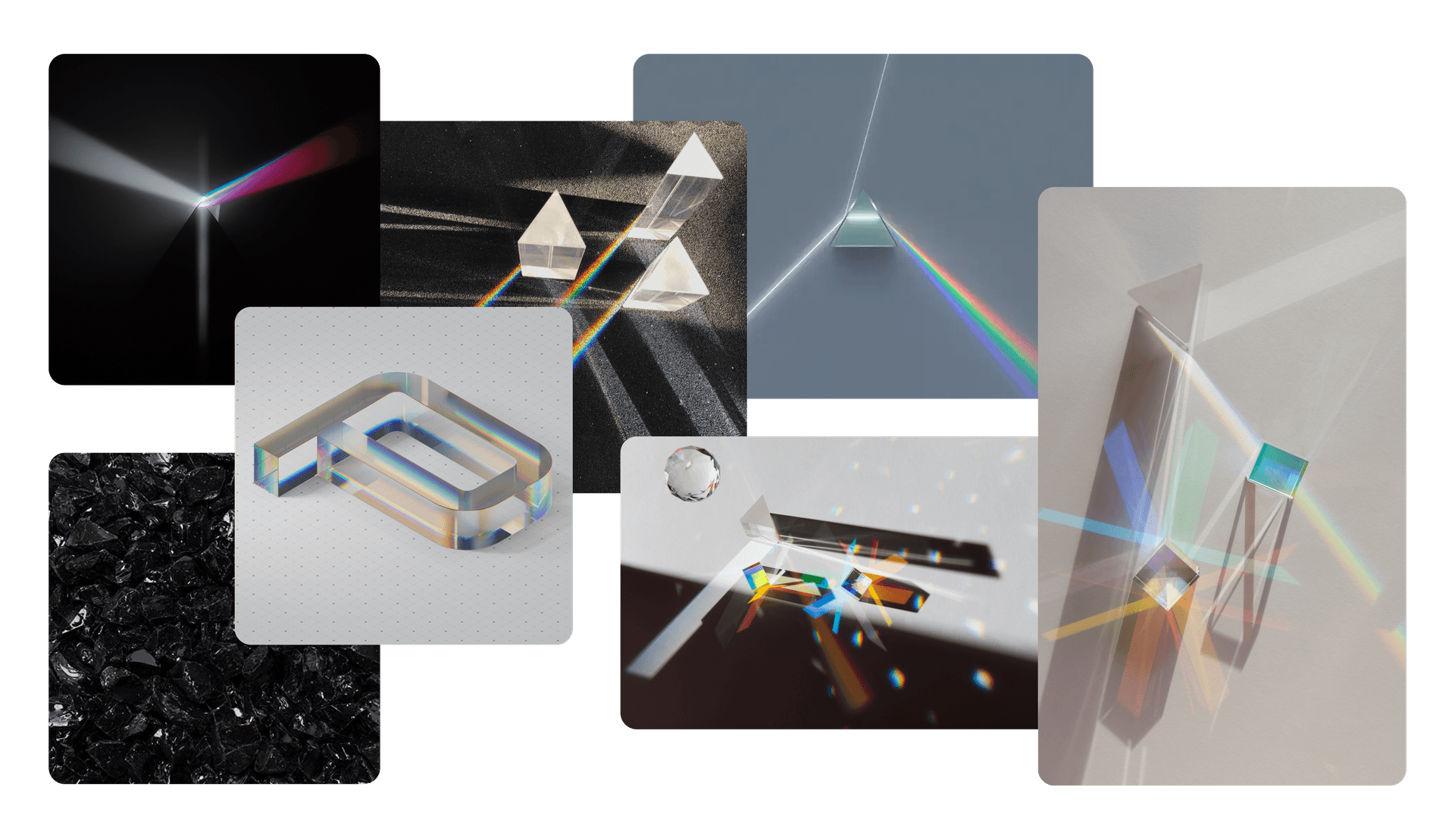

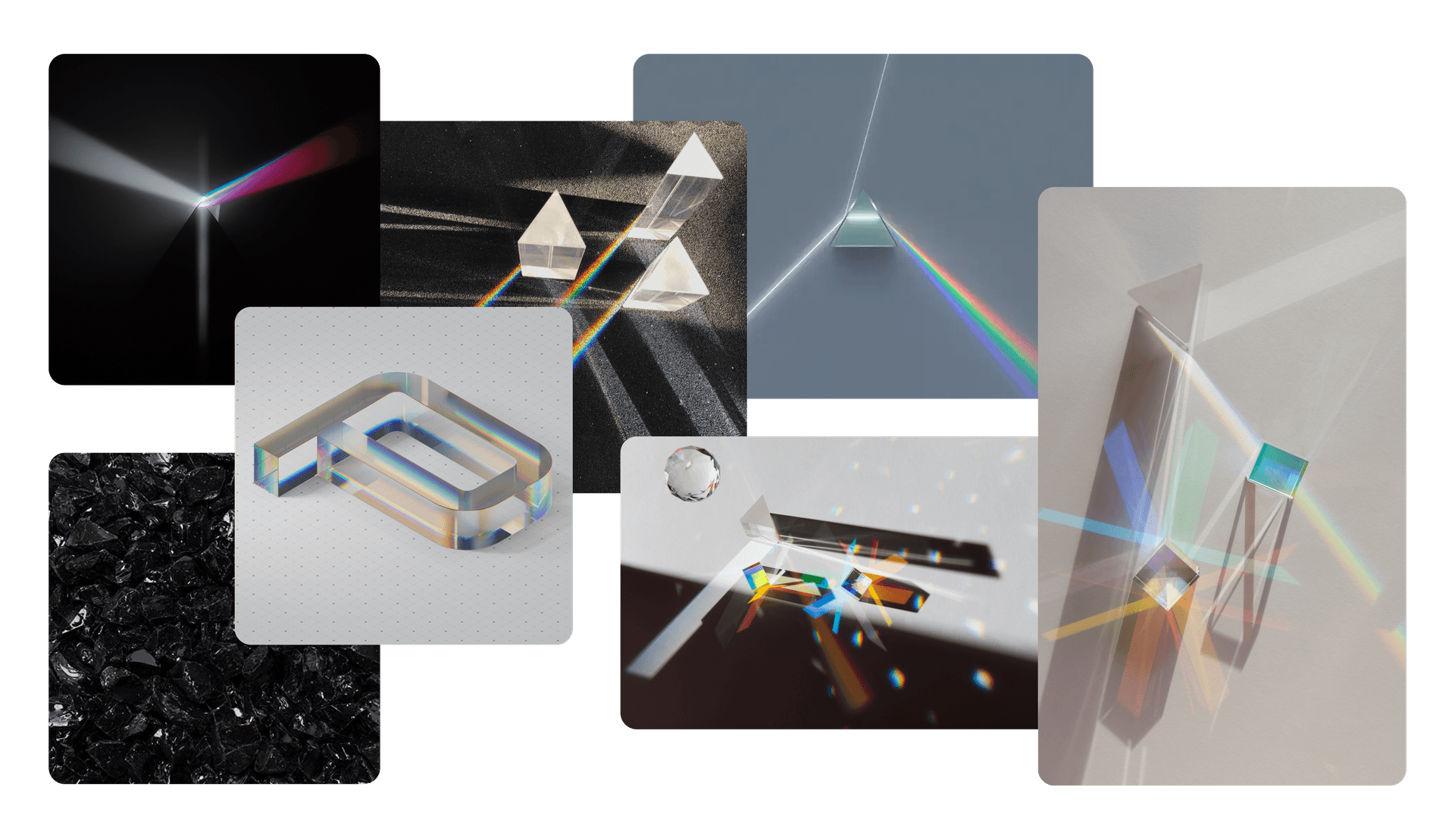

At this point, we began discussing the beauty of a rainbow and how it expresses everything that light is capable of in the visible spectrum. We began tying this idea back to the future of the Web and how we want to help the Web reach its full potential, like the rainbow. Our designers settled on a rich, striking mood board.¹

We also knew we wanted this experience to be interactive and accessible. We began discussing how beams of light can reveal hidden particles in the air. Putting it all together, the idea became: "What if a user could control a light source to reveal objects in a scene like a light beam does? And what if the light bounced off of other objects, hit a prism, and created a gorgeous rainbow?"

Our next challenge was figuring out how to replicate this in the browser. After looking at art from the WebGL community, we started experimenting with WebGL to see how we could achieve this effect.

1. Put together a prototype

To build a proof of concept, we reached for a common, open-source set of tooling in the WebGL space. Let's get to know the tools we used so you can build with us.

Two packages from Poimandres, a developer collective, were key tools for our creative JavaScript project:

react-three-fiber: A library for using three.js in React components (where Vercel engineer Paul Henschel is a lead maintainer)

drei: A “kitchen sink” library of convenience helpers letting the three.js community share common patterns

Getting familiar with react-three-fiber

react-three-fiber lets developers create and interact with objects in a scene using React components. Below is a sandbox to get you started with the basics.

```jsx
import { useRef, useState } from 'react'
import { Canvas, useFrame } from '@react-three/fiber'

function Box(props) {
  // This reference gives us direct access to the THREE.Mesh object.
  const ref = useRef()
  // Hold state for hovered and clicked events.
  const [hovered, hover] = useState(false)
  const [clicked, click] = useState(false)
  // Subscribe this component to the render-loop and rotate the mesh every frame.
  useFrame((state, delta) => (ref.current.rotation.x += delta))
  // Return the view.
  // These are regular three.js elements expressed in JSX.
  return (
    <mesh
      {...props}
      ref={ref}
      scale={clicked ? 1.5 : 1}
      onClick={(event) => click(!clicked)}
      onPointerOver={(event) => hover(true)}
      onPointerOut={(event) => hover(false)}
    >
      <boxGeometry args={[1, 1, 1]} />
      <meshStandardMaterial color={hovered ? 'hotpink' : 'orange'} />
    </mesh>
  )
}

export default function App() {
  return (
    <Canvas>
      <color attach="background" args={['#fff']} />
      <ambientLight intensity={0.5} />
      <spotLight position={[10, 10, 10]} angle={0.15} penumbra={1} />
      <pointLight position={[-10, -10, -10]} />
      <Box position={[-1.2, 0, 0]} />
      <Box position={[1.2, 0, 0]} />
    </Canvas>
  )
}
```

An interactive code sandbox showing the basics of three.js in React: two 3D blocks that spin.

A few things to try with this sandbox:

Hover a cube to turn it pink

In the `meshStandardMaterial`, change `hotpink` to `green` and hover the cube again

Click a cube to toggle its size

Comment out the second `<Box />` (the one at `position={[1.2, 0, 0]}`) to see the cube on the right disappear
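The spin in this sandbox comes from `useFrame`: react-three-fiber calls the callback once per frame and passes `delta`, the time in seconds since the previous frame. Adding `delta` rather than a fixed amount keeps the rotation at roughly one radian per second regardless of the display's refresh rate. The plain JavaScript below is a minimal simulation of that loop (no three.js involved; `simulateSpin` and `simulateFixedStep` are hypothetical names) comparing a delta-based update with a fixed per-frame step.

```javascript
// Simulate the sandbox's render loop without three.js.
// Each simulated frame adds `delta` (seconds since the previous frame) to the rotation,
// mirroring `ref.current.rotation.x += delta` in the Box component.
function simulateSpin(frameRate, seconds) {
  const delta = 1 / frameRate
  const frames = Math.round(frameRate * seconds)
  let rotationX = 0
  for (let frame = 0; frame < frames; frame++) {
    rotationX += delta
  }
  return rotationX
}

// For contrast: a fixed step per frame ties the spin speed to the frame rate.
function simulateFixedStep(frameRate, seconds, step = 0.01) {
  const frames = Math.round(frameRate * seconds)
  let rotationX = 0
  for (let frame = 0; frame < frames; frame++) {
    rotationX += step
  }
  return rotationX
}

console.log(simulateSpin(60, 1).toFixed(3), simulateSpin(120, 1).toFixed(3))
console.log(simulateFixedStep(60, 1).toFixed(2), simulateFixedStep(120, 1).toFixed(2))
```

On a 120 Hz display the fixed-step version spins twice as fast as on a 60 Hz display, while the delta-based version covers the same angle in the same amount of time.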

This brings the power of WebGL and three.js to React developers in a way that feels familiar, allowing them to create awesome 3D visuals in the browser. react-three-fiber also:

Has no limitations when compared to raw three.js. If you can build it with three.js, you can build it with react-three-fiber.

Exposes component props like `onRayHit` that can be used to respond to events in your scene, similar to native React events (e.g. `onClick`).

Outperforms three.js at scale by leveraging React's scheduler.

With enough practice, a React developer can build remarkable experiences in the browser that are limited only by their imagination.

Proving the concept

Now that we know we can build 3D art with React, let's lay the groundwork for an interactive beam of light that bounces off of objects. Once we have this part figured out, we will have the core mechanics of the game you played on the Next.js Conf registration page.
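Bouncing a beam off a flat surface comes down to the mirror-reflection formula r = d − 2(d·n)n, where d is the ray's direction and n is the surface's unit normal. The sandbox delegates this work to a local `Reflect` component whose source isn't shown, so what follows is only a hypothetical 2D sketch of the idea: `traceBounces` and its mirror format are invented for illustration, with `maxBounces` and `far` loosely mirroring the `bounce` and `far` props passed to `Reflect` in the sandbox.

```javascript
// 2D vector helpers; vectors are plain { x, y } objects.
const dot = (a, b) => a.x * b.x + a.y * b.y
const sub = (a, b) => ({ x: a.x - b.x, y: a.y - b.y })
const normalize = (v) => {
  const length = Math.hypot(v.x, v.y)
  return { x: v.x / length, y: v.y / length }
}

// Mirror reflection of direction d about unit normal n: r = d - 2 (d . n) n
function reflect(d, n) {
  const k = 2 * dot(d, n)
  return { x: d.x - k * n.x, y: d.y - k * n.y }
}

// Follow a ray through flat mirrors (infinite lines given by a point and a unit normal).
// Returns the start point, every contact point, and a final point `far` units past the last one.
function traceBounces(origin, direction, mirrors, maxBounces = 10, far = 10) {
  const points = [{ x: origin.x, y: origin.y }]
  let o = points[0]
  let d = normalize(direction)
  for (let bounce = 0; bounce < maxBounces; bounce++) {
    let nearest = null
    for (const mirror of mirrors) {
      const denom = dot(d, mirror.normal)
      if (Math.abs(denom) < 1e-9) continue // ray runs parallel to this mirror
      const t = dot(sub(mirror.point, o), mirror.normal) / denom
      // Skip hits behind the ray, the surface we just left (t close to 0), and anything past `far`.
      if (t > 1e-6 && t <= far && (nearest === null || t < nearest.t)) nearest = { t, mirror }
    }
    if (nearest === null) break
    o = { x: o.x + d.x * nearest.t, y: o.y + d.y * nearest.t }
    points.push(o)
    d = reflect(d, nearest.mirror.normal)
  }
  // Let the beam continue past its final contact point.
  points.push({ x: o.x + d.x * far, y: o.y + d.y * far })
  return points
}

const floor = { point: { x: 0, y: -1 }, normal: { x: 0, y: 1 } }
console.log(traceBounces({ x: 0, y: 0 }, { x: 1, y: -1 }, [floor]))
```

The returned list of points plays the same role as the flat `positions` array the sandbox reads from `reflect.current` each frame.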

In the next sandbox, you will find a "light beam" that follows your cursor and reflects off of a few 2D objects in the scene.

```jsx
import * as THREE from 'three'
import { useRef, useState } from 'react'
import { Canvas, useFrame } from '@react-three/fiber'
import { useTexture } from '@react-three/drei'
import { Reflect } from './Reflect'

export default function App() {
  return (
    <Canvas orthographic camera={{ zoom: 100 }}>
      <color attach="background" args={['#000']} />
      <Scene />
    </Canvas>
  )
}

function Scene() {
  const streaks = useRef()
  const glow = useRef()
  const reflect = useRef()
  const [streakTexture, glowTexture] = useTexture([
    'https://assets.vercel.com/image/upload/contentful/image/e5382hct74si/1LRW0uiGloWqJcY0WOxREA/61737e55cab34a414d746acb9d0a9400/download.png',
    'https://assets.vercel.com/image/upload/contentful/image/e5382hct74si/2NKOrPD3iq75po1v0AA6h2/fc0d49ba0917bcbfd3d8a63688045a0c/download.jpeg',
  ])
  const obj = new THREE.Object3D()
  const f = new THREE.Vector3()
  const t = new THREE.Vector3()
  const n = new THREE.Vector3()
  let i = 0
  let range = 0

  useFrame((state) => {
    reflect.current.setRay([(state.pointer.x * state.viewport.width) / 2, (state.pointer.y * state.viewport.height) / 2, 0])
    range = reflect.current.update()

    for (i = 0; i < range - 1; i++) {
      // Position 1
      f.fromArray(reflect.current.positions, i * 3)
      // Position 2
      t.fromArray(reflect.current.positions, i * 3 + 3)
      // Calculate normal
      n.subVectors(t, f).normalize()
      // Calculate mid-point
      obj.position.addVectors(f, t).divideScalar(2)
      // Stretch by using the distance
      obj.scale.set(t.distanceTo(f) * 3, 6, 1)
      // Convert rotation to euler z
      obj.rotation.set(0, 0, Math.atan2(n.y, n.x))
      obj.updateMatrix()
      streaks.current.setMatrixAt(i, obj.matrix)
    }

    streaks.current.count = range - 1
    streaks.current.instanceMatrix.updateRange.count = (range - 1) * 16
    streaks.current.instanceMatrix.needsUpdate = true

    // First glow isn't shown.
    obj.scale.setScalar(0)
    obj.updateMatrix()
    glow.current.setMatrixAt(0, obj.matrix)

    for (i = 1; i < range; i++) {
      obj.position.fromArray(reflect.current.positions, i * 3)
      obj.scale.setScalar(0.75)
      obj.rotation.set(0, 0, 0)
      obj.updateMatrix()
      glow.current.setMatrixAt(i, obj.matrix)
    }

    glow.current.count = range
    glow.current.instanceMatrix.updateRange.count = range * 16
    glow.current.instanceMatrix.needsUpdate = true
  })

  return (
    <>
      <Reflect ref={reflect} far={10} bounce={10} start={[10, 5, 0]} end={[0, 0, 0]}>
        {/* Any object in here will receive ray events */}
        <Block scale={0.5} position={[0.25, -0.15, 0]} />
        <Block scale={0.5} position={[-1.1, 0.9, 0]} rotation={[0, 0, -1]} />
        <Triangle scale={0.4} position={[-1.1, -1.2, 0]} rotation={[Math.PI / 2, Math.PI, 0]} />
      </Reflect>
      {/* Draw stretched pngs to represent the reflect positions. */}
      <instancedMesh ref={streaks} args={[null, null, 100]} instanceMatrix-usage={THREE.DynamicDrawUsage}>
        <planeGeometry />
        <meshBasicMaterial map={streakTexture} transparent opacity={1} blending={THREE.AdditiveBlending} depthWrite={false} toneMapped={false} />
      </instancedMesh>
      {/* Draw glowing dots on the contact points. */}
      <instancedMesh ref={glow} args={[null, null, 100]} instanceMatrix-usage={THREE.DynamicDrawUsage}>
        <planeGeometry />
        <meshBasicMaterial map={glowTexture} transparent opacity={1} blending={THREE.AdditiveBlending} depthWrite={false} toneMapped={false} />
      </instancedMesh>
    </>
  )
}

function Block({ onRayOver, ...props }) {
  const [hovered, hover] = useState(false)
  return (
    <mesh onRayOver={(e) => hover(true)} onRayOut={(e) => hover(false)} {...props}>
      <boxGeometry />
      <meshBasicMaterial color={hovered ? 'orange' : 'white'} />
    </mesh>
  )
}

function Triangle({ onRayOver, ...props }) {
  const [hovered, hover] = useState(false)
  return (
    <mesh
      {...props}
      onRayOver={(e) => (e.stopPropagation(), hover(true))}
      onRayOut={(e) => hover(false)}
      onRayMove={(e) => null /*console.log(e.direction)*/}>
      <cylinderGeometry args={[1, 1, 1, 3, 1]} />
      <meshBasicMaterial color={hovered ? 'hotpink' : 'white'} />
    </mesh>
  )
}
```

A code sandbox showing a strategy for making lines look more like beams of light.

Let's break down this sandbox into its key parts so we have a strong foundation to build on.

First, the `App` function creates a canvas for the scene to be painted onto. We set the `orthographic` property to make 2D rendering simpler and create a camera with a set zoom level. We also give the background a dark color.

Next, `Scene` establishes a line that follows your cursor as you move it. We use `useFrame`, a hook from react-three-fiber that runs every time a new frame is drawn, to move the start of the ray to the cursor position on every frame.

We also need to draw a few shapes to bounce our light ray off of. `Block` and `Triangle` are React components with `onRayOver` and `onRayOut` handlers that respond as the light beam enters and leaves them.

Last, we bring in two image textures: one for the light beam itself and one for the splash of glow where the light ray intersects an object. Instead of asking WebGL to compute a glowing beam procedurally, we draw these textures on simple instanced planes and only update each plane's position, length, and rotation every frame, which is much cheaper.
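The `useFrame` loop in this sandbox turns each pair of consecutive contact points into a streak: the textured plane is centered at the midpoint, stretched to three times the distance between the points, and rotated around z by `atan2` of the direction between them. Here is the same arithmetic as a plain JavaScript sketch without three.js; `segmentTransforms` is a hypothetical helper that returns the numbers the sandbox writes into each instance matrix.

```javascript
// positions is a flat [x0, y0, z0, x1, y1, z1, ...] array of contact points,
// and count is how many of those points are in use.
function segmentTransforms(positions, count) {
  const segments = []
  for (let i = 0; i < count - 1; i++) {
    const [fx, fy, fz] = positions.slice(i * 3, i * 3 + 3)
    const [tx, ty, tz] = positions.slice(i * 3 + 3, i * 3 + 6)
    segments.push({
      // Center the streak halfway between the two contact points.
      midpoint: [(fx + tx) / 2, (fy + ty) / 2, (fz + tz) / 2],
      // The distance between the points (the sandbox scales the plane to 3x this length).
      length: Math.hypot(tx - fx, ty - fy, tz - fz),
      // Rotate around z so the streak points from the first point toward the second.
      angle: Math.atan2(ty - fy, tx - fx),
    })
  }
  return segments
}

// A beam that goes right, then bounces straight up.
console.log(segmentTransforms([0, 0, 0, 2, 0, 0, 2, 2, 0], 3))
```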

For 3D graphics, this code is relatively simple, and the declarative nature of React lets us build on it with more complex elements, interactions, and scenes.

Next, let's look at bringing in some color.

Creating a rainbow

We need to produce a rainbow for the final ray exiting the prism. Here, we returned to the open source community for inspiration.

On Shadertoy, we found two excellent shaders that we thought could be combined into a powerful effect:

@alanzucconi’s rainbow: A highly performant visible color spectrum.

Juliapoo’s iridescence: Amorphous blob creator to give the rainbow a flowing effect.

The combination of these two shaders can be found in the sandbox below:

```jsx
import { useRef } from 'react'
import { Canvas, useFrame } from '@react-three/fiber'
import { Rainbow } from './Rainbow'

export default function App() {
  return (
    <Canvas>
      <color attach="background" args={['black']} />
      <Scene />
    </Canvas>
  )
}

function Scene() {
  const ref = useRef()
  useFrame((state, delta) => (ref.current.rotation.z += delta / 5))
  return <Rainbow ref={ref} startRadius={0} endRadius={0.65} fade={0} />
}
```

A code sandbox showing how two shaders can be brought together to create an iridescent rainbow.
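At its core, a rainbow shader maps a position across the band to a color in the visible spectrum. The shaders above aren't reproduced here. As a simpler stand-in, this sketch uses Dan Bruton's widely used piecewise-linear approximation of wavelength (in nanometers) to RGB, which is not the function from the sandbox; `rainbowBand` is a hypothetical helper that samples the band from red (700 nm) to violet (400 nm).

```javascript
// Piecewise-linear wavelength-to-RGB approximation (Bruton). Channels are in 0..1.
function wavelengthToRGB(nm) {
  let r = 0
  let g = 0
  let b = 0
  if (nm >= 380 && nm < 440) {
    r = (440 - nm) / (440 - 380) // violet fading into blue
    b = 1
  } else if (nm >= 440 && nm < 490) {
    g = (nm - 440) / (490 - 440) // blue toward cyan
    b = 1
  } else if (nm >= 490 && nm < 510) {
    g = 1
    b = (510 - nm) / (510 - 490) // cyan toward green
  } else if (nm >= 510 && nm < 580) {
    r = (nm - 510) / (580 - 510) // green toward yellow
    g = 1
  } else if (nm >= 580 && nm < 645) {
    r = 1
    g = (645 - nm) / (645 - 580) // yellow toward red
  } else if (nm >= 645 && nm <= 780) {
    r = 1 // red
  }
  return [r, g, b] // outside 380..780 nm: black
}

// Sample the band: t = 0 is the outer (red) edge, t = 1 the inner (violet) edge.
function rainbowBand(steps) {
  const colors = []
  for (let i = 0; i < steps; i++) {
    const t = steps === 1 ? 0 : i / (steps - 1)
    colors.push(wavelengthToRGB(700 - t * (700 - 400)))
  }
  return colors
}

console.log(rainbowBand(7))
```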

2. Enhance the visuals

Often, the raw output of heavy graphical computing doesn't come out perfectly. To create a final, appealing result, you can use postprocessing to apply filters and effects to the rendered image before it is drawn to the screen.

For three.js projects, postprocessing is typically considered a must and can enhance visuals tremendously. For this project we used:

react-postprocessing: Another package from Poimandres, this library can save developers hundreds of lines of code for common postprocessing tasks.

screen-space-reflections: This package captures existing screen data and uses it to create reflections.

react-postprocessing is a popular alternative to three.js's own EffectComposer because of its performance benefits. Most notably, its EffectComposer merges all effects into a single pass, minimizing the number of render operations.
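The benefit of merging comes from how many times the image has to be processed. As a conceptual illustration only (the real merging happens when the library compiles effects into a shader, and effects that sample neighboring pixels, like the blur inside bloom, complicate the picture), this sketch treats each effect as a per-pixel function and compares running one pass per effect with composing them into a single pass. All names here are hypothetical.

```javascript
// Each effect is a per-pixel function from an [r, g, b] color to a new color.
const scale = (k) => ([r, g, b]) => [r * k, g * k, b * k]
const invert = ([r, g, b]) => [1 - r, 1 - g, 1 - b]
const clamp01 = ([r, g, b]) => [r, g, b].map((c) => Math.min(Math.max(c, 0), 1))

// One full pass over the image per effect, producing an intermediate image each time.
function applySeparatePasses(pixels, effects) {
  let image = pixels
  let passes = 0
  for (const effect of effects) {
    image = image.map(effect)
    passes++
  }
  return { image, passes }
}

// Compose the effects into one function, then visit every pixel once.
function applyMergedPass(pixels, effects) {
  const merged = (color) => effects.reduce((c, effect) => effect(c), color)
  return { image: pixels.map(merged), passes: 1 }
}

const pixels = [[0.2, 0.4, 0.6], [0.9, 0.1, 0.5]]
const effects = [scale(1.5), invert, clamp01]
console.log(applySeparatePasses(pixels, effects).passes, applyMergedPass(pixels, effects).passes)
```

Both functions produce the same image, but the merged version reads and writes each pixel once instead of once per effect.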

For our postprocessing, we used three different effects:

Bloom: Give bright areas a glowing effect

Color Lookup Table: Achieve a desired color range

Screen Space Reflections: Create reflections of existing graphical data

Bloom

Bloom is an effect that puts light fringes around the brightest spots of an image. In digital art, this shader mimics the way real-life camera lenses react to bright light: a lens can't perfectly handle areas of bright light in an image and ends up spreading that light across the rest of the image, creating a halo effect.

In our case, this shader brings extra life to the bright parts of the light refracting through the prism as well as the beams of white light being mixed into the rainbow.
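A bloom effect typically works in three steps: isolate pixels brighter than a threshold, blur them so they spill onto their neighbors, and add the result back on top of the image. The one-dimensional sketch below shows those steps on a row of brightness values. The parameter names echo the `luminanceThreshold`, `luminanceSmoothing`, and `intensity` props passed to `<Bloom />` in the final sandbox of this post, but this is a simplified stand-in, not the postprocessing library's implementation.

```javascript
// Ramp from 0 to 1 as x moves from edge0 to edge1 (the GLSL smoothstep curve).
function smoothstep(edge0, edge1, x) {
  if (edge1 <= edge0) return x >= edge0 ? 1 : 0 // no smoothing: hard cutoff
  const t = Math.min(Math.max((x - edge0) / (edge1 - edge0), 0), 1)
  return t * t * (3 - 2 * t)
}

// 1. Bright pass: keep only values above the threshold, with a soft transition.
function brightPass(row, threshold, smoothing) {
  return row.map((l) => l * smoothstep(threshold, threshold + smoothing, l))
}

// 2. Box blur so bright values spill onto neighbors (treating pixels outside the row as 0).
function boxBlur(row, radius) {
  return row.map((_, i) => {
    let sum = 0
    for (let j = i - radius; j <= i + radius; j++) sum += row[j] ?? 0
    return sum / (2 * radius + 1)
  })
}

// 3. Add the blurred glow back on top of the original brightness values.
function bloom(row, { threshold = 1, smoothing = 0.5, radius = 1, intensity = 1 } = {}) {
  const glow = boxBlur(brightPass(row, threshold, smoothing), radius)
  return row.map((l, i) => l + intensity * glow[i])
}

// One very bright pixel gains a halo on its neighbors; dim pixels are left alone.
console.log(bloom([0, 0, 5, 0, 0]))
console.log(bloom([0.5, 0.5, 0.5]))
```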

Color Lookup Tables

Color Lookup Tables (often abbreviated as LUTs) remap the original colors of the entire rendered image to a new range.

For this project, we used the LUT to give the scene a more blue and cinematic look. If you don't want to dial in the colors yourself, premade LUTs are available from resources like IWLTBAP.
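Applying a LUT means looking up each pixel's color in a small 3D grid of output colors and interpolating between the nearest entries. Here is a minimal CPU-side sketch using trilinear interpolation, assuming the table has already been parsed into a flat list in the red-fastest order that .cube files use. `coolLUT` is a made-up grade for illustration, not the LUT used on the registration page, and in a real renderer this lookup happens per pixel on the GPU.

```javascript
// A 3D LUT with `size` entries per axis, stored as a flat list of [r, g, b] outputs.
// Red varies fastest, then green, then blue (the ordering used by .cube files).
const lutIndex = (size, r, g, b) => r + g * size + b * size * size

// A LUT that maps every color to itself.
function identityLUT(size) {
  const lut = []
  for (let b = 0; b < size; b++)
    for (let g = 0; g < size; g++)
      for (let r = 0; r < size; r++) lut.push([r / (size - 1), g / (size - 1), b / (size - 1)])
  return lut
}

const mix = (a, c, t) => a.map((v, i) => v + (c[i] - v) * t)

// Look up a color with trilinear interpolation between the 8 surrounding entries.
function applyLUT(lut, size, color) {
  const coords = color.map((c) => Math.min(Math.max(c, 0), 1) * (size - 1))
  const lo = coords.map((c) => Math.floor(c))
  const hi = lo.map((v) => Math.min(v + 1, size - 1))
  const [dr, dg, db] = coords.map((c, i) => c - lo[i])
  const at = (r, g, b) => lut[lutIndex(size, r, g, b)]
  // Interpolate along red, then green, then blue.
  const c00 = mix(at(lo[0], lo[1], lo[2]), at(hi[0], lo[1], lo[2]), dr)
  const c10 = mix(at(lo[0], hi[1], lo[2]), at(hi[0], hi[1], lo[2]), dr)
  const c01 = mix(at(lo[0], lo[1], hi[2]), at(hi[0], lo[1], hi[2]), dr)
  const c11 = mix(at(lo[0], hi[1], hi[2]), at(hi[0], hi[1], hi[2]), dr)
  return mix(mix(c00, c10, dg), mix(c01, c11, dg), db)
}

// A hypothetical "cool" grade: pull red down slightly and push blue up.
const coolLUT = identityLUT(17).map(([r, g, b]) => [r * 0.9, g, Math.min(b * 1.1 + 0.05, 1)])
console.log(applyLUT(coolLUT, 17, [0.5, 0.5, 0.5]))
```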

Screen Space Reflections

Screen Space Reflections were used to make the scene look more dynamic by having the environment reflected within the prism.

Reflections like these can be calculated through a process called “raymarching”. For every pixel on the screen that can show a reflection, a reflected ray is advanced step by step and compared against the depth of what is already on screen until it hits an existing surface; the color at that point becomes the reflection. This process is rather resource intensive, so we limited these reflections to the prism and turned them off entirely on mobile devices.

This shader noticeably reflects the rainbow within the prism—but will also reflect other objects like the mirror boxes and the light ray itself when those objects are in the right positions.
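The raymarching loop described above can be sketched in a few lines. This is a heavily simplified, hypothetical version: the ray already lives in screen space (x and y in pixels, z in view depth), it advances one fixed step at a time, and it reports a hit once it passes behind the depth stored for a pixel by no more than a `thickness` tolerance. A full implementation would then sample the color buffer at the returned pixel to get the reflected color.

```javascript
// depth[y][x] holds the view depth of whatever is already drawn at that pixel (larger = farther).
// The ray starts at a reflective pixel and moves in screen space while its depth z changes.
function raymarchReflection(depth, start, dir, { maxSteps = 64, thickness = 0.5 } = {}) {
  let { x, y, z } = start
  for (let step = 1; step <= maxSteps; step++) {
    x += dir.x
    y += dir.y
    z += dir.z
    const px = Math.round(x)
    const py = Math.round(y)
    // Leaving the screen means there is no on-screen data left to reflect.
    if (py < 0 || py >= depth.length || px < 0 || px >= depth[py].length) return null
    const sceneDepth = depth[py][px]
    // The ray is now behind the stored surface, but by no more than `thickness`: count it as a hit.
    if (z >= sceneDepth && z - sceneDepth <= thickness) return { x: px, y: py, steps: step }
  }
  return null // no hit within the step budget
}

// A single row of pixels: background far away, one nearby object at x = 5.
const depthRow = [[10, 10, 10, 10, 10, 2, 10, 10, 10, 10]]
console.log(raymarchReflection(depthRow, { x: 0, y: 0, z: 0 }, { x: 1, y: 0, z: 0.5 }))
```

Every step costs a depth lookup per reflective pixel, which is why limiting the effect to the prism, as we did, saves so much work.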

All together now

We now have:

A ray of light

Objects to bounce it off of

A prism

And a rainbow

It's time to put it all together. As one last touch, we gave the prism a beveled model from drei for a final bit of detail.

```jsx
import * as THREE from 'three'
import { useRef, useCallback, useState } from 'react'
import { Canvas, useLoader, useFrame } from '@react-three/fiber'
import { Center, Text3D } from '@react-three/drei'
import { Bloom, EffectComposer, LUT } from '@react-three/postprocessing'
import { LUTCubeLoader } from 'postprocessing'
import { Beam } from './components/Beam'
import { Rainbow } from './components/Rainbow'
import { Prism } from './components/Prism'
import { Flare } from './components/Flare'
import { Box } from './components/Box'

export function lerp(object, prop, goal, speed = 0.1) {
  object[prop] = THREE.MathUtils.lerp(object[prop], goal, speed)
}

const vector = new THREE.Vector3()
export function lerpV3(value, goal, speed = 0.1) {
  value.lerp(vector.set(...goal), speed)
}

export function calculateRefractionAngle(incidentAngle, glassIor = 2.5, airIor = 1.000293) {
  const theta = Math.asin((airIor * Math.sin(incidentAngle)) / glassIor) || 0
  return theta
}

export default function App() {
  const texture = useLoader(LUTCubeLoader, 'https://uploads.codesandbox.io/uploads/user/b3e56831-8b98-4fee-b941-0e27f39883ab/DwlG-F-6800-STD.cube')
  return (
    <Canvas orthographic gl={{ antialias: false }} camera={{ position: [0, 0, 100], zoom: 70 }}>
      <color attach="background" args={['black']} />
      <Scene />
      <EffectComposer disableNormalPass>
        <Bloom mipmapBlur levels={9} intensity={1.5} luminanceThreshold={1} luminanceSmoothing={1} />
        <LUT lut={texture} />
      </EffectComposer>
    </Canvas>
  )
}

function Scene() {
  const [isPrismHit, hitPrism] = useState(false)
  const flare = useRef(null)
  const ambient = useRef(null)
  const spot = useRef(null)
  const boxreflect = useRef(null)
  const rainbow = useRef(null)

  const rayOut = useCallback(() => hitPrism(false), [])
  const rayOver = useCallback((e) => {
    // Break raycast so the ray stops when it touches the prism.
    e.stopPropagation()
    hitPrism(true)
    // Set the intensity really high on first contact.
    rainbow.current.material.speed = 1
    rainbow.current.material.emissiveIntensity = 20
  }, [])

  const vec = new THREE.Vector3()
  const rayMove = useCallback(({ api, position, direction, normal }) => {
    if (!normal) return
    // Extend the line to the prism's center.
    vec.toArray(api.positions, api.number++ * 3)
    // Set flare.
    flare.current.position.set(position.x, position.y, -0.5)
    flare.current.rotation.set(0, 0, -Math.atan2(direction.x, direction.y))
    // Calculate refraction angles.
    let angleScreenCenter = Math.atan2(-position.y, -position.x)
    const normalAngle = Math.atan2(normal.y, normal.x)
    // The angle between the ray and the normal.
    const incidentAngle = angleScreenCenter - normalAngle
    // Calculate the refraction for the incident angle.
    const refractionAngle = calculateRefractionAngle(incidentAngle) * 6
    // Apply the refraction.
    angleScreenCenter += refractionAngle
    rainbow.current.rotation.z = angleScreenCenter
    // Set spot light.
    lerpV3(spot.current.target.position, [Math.cos(angleScreenCenter), Math.sin(angleScreenCenter), 0], 0.05)
    spot.current.target.updateMatrixWorld()
  }, [])

  useFrame((state) => {
    // Tie beam to the mouse.
    boxreflect.current.setRay([(state.pointer.x * state.viewport.width) / 2, (state.pointer.y * state.viewport.height) / 2, 0], [0, 0, 0])
    // Animate rainbow intensity.
    lerp(rainbow.current.material, 'emissiveIntensity', isPrismHit ? 2.5 : 0, 0.1)
    spot.current.intensity = rainbow.current.material.emissiveIntensity
    // Animate ambience.
    lerp(ambient.current, 'intensity', 0, 0.025)
  })

  return (
    <>
      {/* Lights */}
      <ambientLight ref={ambient} intensity={0} />
      <pointLight position={[10, -10, 0]} intensity={0.05} />
      <pointLight position={[0, 10, 0]} intensity={0.05} />
      <pointLight position={[-10, 0, 0]} intensity={0.05} />
      <spotLight ref={spot} intensity={1} distance={7} angle={1} penumbra={1} position={[0, 0, 1]} />
      {/* Prism + blocks + reflect beam */}
      <Beam ref={boxreflect} bounce={10} far={20}>
        <Prism scale={0.6} position={[0, -0.5, 0]} onRayOver={rayOver} onRayOut={rayOut} onRayMove={rayMove} />
        <Box position={[-1.4, 1, 0]} rotation={[0, 0, Math.PI / 8]} />
        <Box position={[-2.4, -1, 0]} rotation={[0, 0, Math.PI / -4]} />
      </Beam>
      {/* Rainbow and flares */}
      <Rainbow ref={rainbow} startRadius={0} endRadius={0.5} fade={0} />
      <Flare ref={flare} visible={isPrismHit} renderOrder={10} scale={1.25} streak={[12.5, 20, 1]} />
    </>
  )
}
```
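The `calculateRefractionAngle` helper above is Snell's law solved for the refracted angle. Written out with the sandbox's defaults, n_air = 1.000293 and n_glass = 2.5, and an incident angle of 30° (π/6, chosen here only as an example):

$$
n_{\text{air}} \sin\theta_i = n_{\text{glass}} \sin\theta_t
\quad\Longrightarrow\quad
\theta_t = \arcsin\!\left(\frac{n_{\text{air}} \sin\theta_i}{n_{\text{glass}}}\right)
= \arcsin\!\left(\frac{1.000293 \times 0.5}{2.5}\right) \approx 0.2014\ \text{rad} \approx 11.5^\circ
$$

For reference, 2.5 is higher than the index of refraction of ordinary glass, which is around 1.5. `rayMove` then multiplies the result by 6 (about 1.21 rad for this example) before adding it to the rainbow's rotation.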

3. Optimize performance

This rendering looks great, but we also have to consider something else that matters: performance.

Building up all of these great effects can take a toll on device resources. We noticed that older devices were starting to have trouble, and, after going through lots of tweaks and variations, we still had reports coming in that performance was an issue.

The usual approach to handling device performance in the WebGL community has been to watch GPU utilization. However, this is known to be fraught with buggy behavior since different devices calculate GPU usage differently. This would cause some devices to under- or over-render according to their perceived resource usage.

Building a new performance monitor

Instead of monitoring resource utilization, we asked “Why not check on the final result of the rendered application to address what our users are actually seeing?”

drei now features a <PerformanceMonitor /> component that watches the frames per second (FPS) of the render to see whether it stays within a developer-defined acceptable range. If the FPS drops too low, the application can reduce the quality of the render to lighten the workload. If the FPS is high enough to afford a better render, the quality can be raised again.
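The decision logic behind this kind of adaptive rendering is small. The sketch below is a framework-agnostic, hypothetical version and not drei's implementation: it averages frame times over a window, compares the resulting FPS against lower and upper bounds, nudges a quality `factor` between 0 and 1, and fires `onDecline` or `onIncline` callbacks. The bound values, window size, and step size are illustrative assumptions.

```javascript
// Hypothetical FPS-based quality controller.
function createFpsMonitor({
  lower = 45, // below this average FPS, lower the quality
  upper = 55, // above this average FPS, raise the quality
  windowSize = 30, // frames to average before making a decision
  step = 0.1, // how far `factor` moves per decision
  onDecline = () => {},
  onIncline = () => {},
} = {}) {
  let factor = 0.5 // current quality scale between 0 and 1
  let frameTimes = []

  return {
    // Call once per rendered frame with that frame's duration in seconds.
    tick(delta) {
      frameTimes.push(delta)
      if (frameTimes.length < windowSize) return
      const averageDelta = frameTimes.reduce((sum, d) => sum + d, 0) / frameTimes.length
      const fps = 1 / averageDelta
      frameTimes = []
      if (fps < lower) {
        factor = Math.max(0, factor - step)
        onDecline({ fps, factor })
      } else if (fps > upper) {
        factor = Math.min(1, factor + step)
        onIncline({ fps, factor })
      }
    },
    get factor() {
      return factor
    },
  }
}

// Simulate a device that drops to 30 FPS for one window, then recovers to 60 FPS.
const events = []
const monitor = createFpsMonitor({
  onDecline: ({ factor }) => events.push(['decline', factor]),
  onIncline: ({ factor }) => events.push(['incline', factor]),
})
for (let i = 0; i < 30; i++) monitor.tick(1 / 30)
for (let i = 0; i < 30; i++) monitor.tick(1 / 60)
console.log(events)
```

In a react-three-fiber app, `tick` could be called from a `useFrame` callback with its `delta` argument, and the callbacks could lower or raise the canvas `dpr`.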

This adaptive approach is a major improvement compared to sniffing GPU utilization or forcing a user to choose a quality level. Here is an example of how to use PerformanceMonitor:

```jsx
import { useState } from 'react'
import { Canvas } from '@react-three/fiber'
import { PerformanceMonitor, usePerformanceMonitor } from '@react-three/drei'
import { EffectComposer } from '@react-three/postprocessing'

// The postprocessing effects that will be used for your render.
function Effects() {
  // A switch that turns effects on and off.
  const [hasEffects, setHasEffects] = useState(true)

  // Subscribe to the nearest <PerformanceMonitor />.
  // `factor` is the current quality scale between 0 and 1.
  usePerformanceMonitor({
    onChange: ({ factor }) => {
      // Drop the effects when quality falls below average
      // and bring them back once it recovers.
      setHasEffects(factor > 0.5)
    },
    // Handle other conditions here
    // when PerformanceMonitor says
    // to decline or incline.
  })

  if (!hasEffects) return null

  return (
    <EffectComposer>
      {/* Your effects */}
    </EffectComposer>
  )
}

function App() {
  // Starting out with the highest resolution,
  // reduce the resolution if the framerate is too low.
  const [dpr, setDpr] = useState(2)

  return (
    <Canvas dpr={dpr}>
      <PerformanceMonitor onDecline={() => setDpr(1.5)} onIncline={() => setDpr(2)}>
        {/* `Scene` stands in for your own scene component. */}
        <Scene />
        <Effects />
      </PerformanceMonitor>
    </Canvas>
  )
}
```

See it live

Check out the Next.js conference registration page for the full experience—and don’t forget to claim your free ticket while you’re there. Can you collect all three tickets by playing the games?

¹ We'd like to thank the outside contributors to our mood board, including artists like Davo Galavotti.