
Introducing PDF support, computer use, and an xAI Grok provider

The AI SDK is an open-source toolkit for building AI applications with JavaScript and TypeScript. Its unified provider API allows you to use any language model and enables powerful UI integrations into leading web frameworks such as Next.js and Svelte.

Since our 3.4 release, we've seen the community build amazing products with the AI SDK:

Val Town's Townie is an AI assistant that helps developers turn ideas into deployed TypeScript apps and APIs right from their browser

Chatbase has scaled to 500K monthly visitors and $4M ARR, using the AI SDK to power customizable chat agents for customer support and sales

ChatPRD helps product managers craft Product Requirements Documents (PRDs) and roadmaps with AI, growing to 20,000 users in just nine months

Today, we're announcing the release of AI SDK 4.0. This version introduces several new capabilities, including:

PDF support

Computer use support (Anthropic)

Continuation support

New xAI Grok provider

Let's explore these new features and improvements.

PDF support

PDF is the standard format for sharing and storing documents. Organizations and individuals hold large collections of contracts, research papers, manuals, and reports as PDFs, so AI systems need to handle the format well if they are going to help with analyzing documents, extracting information, or automating workflows.

AI SDK 4.0 introduces PDF support across multiple providers, including Anthropic, Google Generative AI, and Google Vertex AI. With PDF support, you can:

Extract text and information from PDF documents

Analyze and summarize PDF content

Answer questions based on PDF content

To send a PDF to any compatible model, pass the file as part of the message content using the file type. Here’s an example with Anthropic’s Claude 3.5 Sonnet model:

```typescript
import fs from 'node:fs'; // needed for fs.readFileSync below
import { generateText } from 'ai';
import { anthropic } from '@ai-sdk/anthropic';

const result = await generateText({
  model: anthropic('claude-3-5-sonnet-20241022'),
  messages: [
    {
      role: 'user',
      content: [
        {
          type: 'text',
          text: 'What is an embedding model according to this document?',
        },
        {
          // The PDF is sent as a file part alongside the text question.
          type: 'file',
          data: fs.readFileSync('./data/ai.pdf'),
          mimeType: 'application/pdf',
        },
      ],
    },
  ],
});
```

Thanks to the AI SDK's unified API, to use this functionality with Google or Vertex AI, you only need to swap the provider import and the model in the code above.

The code example demonstrates how PDFs integrate seamlessly into your LLM calls. They're treated as just another message content type, requiring no special handling beyond including them as part of the message.
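Because a PDF is just another content part, building the message can be factored into a small helper. The following is a minimal sketch, not part of the AI SDK: the type names and the `buildPdfQuestion` and `looksLikePdf` helpers are hypothetical, and the types are simplified local mirrors of the message shape used in the example above. It also checks for the `%PDF-` header that well-formed PDF files start with, so an obviously wrong file is rejected before any model call.

```typescript
// Simplified local mirrors of the message shape used in the example above.
// These are illustrative assumptions, not the SDK's exported types.
type TextPart = { type: 'text'; text: string };
type FilePart = { type: 'file'; data: Uint8Array; mimeType: string };
type UserMessage = { role: 'user'; content: Array<TextPart | FilePart> };

// A well-formed PDF starts with the ASCII bytes "%PDF-".
const PDF_MAGIC = [0x25, 0x50, 0x44, 0x46, 0x2d];

function looksLikePdf(bytes: Uint8Array): boolean {
  return PDF_MAGIC.every((byte, i) => bytes[i] === byte);
}

// Hypothetical helper: pairs a question with a PDF in a single user message.
function buildPdfQuestion(question: string, pdf: Uint8Array): UserMessage {
  if (!looksLikePdf(pdf)) {
    throw new Error('Expected a PDF: missing "%PDF-" header');
  }
  return {
    role: 'user',
    content: [
      { type: 'text', text: question },
      { type: 'file', data: pdf, mimeType: 'application/pdf' },
    ],
  };
}
```

Since a Node.js Buffer is a Uint8Array, the result of fs.readFileSync can be passed to this helper directly, and the returned object can be placed in the messages array.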

Check out our quiz generator template to see PDF support in action. Using useObject and Google's Gemini 1.5 Pro model, it generates an interactive multiple-choice quiz based on the contents of a PDF that you upload.

Computer use support (Anthropic)

Enabling AI to interact with apps and interfaces naturally, instead of using special tools, unlocks new automation and assistance opportunities.

AI SDK 4.0 introduces computer use support for the latest Claude 3.5 Sonnet model, allowing you to build applications that can:

Control mouse movements and clicks

Input keyboard commands

Capture and analyze screenshots

Execute terminal commands

Manipulate text files

Anthropic provides three predefined tools designed to work with the latest Claude 3.5 Sonnet model: the Computer Tool for basic system control (mouse, keyboard, and screenshots), the Text Editor Tool for file operations, and the Bash Tool for terminal commands. These tools are carefully designed so that Claude knows exactly how to use them.

While Anthropic defines the tool interfaces, you'll need to implement the underlying execute function for each tool, defining how your application should handle actions like moving the mouse, capturing screenshots, or running terminal commands on your specific system.
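What such a handler looks like depends entirely on your environment. As a minimal sketch, the code below dispatches a subset of the computer tool's actions against an in-memory stand-in for a desktop. The `Desktop` type, `createDesktop`, and this `executeComputerAction` signature (with an extra `desktop` argument) are hypothetical; a real implementation would call OS-level automation instead of appending to a log.

```typescript
// Hypothetical in-memory stand-in for a real desktop session.
interface Desktop {
  width: number;
  height: number;
  cursor: [number, number];
  log: string[]; // stands in for real OS-level side effects
}

function createDesktop(width: number, height: number): Desktop {
  return { width, height, cursor: [0, 0], log: [] };
}

// True only for a two-element coordinate inside the display bounds.
function isOnScreen(
  desktop: Desktop,
  c: number[] | undefined,
): c is [number, number] {
  if (!c || c.length !== 2) return false;
  const [x, y] = c as [number, number];
  return x >= 0 && y >= 0 && x < desktop.width && y < desktop.height;
}

// Handles a subset of actions and always returns a string, so that
// failures are reported back to the model instead of thrown.
function executeComputerAction(
  desktop: Desktop,
  action: string,
  coordinate?: number[],
  text?: string,
): string {
  switch (action) {
    case 'mouse_move': {
      if (!isOnScreen(desktop, coordinate)) {
        return 'error: mouse_move needs an on-screen [x, y] coordinate';
      }
      const [x, y] = coordinate;
      desktop.cursor = [x, y];
      desktop.log.push(`mouse_move ${x},${y}`);
      return `moved cursor to (${x}, ${y})`;
    }
    case 'left_click': {
      // Clicks happen at the current cursor position.
      const [x, y] = desktop.cursor;
      desktop.log.push(`left_click ${x},${y}`);
      return `clicked at (${x}, ${y})`;
    }
    case 'type': {
      if (text === undefined) return 'error: type needs text';
      desktop.log.push(`type ${text}`);
      return `typed ${text.length} characters`;
    }
    case 'cursor_position': {
      const [x, y] = desktop.cursor;
      return `cursor at (${x}, ${y})`;
    }
    default:
      return `error: unsupported action "${action}"`;
  }
}
```

Returning error strings rather than throwing is a design choice in this sketch: the message goes back to the model as a tool result, which gives it a chance to correct the call on the next step.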

Here's an example using the computer tool with generateText:

```typescript
import { generateText } from 'ai';
import { anthropic } from '@ai-sdk/anthropic';
import { executeComputerAction, getScreenshot } from '@/lib/ai'; // user-defined

const computerTool = anthropic.tools.computer_20241022({
  displayWidthPx: 1920,
  displayHeightPx: 1080,
  execute: async ({ action, coordinate, text }) => {
    switch (action) {
      case 'screenshot': {
        return {
          type: 'image',
          data: getScreenshot(),
        };
      }
      default: {
        return executeComputerAction(action, coordinate, text);
      }
    }
  },
  // Map tool results to content parts: strings become text, screenshots become images.
  experimental_toToolResultContent: (result) => {
    return typeof result === 'string'
      ? [{ type: 'text', text: result }]
      : [{ type: 'image', data: result.data, mimeType: 'image/png' }];
  },
});

const result = await generateText({
  model: anthropic('claude-3-5-sonnet-20241022'),
  prompt: 'Move the cursor to the center of the screen and take a screenshot',
  tools: { computer: computerTool },
});
```

You can combine these tools with the AI SDK's maxSteps feature to enable more sophisticated workflows. By setting a maxSteps value, the model can make multiple consecutive tool calls without user intervention, continuing until it determines the task is complete. This is particularly powerful for complex automation tasks that require a sequence of different operations:

```typescript
// textEditorTool and bashTool are assumed to be defined like computerTool,
// each with its own user-defined execute function.
const result = await generateText({
  model: anthropic('claude-3-5-sonnet-20241022'),
  prompt: 'Summarize the AI news from this week.',
  tools: {
    computer: computerTool,
    textEditor: textEditorTool,
    bash: bashTool,
  },
  maxSteps: 10,
});
```

Note that Anthropic computer use is currently in beta. We recommend implementing appropriate safety measures, such as using virtual machines and limiting access to sensitive data, when building applications with this functionality. To learn more, check out our computer use guide.
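The multi-step behavior that maxSteps enables can be pictured as a loop: call the model, run any tools it asks for, feed the results back, and stop when the model makes no further tool calls or the step limit is reached. The sketch below illustrates that idea only; it is not the SDK's implementation, and `runSteps` along with the `Model`, `Tools`, and `HistoryEntry` types are hypothetical.

```typescript
// Hypothetical shapes for this sketch; not the SDK's types.
type ToolCall = { toolName: string; args: unknown };
type ModelTurn = { text: string; toolCalls: ToolCall[] };
type HistoryEntry =
  | { role: 'user' | 'assistant'; text: string }
  | { role: 'tool'; toolName: string; result: string };
type Model = (history: HistoryEntry[]) => Promise<ModelTurn>;
type Tools = Record<string, (args: unknown) => Promise<string>>;

async function runSteps(
  model: Model,
  tools: Tools,
  prompt: string,
  maxSteps: number,
) {
  const history: HistoryEntry[] = [{ role: 'user', text: prompt }];
  let text = '';
  let steps = 0;

  while (steps < maxSteps) {
    steps++;
    const turn = await model(history);
    text = turn.text;
    history.push({ role: 'assistant', text: turn.text });

    // No tool calls means the model considers the task complete.
    if (turn.toolCalls.length === 0) break;

    // Run each requested tool and append its result for the next step.
    for (const call of turn.toolCalls) {
      const tool = tools[call.toolName];
      const result = tool
        ? await tool(call.args)
        : `error: unknown tool ${call.toolName}`;
      history.push({ role: 'tool', toolName: call.toolName, result });
    }
  }

  return { text, steps, history };
}
```

The step limit is what keeps a confused model from looping indefinitely, which matters all the more when the tools have real side effects on a machine.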

Continuation support

Many AI applications—from writing long-form content to generating code—require outputs that exceed the generation limits of language models. While these models can understand large amounts of content in their context window, they're typically limited in how much they can generate in a single response.

To address this common challenge, AI SDK 4.0 introduces continuation support, which detects when a generation is incomplete (i.e. when the finish reason is “length”) and continues the response across multiple steps, combining them into a single unified output. With continuation support, you can:

Generate text beyond standard output limits

Maintain coherence across multiple generations

Automatically handle word boundaries for clean output

Track combined token usage across steps

This feature works across all providers and can be used with both generateText and streamText by enabling the experimental_continueSteps setting. Here's an example of generating a long-form historical text:

```typescript
import { generateText } from 'ai';
import { openai } from '@ai-sdk/openai';

const result = await generateText({
  model: openai('gpt-4o'),
  maxSteps: 5,
  experimental_continueSteps: true,
  prompt:
    'Write a book about Roman history, ' +
    'from the founding of the city of Rome ' +
    'to the fall of the Western Roman Empire. ' +
    'Each chapter MUST HAVE at least 1000 words.',
});
```

When using continuation support with streamText, the SDK ensures clean word boundaries by only streaming complete words. Both generateText and streamText may trim trailing tokens from some calls to prevent whitespace issues.
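Conceptually, continuation amounts to repeating the generation while the finish reason is "length", concatenating the pieces, and summing token usage. The sketch below illustrates that loop under stated assumptions; it is not the SDK's implementation. The `Generate` callback, `StepResult` type, and `generateWithContinuation` function are hypothetical, and trimming trailing whitespace from truncated steps is one assumed way to avoid doubled spaces at the joins.

```typescript
type FinishReason = 'stop' | 'length';
type StepResult = {
  text: string;
  finishReason: FinishReason;
  outputTokens: number;
};
// Hypothetical model call: receives the prompt plus the text generated so far.
type Generate = (prompt: string, textSoFar: string) => Promise<StepResult>;

async function generateWithContinuation(
  generate: Generate,
  prompt: string,
  maxSteps: number,
) {
  let text = '';
  let outputTokens = 0;
  let steps = 0;
  let finishReason: FinishReason = 'length';

  // Keep going only while the previous step was cut off by the output limit.
  while (steps < maxSteps && finishReason === 'length') {
    const step = await generate(prompt, text);
    steps++;
    outputTokens += step.outputTokens;
    finishReason = step.finishReason;

    // Assumption: a truncated step drops trailing whitespace so the next
    // step can supply its own spacing without doubling it.
    text += step.finishReason === 'length' ? step.text.trimEnd() : step.text;
  }

  return { text, finishReason, outputTokens, steps };
}
```

If the loop ends because maxSteps was reached, the returned finishReason is still "length", which lets the caller tell a capped result from a complete one.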

New xAI Grok provider

The AI SDK now supports xAI through a new official provider. To use the provider, install the package:

```bash
pnpm install ai @ai-sdk/xai
```

You can then use the provider with all AI SDK Core methods. For example, here's how you can use it with generateText:

```typescript
import { xai } from '@ai-sdk/xai';
import { generateText } from 'ai';

const { text } = await generateText({
  model: xai('grok-beta'),
  prompt: 'Write a vegetarian lasagna recipe for 4 people.',
});
```

For more information, please see the AI SDK xAI provider documentation.

Additional provider updates

We've expanded our provider support to offer more options and improve performance across the board:

Cohere: Adds v2 support and tool calling capabilities

OpenAI: Predicted output support, which can reduce latency when much of the response is known in advance. Added prompt caching support for improved performance and efficiency

Google Generative AI & Vertex AI: Support for file inputs, fine-tuned models, schemas, tool choice, and frequency penalty. Added text embedding support to Vertex AI

Amazon Bedrock: Introduces support for Amazon Titan embedding models

Groq: Adds first-party Groq provider, replacing previous OpenAI-compatible provider

xAI Grok: Adds first-party xAI Grok provider, replacing previous OpenAI-compatible provider

LM Studio, Baseten, Together AI: Adds OpenAI-compatible providers

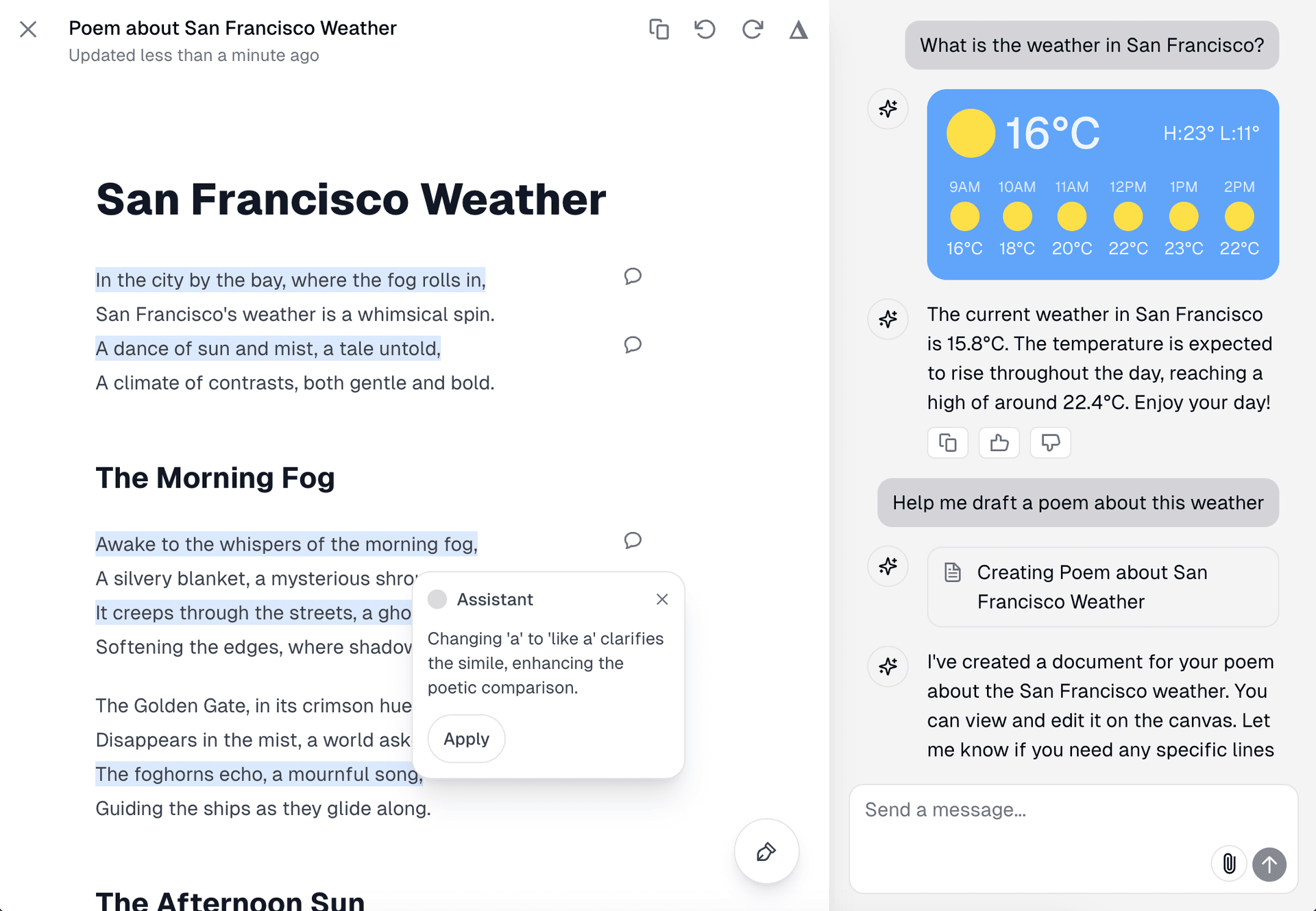

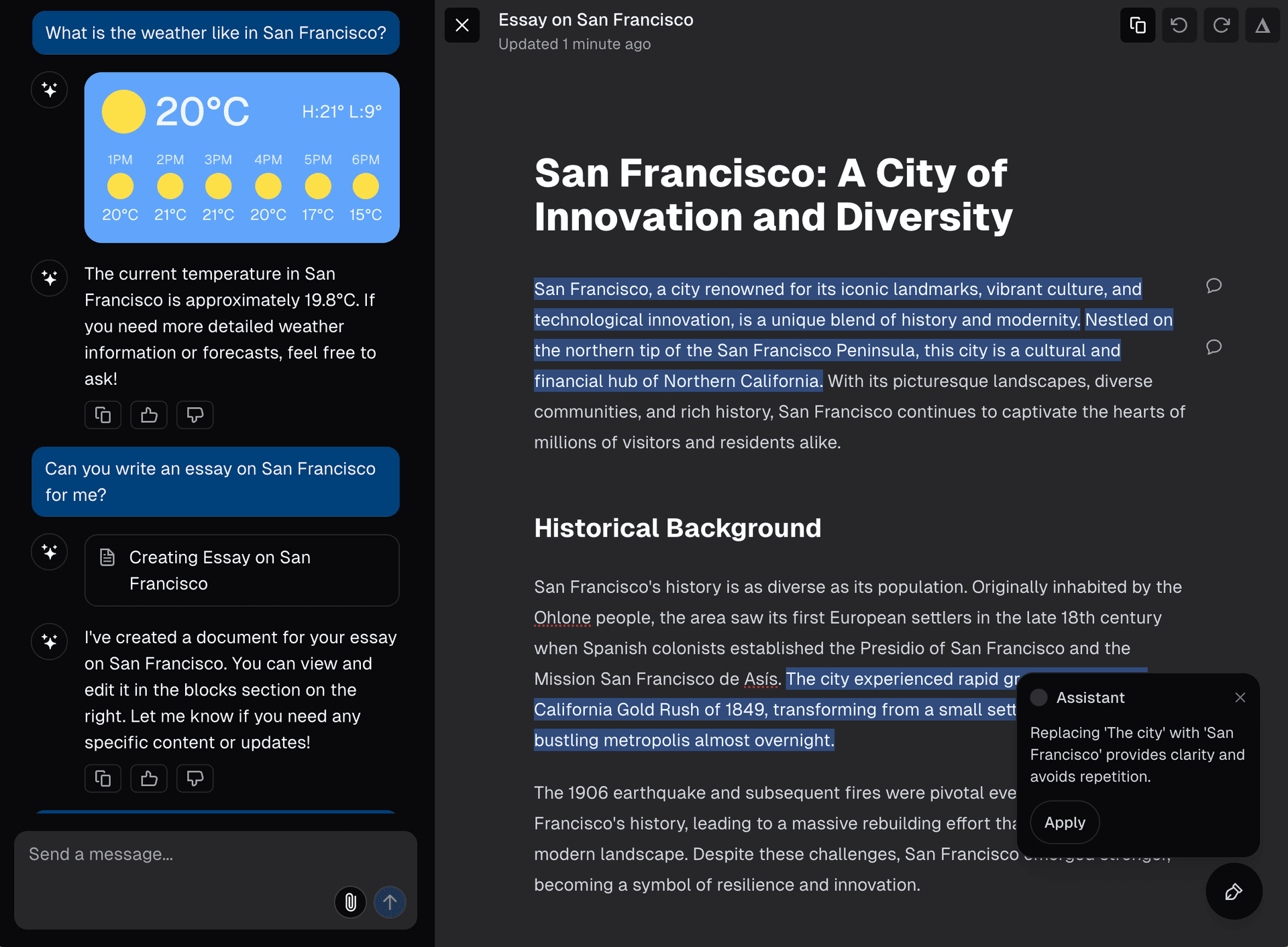

Updated chatbot template

The Next.js AI Chatbot template has been updated, incorporating everything we've learned from building v0 and the latest framework advances. Built with Next.js 15, React 19, and Auth.js 5, this new version represents the most comprehensive starting point for production-grade AI applications.

The template ships with production-ready features, including a redesigned UI with model switching, persistent PostgreSQL storage, and much more. It showcases powerful patterns for generative UI and newer variations of the chat interface with interactive workspaces (like v0 blocks) that let you integrate industry-specific workflows and tools to design hybrid AI-user collaborative experiences.

Try the AI Chatbot demo to see these features in action or deploy your own instance to start building sophisticated AI applications with battle-tested patterns and best practices.

Migrating to AI SDK 4.0

AI SDK 4.0 includes breaking changes that remove deprecated APIs. To ease the migration, you can run our automated codemods, which handle the bulk of the changes.

For a detailed overview of all changes and manual steps that might be needed, refer to our AI SDK 4.0 migration guide. The guide includes step-by-step instructions and examples to ensure a smooth update.

Getting started

With new features like PDF support, computer use, and the new xAI Grok provider, there's never been a better time to start building AI applications with the AI SDK.

Start a new AI project: Ready to build something new? Check out our latest guides

Explore our templates: Visit our Template Gallery to see the AI SDK in action

Join the community: Share what you're building in our GitHub Discussions

Contributors

AI SDK 4.0 is the result of the combined work of our core team at Vercel (Lars, Jeremy, Walter, and Nico) and many community contributors. Special thanks for contributing merged pull requests:

minpeter, hansemannn, HarshitChhipa, skull8888888, nalaso, bhavya3024, gastonfartek, michaeloliverx, mauhai, yoshinorisano, Saran33, K-Mistele, MrHertal, h4r5h4, tonyfarney.

Your feedback and contributions are invaluable as we continue to evolve the AI SDK.