3 min read

You can just ship backends

The same ease of use you expect from Vercel, now extended to your backends.

Since we introduced the AI Cloud at Vercel Ship, teams have been building AI applications that go beyond simple prompt-to-response patterns. These apps orchestrate multi-step workflows, spawn sub-agents, and run processes that take hours or days. They need backends that process data, run inference, and respond to real-time events.

You can now deploy the most popular Python and TypeScript backend frameworks with zero configuration. Vercel reads your framework and automatically provisions the infrastructure to run it.

Link to headingWhy AI applications need powerful backends

Backends process and move data, run inference, and respond to real-time events. They handle everything from pre- and post-processing of data to managing uploads, compression, caching, and logs. Increasingly, they also power agents that plan, reason, and act over time.

A year ago, most AI apps sent a prompt to a model and returned a response. Today's backends run as orchestration layers that spawn multiple sub-agents or execute workflows spanning minutes, hours, or days. This growing complexity makes reliability and iteration speed more important than ever.

Over the past four months, we’ve introduced a series of updates to make Vercel a first-class platform for these backends and background agents.

Link to headingFramework-defined Infrastructure for backends

Framework Defined Infrastructure (FDI) means Vercel reads your backend framework and understands your intent. When you deploy Next.js, the platform automatically knows how to build, route, and optimize your app. That same model now extends to backends.

Vercel supports frameworks such as FastAPI and Flask for Python, or Express, Hono, NestJS, and Nitro for TypeScript. Each comes with conventions that describe routing, concurrency, and execution. Vercel uses that context to infer how your code should scale, how requests should be routed, and what compute model to use. Your framework defines the infrastructure.

You write your backend code and Vercel figures out how it should run. No configuration files, Docker images, or custom setup required.

This means:

You can deploy any supported backend framework with zero configuration

Your backend automatically scales per endpoint, per function, and per request

Your observability, including logs, traces, and metrics, lives in the same dashboard as your frontend

The platform continuously optimizes compute allocation to match your workload's behavior

FDI eliminates the gap between writing backend code and running it in production. Developers focus entirely on application logic while Vercel ensures it runs efficiently and reliably at scale.

Link to headingA better experience for backends

We've improved the experience of building, testing, and serving backends on Vercel. These updates make backend development just as ergonomic and fast as frontend development.

Link to headingZero configuration support for popular frameworks

Vercel now supports a growing library of Python and Node.js frameworks, including Express, Hono, FastAPI, and Flask. Deploy them without a single line of configuration. The platform automatically detects and optimizes your code at build time.

In addition to the zero-config, framework-defined experience, we’re introducing a native Vercel Python SDK.

Now available in beta via pip install vercel, the SDK provides natural API abstractions to access Vercel products and services, including Sandboxes to run untrusted code, a Runtime Cache to store and retrieve data across functions, and Blob storage for frequently read resources.

Build a Python vibe coding IDE

Use this template to build an in-browser, AI-native IDE with Next.js, FastAPI, OpenAI Agents SDK, and the Vercel AI Cloud.

Deploy now

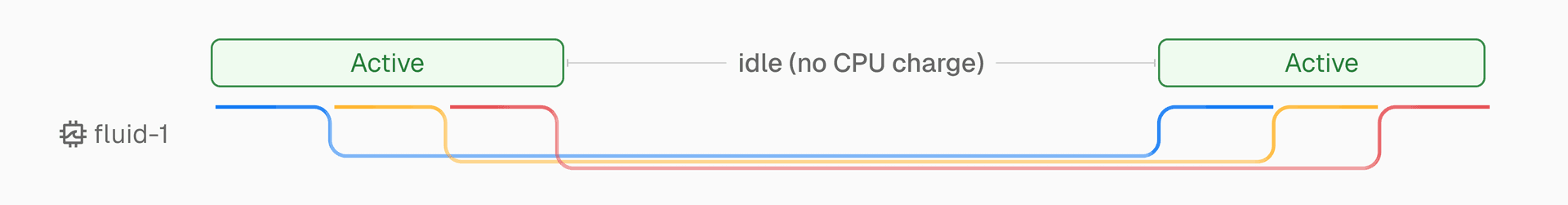

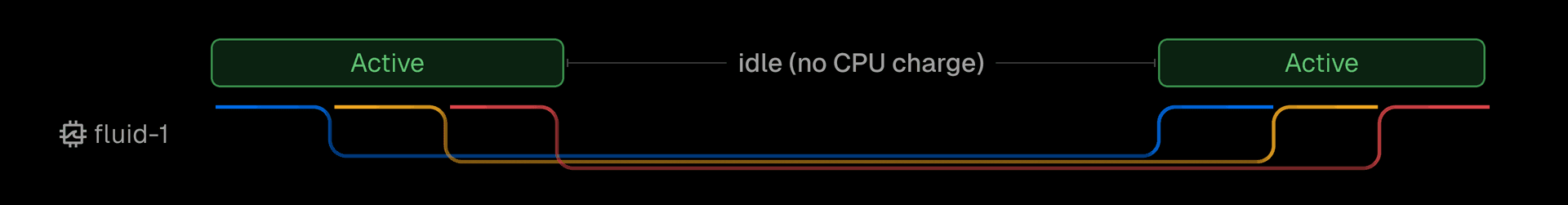

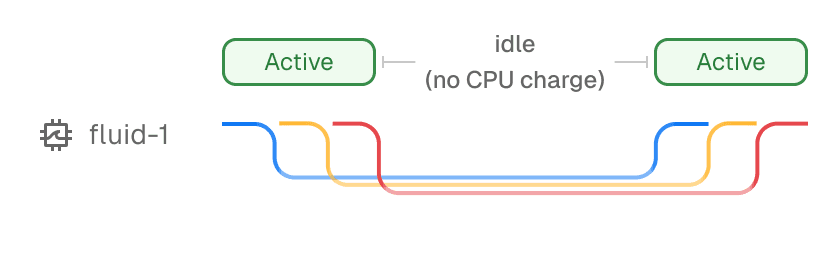

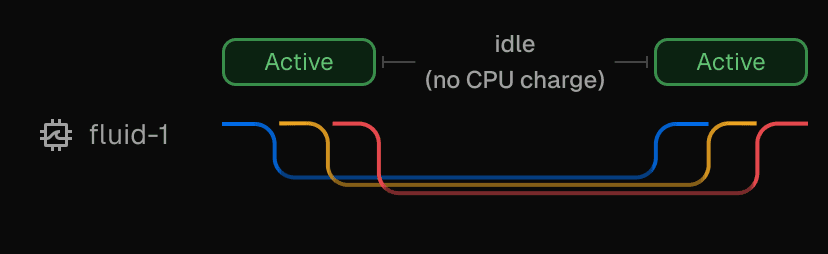

Link to headingActive CPU pricing and Fluid compute

For workloads that run intermittently, like agents that pause for input or wait on external APIs, Fluid compute ensures you only pay for time when your code is actively running. Active CPU Pricing measures execution time precisely, not wall time. No cost for idle waiting.

AI workloads on Fluid compute are optimized for on-demand scalability with efficiency gains and a pricing model that reduces costs for long-running LLM calls.

See how Fluid compute works on Vercel

Fluid compute boosts serverless performance by reusing idle compute during LLM calls, keeping functions active and scaling dynamically.

Learn more

Link to headingDurable orchestration for long-running backends

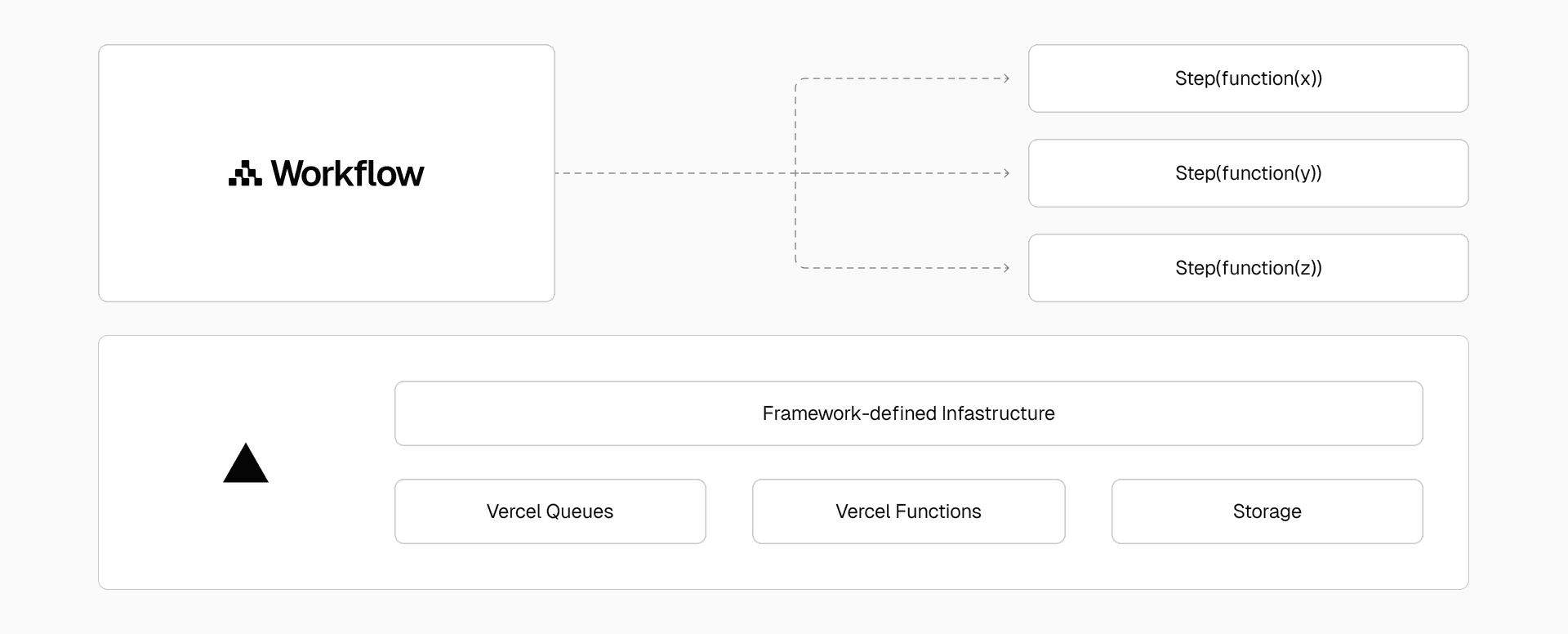

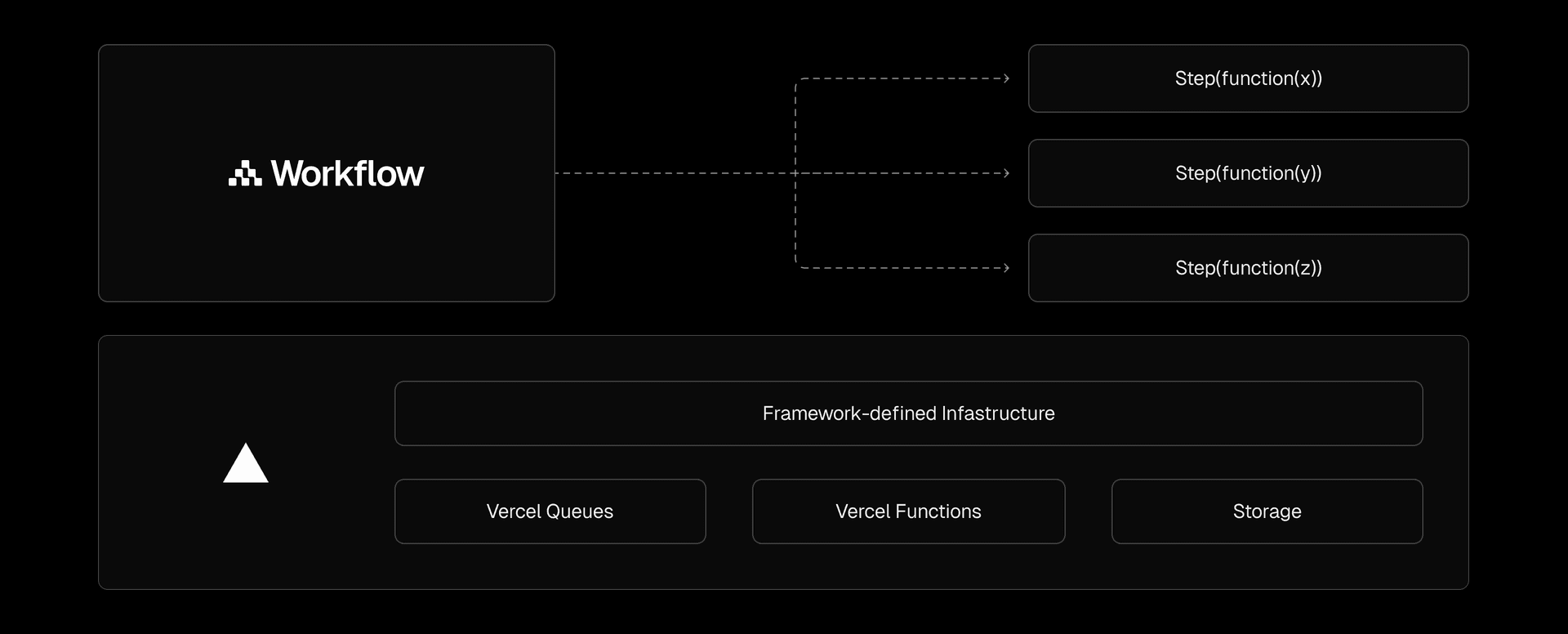

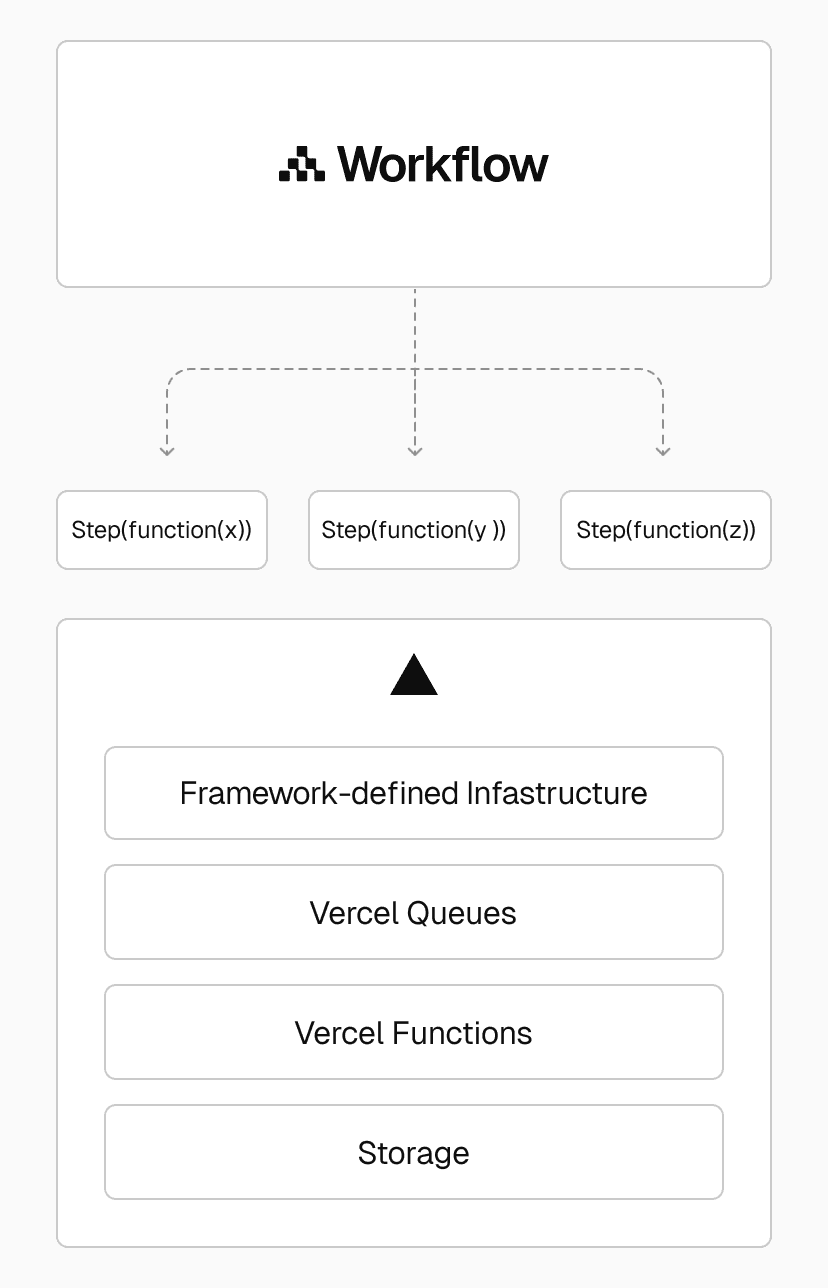

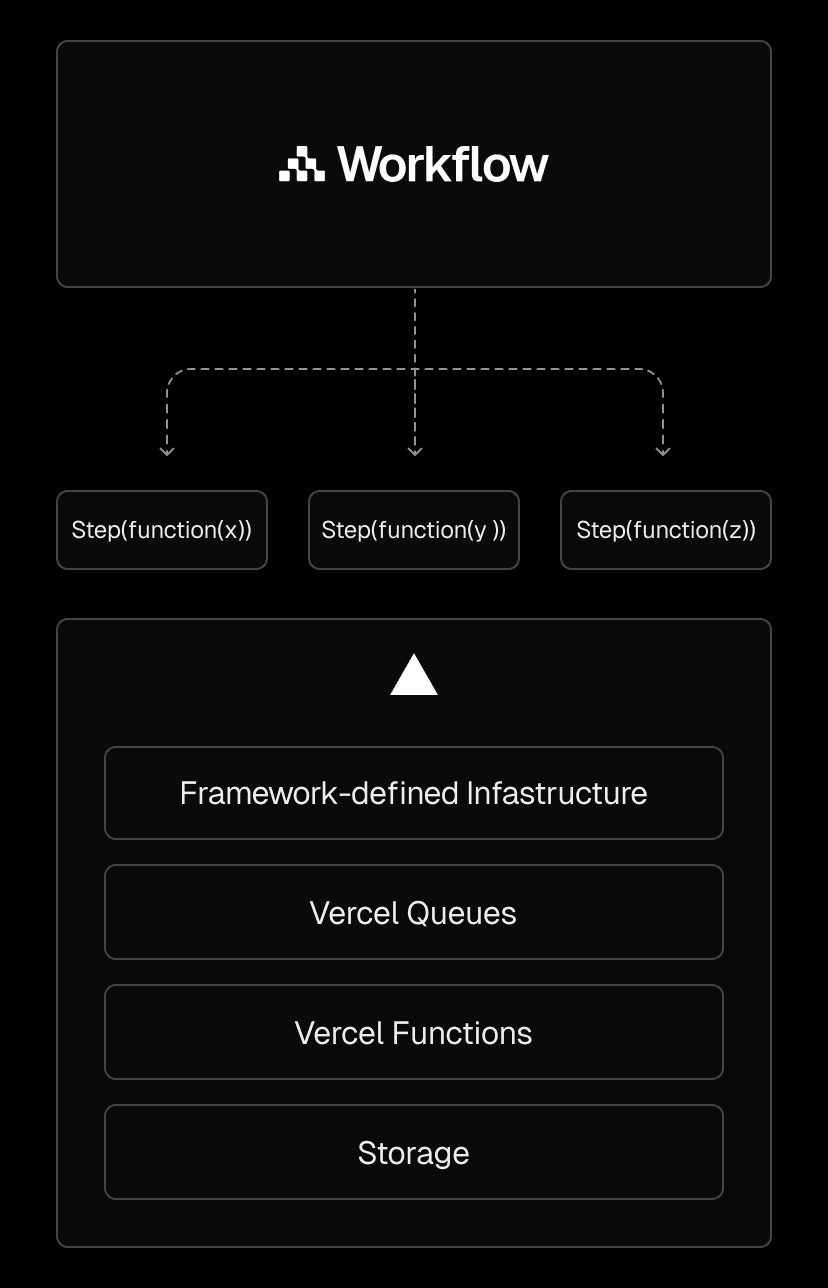

For backends that need persistence or reliability over time, the Workflow Development Kit (WDK) brings durability and observability into your code. It works for orchestrating multi-step processes like AI agent loops, data pipelines, or scheduled automations.

Link to headingGet started with new backend AI templates

We're releasing a library of backend templates that demonstrate production-ready AI patterns, including:

Chatbot systems with multi-turn memory and streaming responses

Coding assistants that reason across repositories and suggest changes

RAG pipelines that handle ingestion, embedding, and retrieval at scale

Each template demonstrates the full power of the AI Cloud, from orchestration to inference.

Link to headingOne platform for the entire stack

Every backend deployed on Vercel runs on the same orchestration, compute, and storage layers that power the AI Cloud. Together, these systems provide the foundation for fast, reliable, and cost-efficient backend development.

From real-time APIs to long-running background agents, you can build, test, and deploy every part of your application on Vercel.