
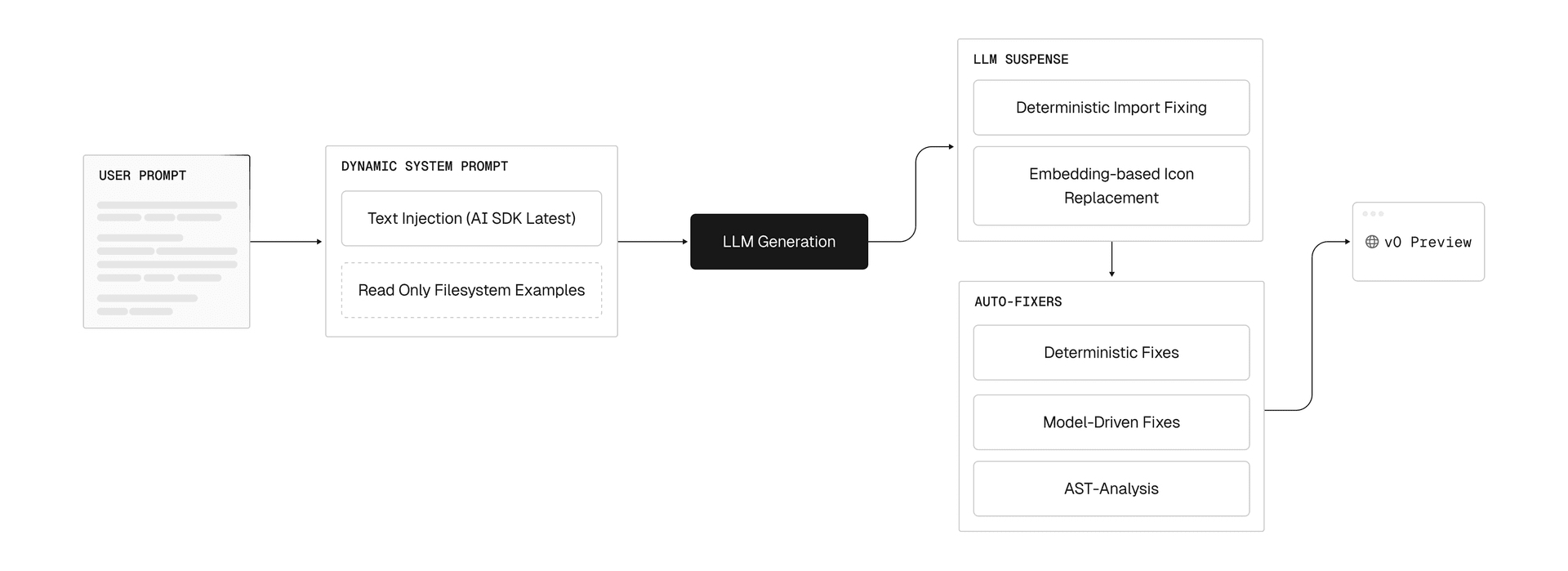

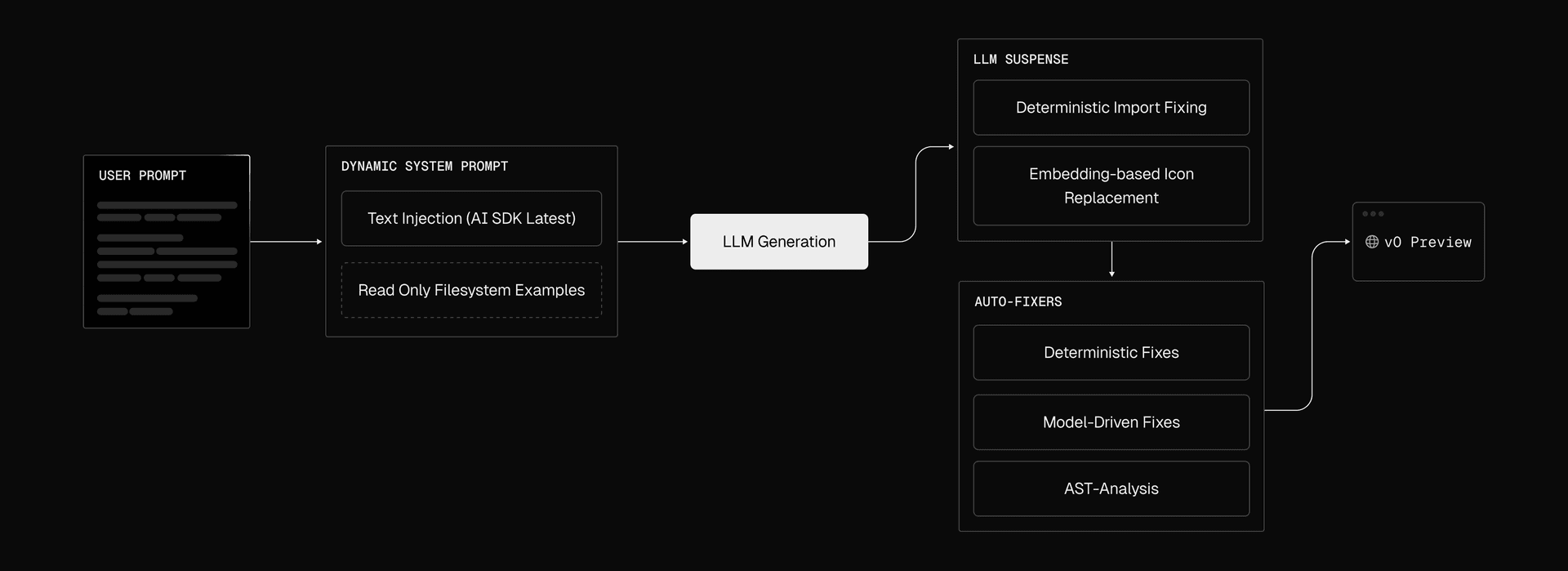

Last year we introduced the v0 Composite Model Family, and described how the v0 models operate inside a multi-step agentic pipeline. Three parts of that pipeline have had the greatest impact on reliability: the dynamic system prompt, a streaming manipulation layer that we call “LLM Suspense”, and a set of deterministic and model-driven autofixers that run while the model streams its response or after it finishes.

What we optimize for

The primary metric we optimize for is the percentage of successful generations. A successful generation is one that produces a working website in v0’s preview instead of an error or blank screen. The problem is that LLMs running in isolation hit a range of issues when generating code at scale.

In our experience, code generated by LLMs can have errors as often as 10% of the time. Our composite pipeline is able to detect and fix many of these errors in real time as the LLM streams the output. This can lead to a double-digit increase in success rates.

Dynamic system prompt

Your product’s moat cannot be your system prompt. However, that does not change the fact that the system prompt is your most powerful tool for steering the model.

For example, take AI SDK usage. AI SDK ships major and minor releases regularly. Models often rely on internal knowledge frozen at their training cutoff, but we want v0 to use the latest version. That mismatch can lead to errors like using APIs from an older version of the SDK, and these errors directly reduce our success rate.

Many agents rely on web search tools for ingesting new information. Web search is great (v0 uses it too), but it has its faults. You may get back old search results, like outdated blog posts and documentation. Further, many agents have a smaller model summarize the results of web search, which in turn becomes a bad game of telephone between the small model and parent model. The small model may hallucinate, misquote something, or omit important information.

Instead of relying on web search, we detect AI-related intent using embeddings and keyword matching. When a message is tagged as AI-related and relevant to the AI SDK, we inject knowledge into the prompt describing the targeted version of the SDK. We keep this injection consistent to maximize prompt-cache hits and keep token usage low.
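As a rough illustration of the injection step, here is a minimal TypeScript sketch. It is not v0's implementation: the `KNOWLEDGE` table, its keywords, and the placeholder texts are all hypothetical, and the embedding check is left out so that only keyword matching is shown. The point it illustrates is that injected blocks are fixed strings appended in a fixed order, so two messages that trigger the same topic produce an identical prompt.

```typescript
// Hypothetical knowledge entries: topics, keywords, and text are illustrative.
interface KnowledgeBlock {
  topic: string;
  keywords: string[]; // lowercase phrases that tag a message with this topic
  text: string; // kept byte-for-byte stable so a prompt cache can reuse it
}

const KNOWLEDGE: KnowledgeBlock[] = [
  {
    topic: "ai-sdk",
    keywords: ["ai sdk", "streamtext", "generatetext", "chatbot"],
    text: "## AI SDK notes\n(Pinned guidance for the targeted SDK version goes here.)",
  },
  {
    topic: "tanstack-query",
    keywords: ["react-query", "usequery", "usemutation"],
    text: "## TanStack Query notes\n(Pinned guidance goes here.)",
  },
];

// Keyword matching only. An embedding similarity check could be OR-ed in here.
function detectTopics(message: string): string[] {
  const lower = message.toLowerCase();
  return KNOWLEDGE.filter((block) =>
    block.keywords.some((kw) => lower.indexOf(kw) !== -1)
  ).map((block) => block.topic);
}

// Blocks are appended in the fixed order of KNOWLEDGE, never in the order the
// keywords appeared, so the same set of topics always yields the same string.
function buildSystemPrompt(basePrompt: string, message: string): string {
  const topics = detectTopics(message);
  const sections = KNOWLEDGE.filter(
    (block) => topics.indexOf(block.topic) !== -1
  ).map((block) => block.text);
  return [basePrompt].concat(sections).join("\n\n");
}
```

A message with no matching keywords returns the base prompt unchanged, which keeps unrelated requests from paying for tokens they do not need.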

In addition to text injection, we worked with the AI SDK team to provide examples in the v0 agent’s read-only filesystem. These are hand-curated directories with code samples designed for LLM consumption. When v0 decides to use the SDK, it can search these directories for relevant patterns such as image generation, routing, or integrating web search tools.

These dynamic system prompts are used for a variety of topics, including frontend frameworks and integrations.

LLM Suspense

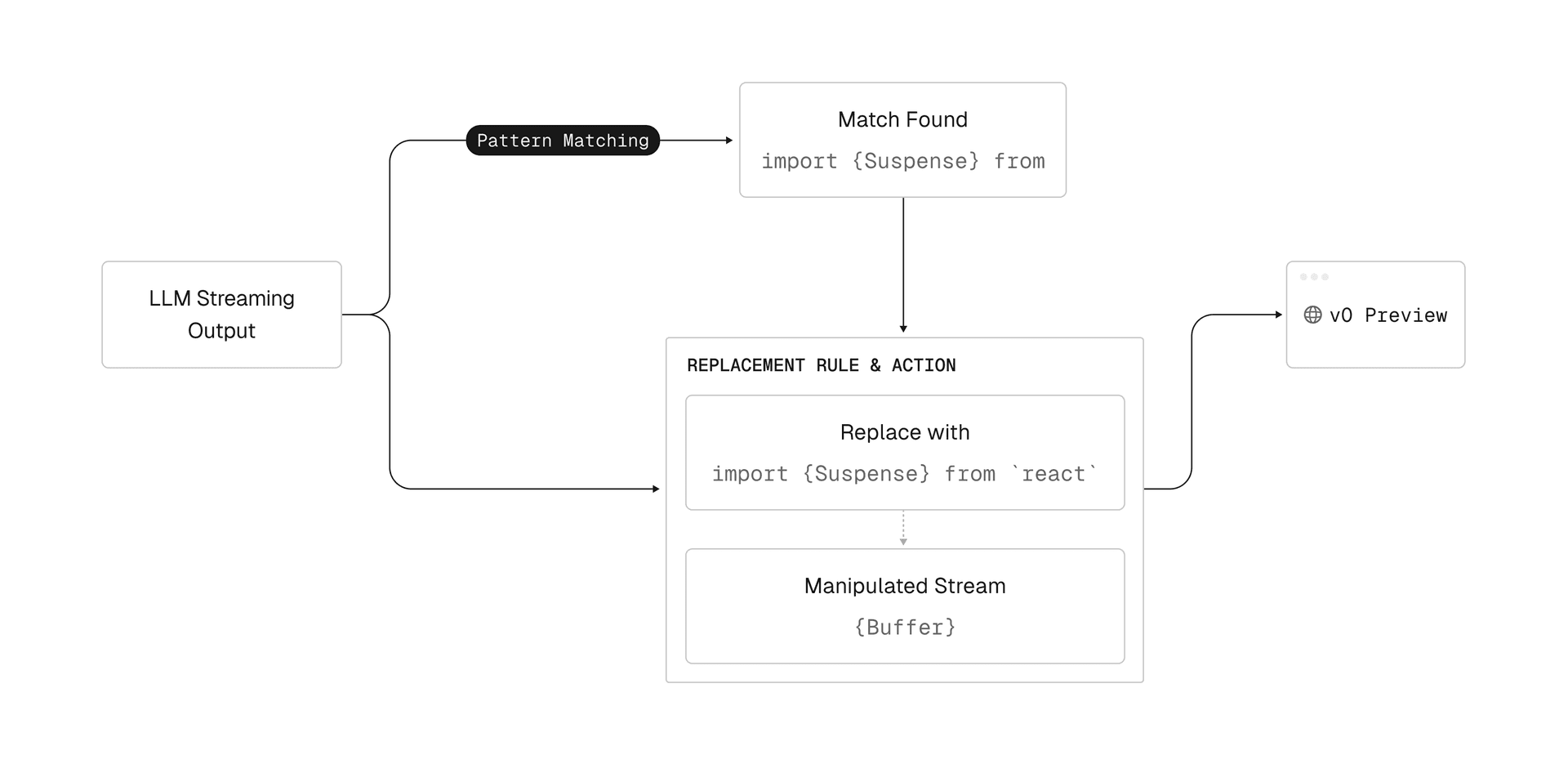

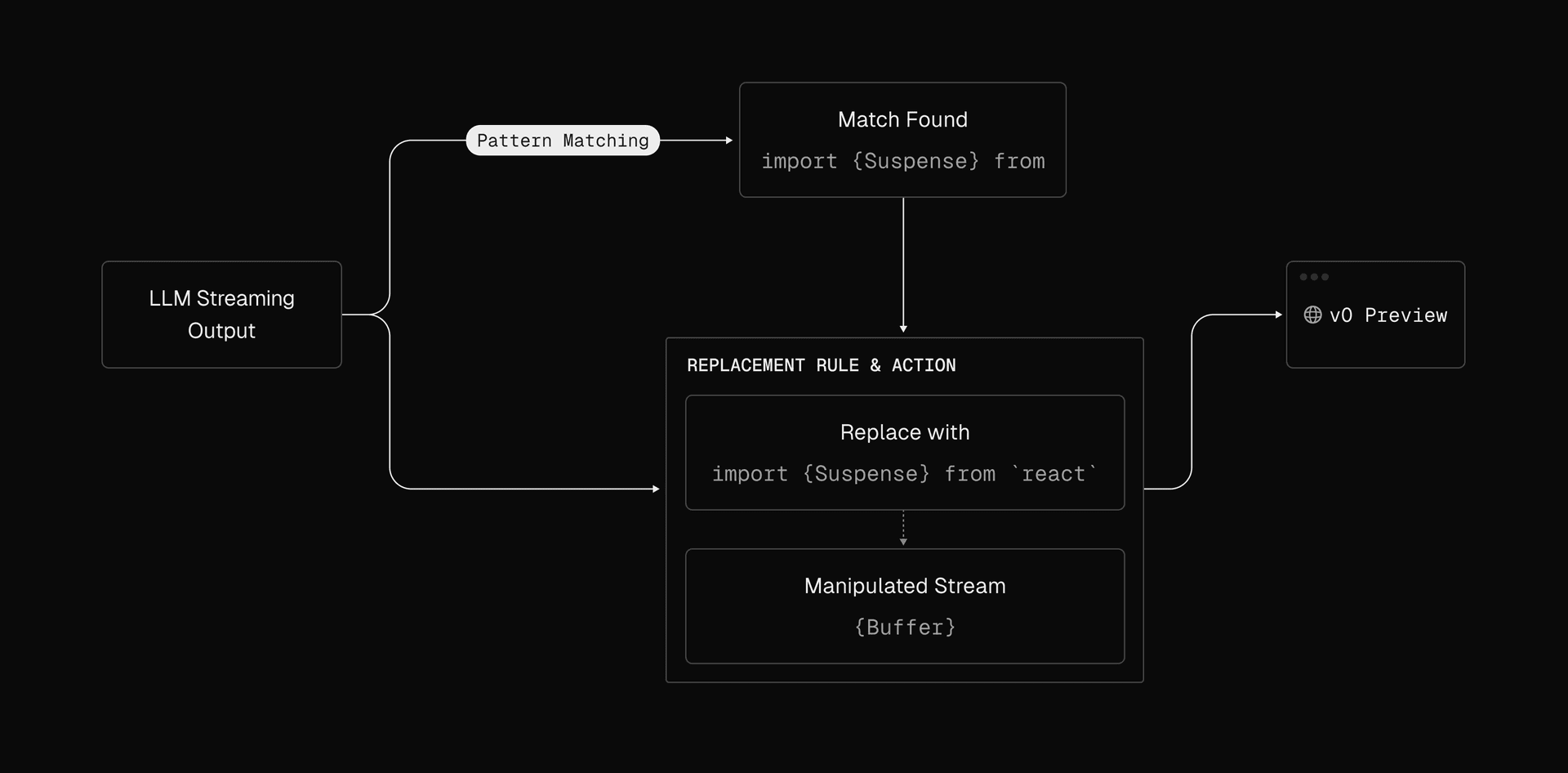

LLM Suspense is a framework that manipulates text as it streams to the user. This includes actions like find-and-replace for cleaning up incorrect imports, but can become much more sophisticated.
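To make the streaming find-and-replace idea concrete, here is a minimal TypeScript sketch under stated assumptions. `LineBufferedRewriter` and `fixButtonImport` are hypothetical names, and the import paths in the rule are invented for illustration. The sketch holds back text until a full line has arrived, applies the rules, and only then releases it, so a partially streamed import is never shown in its incorrect form.

```typescript
type LineRule = (line: string) => string;

// Hypothetical rule: the import paths are illustrative, not v0's actual rules.
// The backreference \1 accepts either quote style and preserves it.
const fixButtonImport: LineRule = (line) =>
  line.replace(
    /from\s+(['"])@\/components\/button\1/,
    "from $1@/components/ui/button$1"
  );

class LineBufferedRewriter {
  private pending = "";
  private rules: LineRule[];

  constructor(rules: LineRule[]) {
    this.rules = rules;
  }

  // Accepts one streamed chunk and returns the text that is safe to display.
  push(chunk: string): string {
    this.pending += chunk;
    const lastNewline = this.pending.lastIndexOf("\n");
    if (lastNewline === -1) return ""; // no complete line yet, keep holding
    const complete = this.pending.slice(0, lastNewline + 1);
    this.pending = this.pending.slice(lastNewline + 1);
    return complete
      .split("\n")
      .map((line) => this.apply(line))
      .join("\n");
  }

  // Call once the stream ends to release the final, unterminated line.
  flush(): string {
    const rest = this.apply(this.pending);
    this.pending = "";
    return rest;
  }

  private apply(line: string): string {
    return this.rules.reduce((text, rule) => rule(text), line);
  }
}
```

Buffering by line is a simplification: it adds a small delay to each line and cannot fix constructs that span several lines, which would need a larger buffer or a parser.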

Two examples show the flexibility it provides:

A simple example is substituting long strings that the LLM refers to often. When a user uploads an attachment, we give v0 a blob storage URL. That URL can be very long (hundreds of characters), which can cost tens of tokens and hurt performance.

Before we invoke the LLM, we replace the long URLs with shorter versions that get transformed into the proper URL after the LLM finishes its response. This means the LLM reads and writes fewer tokens, saving our users money and time.
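The round trip can be sketched in a few lines of TypeScript. This is an illustration rather than v0's code: `shortenUrls`, `restoreUrls`, and the `https://blob.example/<n>/f` alias format are assumptions made up for the example. The alias format is chosen so that no alias is a substring of another, which keeps the restore step unambiguous.

```typescript
interface AliasMap {
  [alias: string]: string;
}

interface ShortenedText {
  text: string;
  aliases: AliasMap;
}

// Replace each long URL with a short, unique alias before the model sees it.
// Assumes no entry in longUrls is a substring of another entry.
function shortenUrls(text: string, longUrls: string[]): ShortenedText {
  const aliases: AliasMap = {};
  let result = text;
  longUrls.forEach((url, index) => {
    // Hypothetical alias format; ".../1/f" is never a substring of ".../10/f".
    const alias = "https://blob.example/" + index + "/f";
    aliases[alias] = url;
    result = result.split(url).join(alias); // literal replace-all, no regex
  });
  return { text: result, aliases };
}

// Swap every alias in the model's output back to the real URL.
function restoreUrls(text: string, aliases: AliasMap): string {
  return Object.keys(aliases).reduce(
    (out, alias) => out.split(alias).join(aliases[alias]),
    text
  );
}
```

Using `split` and `join` instead of a regular expression avoids having to escape characters such as `?` and `.` that commonly appear in signed storage URLs.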

In production, these simple rules handle variations in quoting, formatting, and mixed import blocks. Because this happens during streaming, the user never sees an intermediate incorrect state.

Suspense can also handle more complex cases. By default, v0 uses the lucide-react icon library. It updates weekly, adding and removing icons. This means the LLM will often reference icons that no longer exist or never existed.

To correct this deterministically, we:

Embed every icon name in a vector database.

Analyze actual exports from lucide-react at runtime.

Pass through the correct icon when available.

When the icon does not exist, run an embedding search to find the closest match.

Rewrite the import during streaming.

For example, a request for a "Vercel logo icon" might produce:

import { VercelLogo } from 'lucide-react'

LLM Suspense will replace this with:

import { Triangle as VercelLogo } from 'lucide-react'

This process completes within 100 milliseconds and requires no further model calls.
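The rewrite step above can be sketched as follows. This is a simplified TypeScript illustration, not the production code: `fixLucideImport` and `closestByBigrams` are hypothetical names, the list of valid exports is assumed to be supplied by the caller, and the embedding search is replaced by a crude character-bigram similarity so the example has no dependencies. Valid icons pass through untouched, and an unknown icon is swapped for the closest match while keeping the name the rest of the file already uses.

```typescript
type ClosestFn = (iconName: string, validExports: string[]) => string;

// Stand-in for the embedding search: picks the export that shares the most
// character bigrams with the requested name. A real system would compare
// embedding vectors instead, which is what lets it match by meaning.
const closestByBigrams: ClosestFn = (iconName, validExports) => {
  const grams = (s: string): string[] => {
    const lower = s.toLowerCase();
    const out: string[] = [];
    for (let i = 0; i < lower.length - 1; i++) out.push(lower.slice(i, i + 2));
    return out;
  };
  const wanted = grams(iconName);
  let best = validExports[0];
  let bestScore = -1;
  validExports.forEach((candidate) => {
    const have = grams(candidate);
    const shared = wanted.filter((g) => have.indexOf(g) !== -1).length;
    const score = (2 * shared) / (wanted.length + have.length || 1);
    if (score > bestScore) {
      bestScore = score;
      best = candidate;
    }
  });
  return best;
};

// Rewrites a single-line named import from lucide-react. Other lines, and
// imports in other shapes, are returned unchanged.
function fixLucideImport(
  line: string,
  validExports: string[],
  closest: ClosestFn = closestByBigrams
): string {
  const match =
    /^(\s*import\s*\{)([^}]*)(\}\s*from\s*['"]lucide-react['"];?\s*)$/.exec(line);
  if (!match || validExports.length === 0) return line;
  const fixed = match[2]
    .split(",")
    .map((part) => part.trim())
    .filter((part) => part.length > 0)
    .map((spec) => {
      const pieces = spec.split(/\s+as\s+/);
      const imported = pieces[0];
      const local = pieces[1] || imported;
      if (validExports.indexOf(imported) !== -1) return spec; // pass through
      // Alias the replacement so existing JSX that uses `local` still works.
      return closest(imported, validExports) + " as " + local;
    });
  return match[1] + " " + fixed.join(", ") + " " + match[3];
}
```

The bigram stand-in can map a name like `HomeIcon` to `Home`, but it has no notion of meaning, so it could not map `VercelLogo` to `Triangle`. That kind of match is what the embedding search is for, which is why `closest` is passed in as a parameter here.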

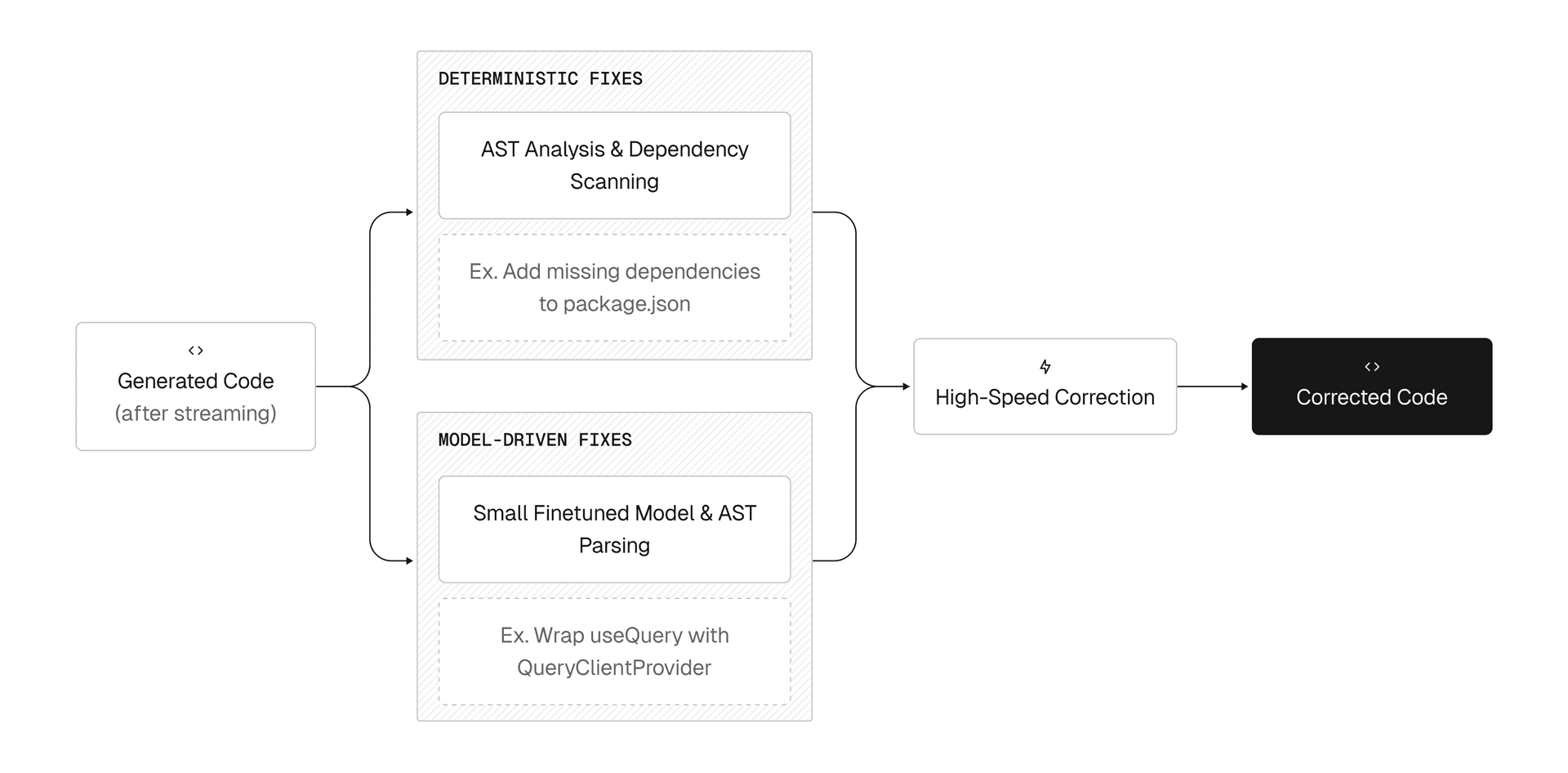

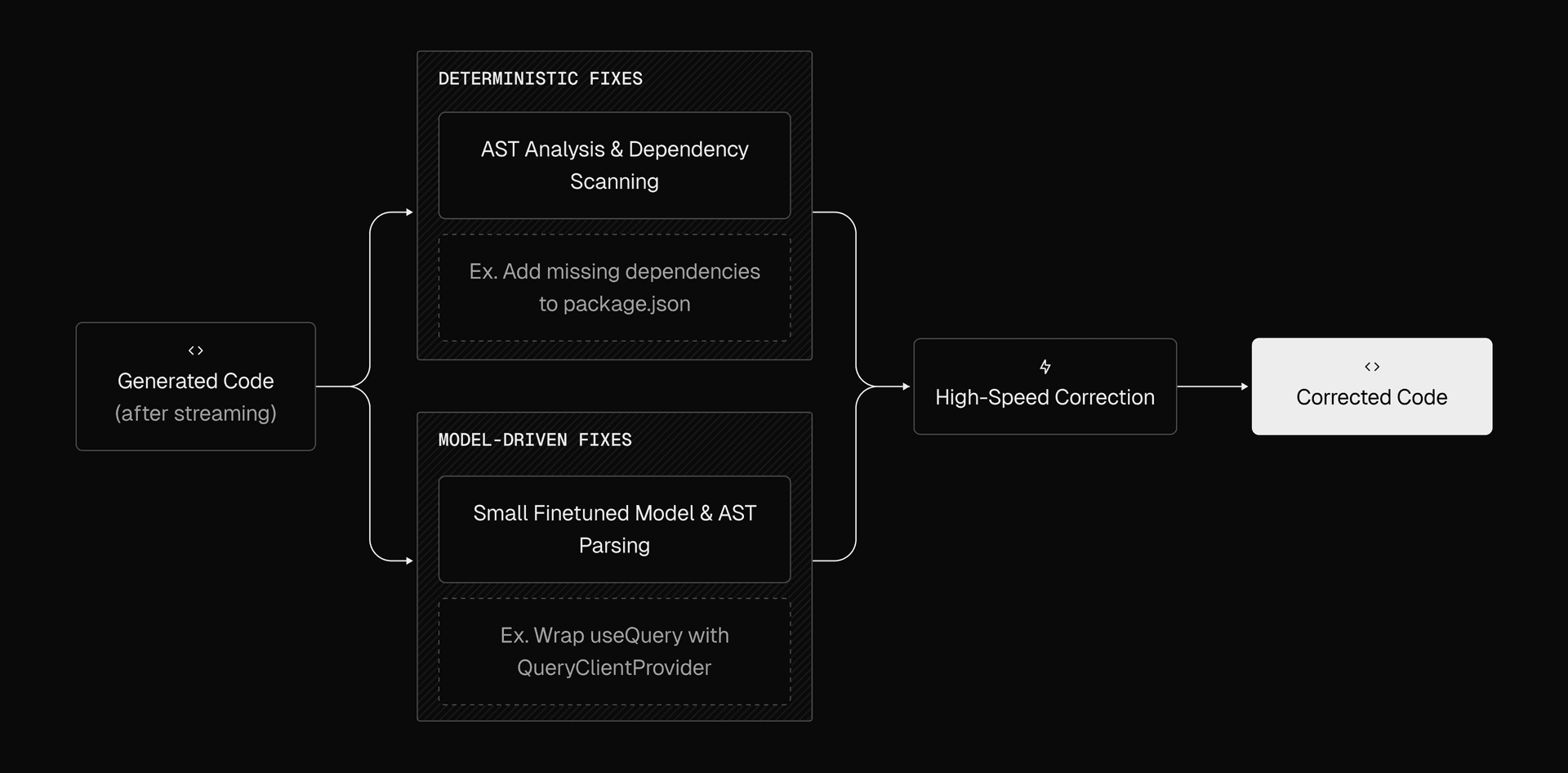

Autofixers

Sometimes, there are issues that our system prompt and LLM Suspense cannot fix. These often involve changes across multiple files or require analyzing the abstract syntax tree (AST).

For these cases, we collect errors after streaming and pass them through our autofixers. These include deterministic fixes and a small, fast, fine-tuned model trained on data from a large volume of real generations.

Some autofix examples include:

useQuery and useMutation from @tanstack/react-query require being wrapped in a QueryClientProvider. We parse the AST to check whether they're wrapped, but the autofix model determines where to add it.

Completing missing dependencies in package.json by scanning the generated code and deterministically updating the file.

Repairing common JSX or TypeScript errors that slip through Suspense transformations.
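As one illustration of a deterministic fixer, here is a minimal TypeScript sketch of the missing-dependency case. It is written under assumptions and is not the real autofixer: `addMissingDependencies` and `packageNameOf` are hypothetical names, imports are found with a regular expression rather than an AST, `@/` and `~/` are assumed to be project path aliases, and `"latest"` is only a placeholder version.

```typescript
interface PackageJson {
  name?: string;
  dependencies?: { [pkgName: string]: string };
}

// Maps an import specifier to an npm package name, or null when the
// specifier is a relative path, a path alias, or a node: built-in.
function packageNameOf(specifier: string): string | null {
  if (/^(\.|\/|@\/|~\/|node:)/.test(specifier)) return null;
  const parts = specifier.split("/");
  // Scoped packages keep two segments: "@scope/pkg/sub" -> "@scope/pkg".
  return specifier.charAt(0) === "@" ? parts.slice(0, 2).join("/") : parts[0];
}

// Returns a copy of pkg whose dependencies include every package imported
// by the given source files. Existing version ranges are left untouched.
function addMissingDependencies(pkg: PackageJson, sources: string[]): PackageJson {
  const deps: { [pkgName: string]: string } = {};
  const existing = pkg.dependencies || {};
  Object.keys(existing).forEach((key) => {
    deps[key] = existing[key];
  });
  // Matches `from "x"`, side-effect `import "x"`, and `require("x")`.
  const importRe = /(?:\bfrom\s*|\bimport\s*|\brequire\(\s*)['"]([^'"]+)['"]/g;
  sources.forEach((source) => {
    importRe.lastIndex = 0;
    let m: RegExpExecArray | null = importRe.exec(source);
    while (m !== null) {
      const pkgName = packageNameOf(m[1]);
      if (pkgName !== null && !Object.prototype.hasOwnProperty.call(deps, pkgName)) {
        deps[pkgName] = "latest"; // placeholder: a real fixer would pin a version
      }
      m = importRe.exec(source);
    }
  });
  return { ...pkg, dependencies: deps };
}
```

A regular expression can be fooled by import-like text inside strings or comments, so an AST-based scan would be the more robust choice where accuracy matters.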

These fixes run in under 250 milliseconds and only when needed, allowing us to maintain low latency while increasing reliability.

Putting it together

Combining the dynamic system prompt, LLM Suspense, and autofixers gives us a pipeline that produces stable, functioning generations at higher rates than a standalone model. Each part of the pipeline addresses a specific failure mode, and together they significantly increase the likelihood that users see a rendered website in v0 on the first attempt.